MCGraph: Multi-Criterion Representation for Scene Understanding

- Moos Hueting*

- Aron Monszpart*

- Nicolas Mellado

University College London

SIGGRAPH Asia 2014 Workshop on Indoor Scene Understanding: Where Graphics meets Vision

\* Joint first authors

Abstract

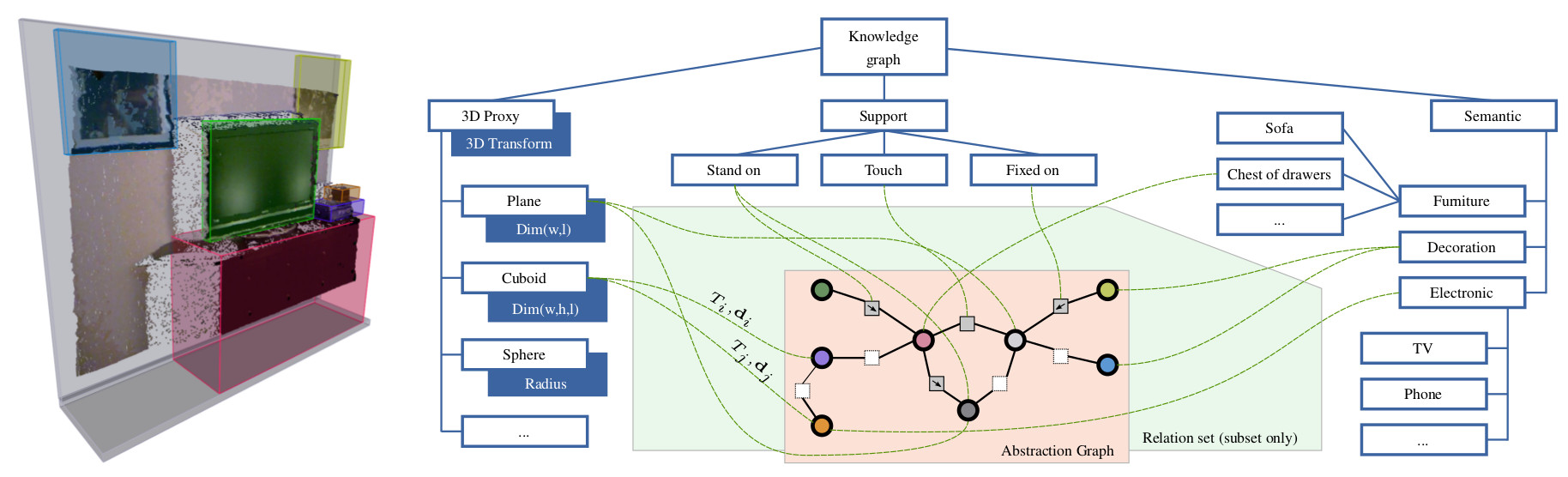

The field of scene understanding endeavours to extract a broad range of information from 3D scenes. Current approaches exploit one, or at most a few, criteria (e.g., spatial, semantic, or functional information) simultaneously for analysis. We argue that taking scene understanding to the next level of performance requires considering many different, and possibly previously unconsidered, types of knowledge simultaneously. A unified representation for this type of processing is as yet missing. In this work we propose MCGraph: a unified multi-criterion data representation for understanding and processing large-scale 3D scenes. Scene abstraction and prior knowledge are kept separate but highly connected. To this end, primitives (i.e., proxies) and their relationships (e.g., contact, support, hierarchical) are stored in an abstraction graph, while the different categories of prior knowledge necessary for processing are stored separately in a knowledge graph. These graphs complement each other bidirectionally and are processed concurrently. We illustrate our approach by expressing previous techniques in our formulation, and present promising avenues of research opened up by such a representation. We also distribute a set of MCGraph annotations for a small number of NYU2 scenes, to be used as ground-truth multi-criterion abstractions.
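The separation between an abstraction graph and a knowledge graph, joined by bidirectional links, can be pictured with a minimal Python sketch. It is illustrative only and not the authors' implementation: the class name `MCGraphSketch`, its method names, and the example labels (`table_top`, `mug`, `graspable`) are all assumptions introduced here.

```python
from dataclasses import dataclass, field


@dataclass
class MCGraphSketch:
    """Hypothetical container; every name here is an illustrative assumption."""

    # Abstraction graph: primitives (proxies) and typed relations between them.
    primitives: dict = field(default_factory=dict)  # primitive id -> proxy type
    relations: list = field(default_factory=list)   # (source id, relation, target id)
    # Knowledge graph: prior-knowledge nodes, stored apart from the scene abstraction.
    knowledge: dict = field(default_factory=dict)   # knowledge id -> category
    # Cross-graph links, stored once and queried from either side.
    links: set = field(default_factory=set)         # (primitive id, knowledge id)

    def add_primitive(self, pid, proxy):
        self.primitives[pid] = proxy

    def add_knowledge(self, kid, category):
        self.knowledge[kid] = category

    def add_relation(self, src, relation, dst):
        # Relations may only connect primitives already in the abstraction graph.
        if src not in self.primitives or dst not in self.primitives:
            raise KeyError("both endpoints must be known primitives")
        self.relations.append((src, relation, dst))

    def link(self, pid, kid):
        # A link must join an existing primitive to an existing knowledge node.
        if pid not in self.primitives or kid not in self.knowledge:
            raise KeyError("link endpoints must exist in their respective graphs")
        self.links.add((pid, kid))

    def knowledge_of(self, pid):
        # Abstraction -> knowledge direction.
        return sorted(k for p, k in self.links if p == pid)

    def primitives_with(self, kid):
        # Knowledge -> abstraction direction.
        return sorted(p for p, k in self.links if k == kid)


# Toy scene: a mug supported by a table top (labels are made up for illustration).
scene = MCGraphSketch()
scene.add_primitive("table_top", "cuboid")
scene.add_primitive("mug", "cylinder")
scene.add_relation("table_top", "support", "mug")
scene.add_knowledge("table", "semantic")
scene.add_knowledge("graspable", "functional")
scene.link("table_top", "table")
scene.link("mug", "graspable")
```

Storing the links once and querying them from both sides is one simple way to keep the two graphs separate while still letting each inform the other; a real system would likely need richer node and edge attributes than this sketch carries.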