An Image Degradation Model for Depth-augmented Image Editing

- James W. Hennessey

- Niloy J. Mitra

University College London

Symposium on Geometry Processing 2015

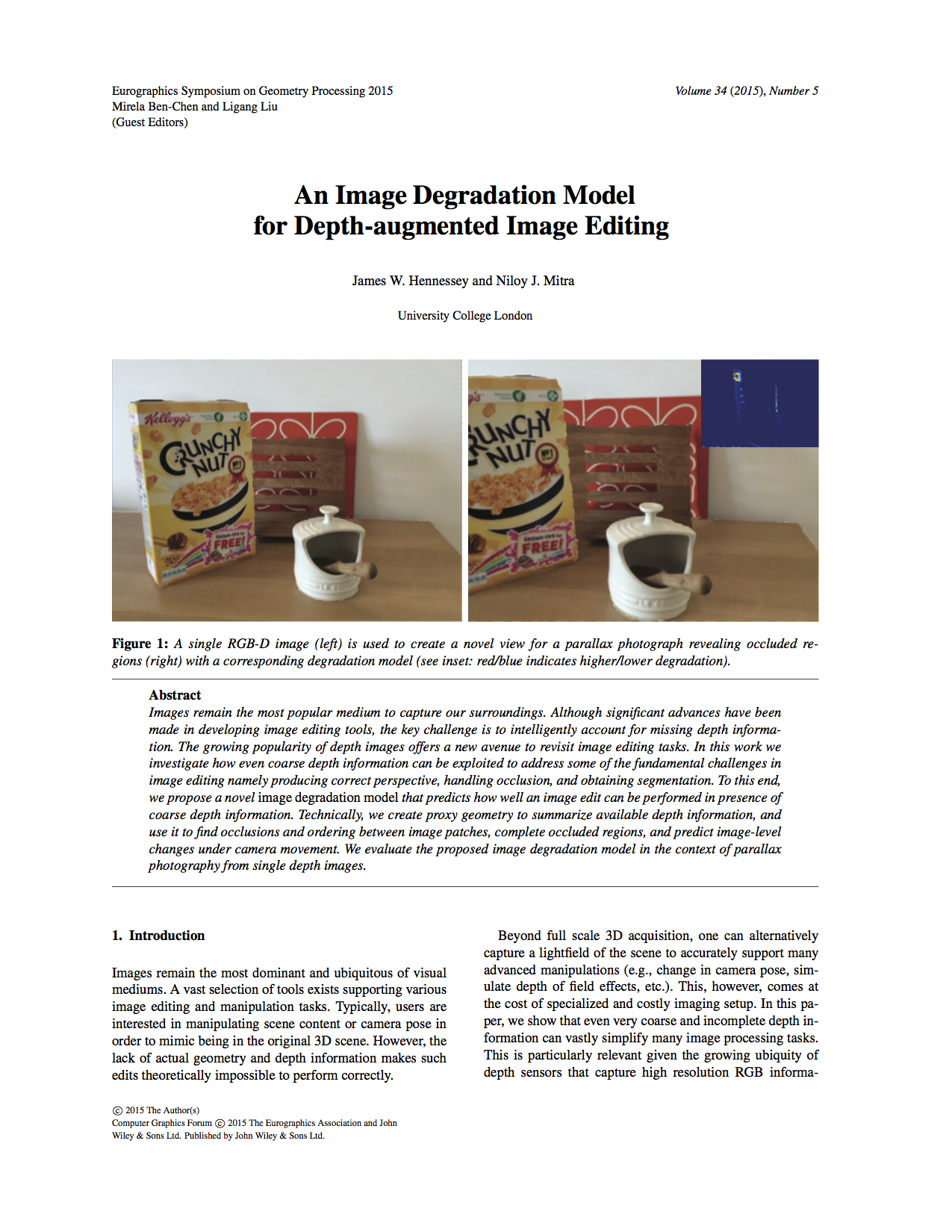

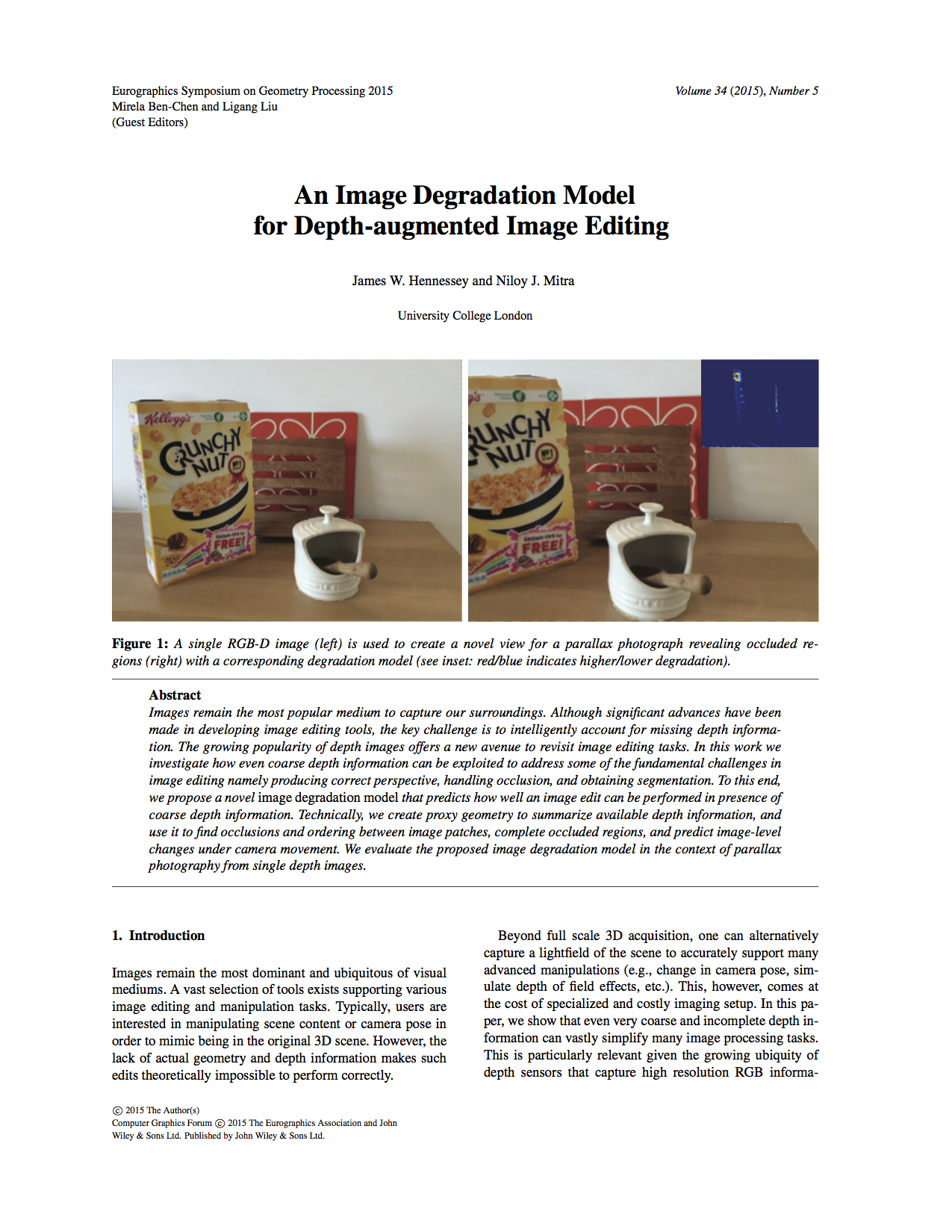

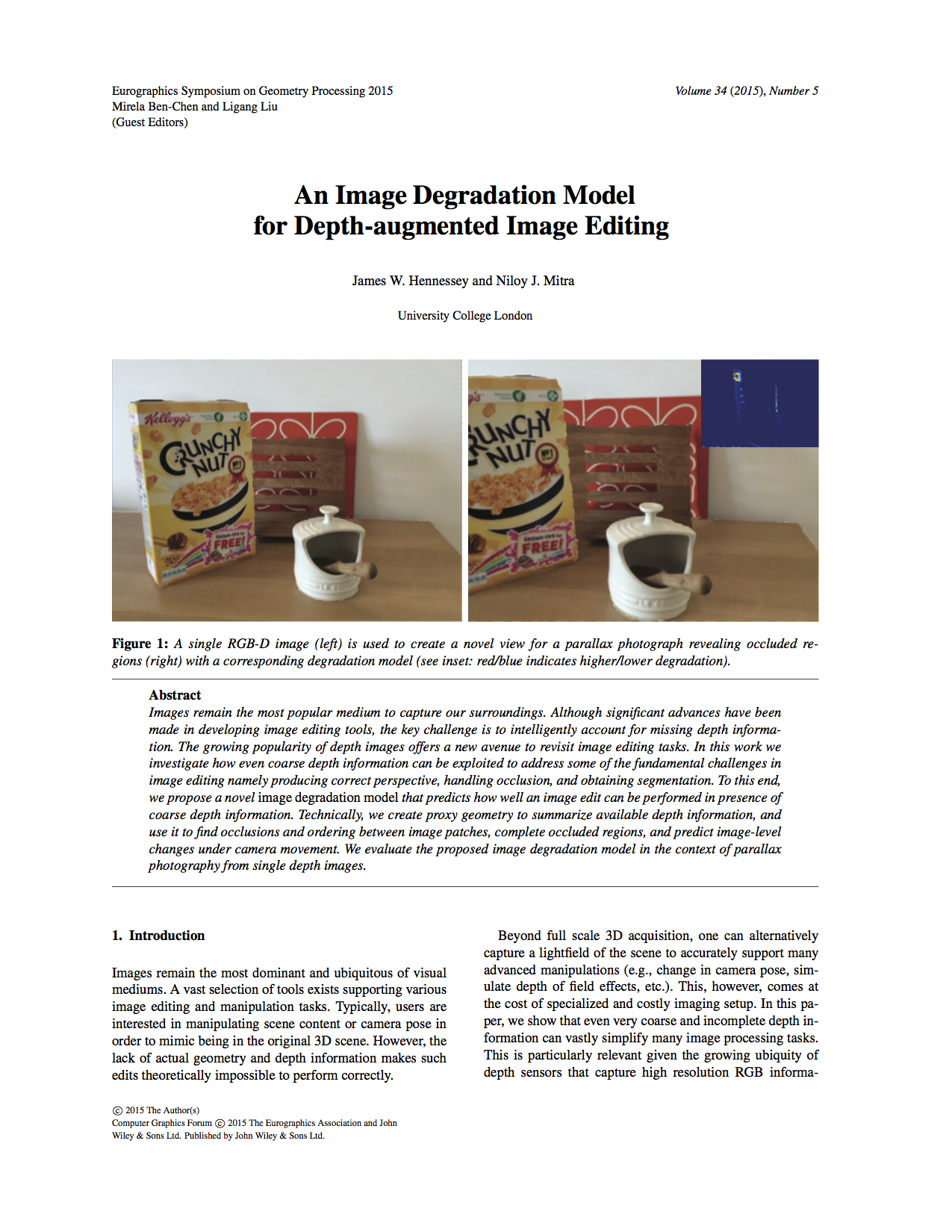

Figure 1: A single RGB-D image (left) is used to create a novel view for a parallax photograph, revealing occluded regions (right), with a corresponding degradation model (see inset: red/blue indicates higher/lower degradation).

Abstract

Images remain the most popular medium to capture our surroundings. Although significant advances have been made in developing image editing tools, the key challenge is to intelligently account for missing depth information.

The growing popularity of depth images offers a new avenue to revisit image editing tasks. In this work, we investigate how even coarse depth information can be exploited to address some of the fundamental challenges in image editing, namely producing correct perspective, handling occlusion, and obtaining segmentation.

To this end, we propose a novel image degradation model that predicts how well an image edit can be performed in the presence of coarse depth information.

Technically, we create proxy geometry to summarize available depth information, and use it to find occlusions and ordering between image patches, complete occluded regions, and predict image-level changes under camera movement.
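As a rough illustration of how depth lets one anticipate image-level changes under camera movement, the following sketch forward-warps a single image row for a sideways camera shift and reports which target pixels receive no source pixel. This is not the degradation model from the paper: the pinhole shift `focal_px * baseline / z`, the function names `warp_scanline` and `hole_fraction`, and the use of the hole fraction as a score are all assumptions made for this example.

```python
import math


def warp_scanline(depths, focal_px, baseline):
    """Forward-warp one image row for a sideways camera shift.

    depths   : per-pixel depth along the row (same units as baseline, all > 0)
    focal_px : focal length in pixels (hypothetical parameter)
    baseline : camera translation to the right

    Returns a list giving, for each target column, the source column
    that lands there, or None if no source pixel maps to it.
    """
    width = len(depths)
    source_of = [None] * width
    nearest = [math.inf] * width
    for x, z in enumerate(depths):
        # Moving the camera right shifts a point left by f * b / z pixels,
        # so nearby surfaces move further than distant ones.
        new_x = math.floor(x - focal_px * baseline / z + 0.5)
        # Z-buffer test: when two pixels collide, the nearer surface wins.
        if 0 <= new_x < width and z < nearest[new_x]:
            source_of[new_x] = x
            nearest[new_x] = z
    return source_of


def hole_fraction(source_of):
    """Fraction of target pixels with no source pixel (toy score)."""
    return sum(1 for s in source_of if s is None) / len(source_of)


# A foreground object (depth 2) in front of a background (depth 4).
row_depths = [4.0, 4.0, 4.0, 4.0, 2.0, 2.0, 4.0, 4.0]
warped = warp_scanline(row_depths, focal_px=4.0, baseline=1.0)
score = hole_fraction(warped)
```

In this toy row, the holes appear where the foreground object uncovers background and where new content enters at the image border. A larger camera shift exposes more unseen pixels, which is the intuition behind flagging some novel views as more degraded than others.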

We evaluate the proposed image degradation model in the context of parallax photography from single depth images.

Method Overview

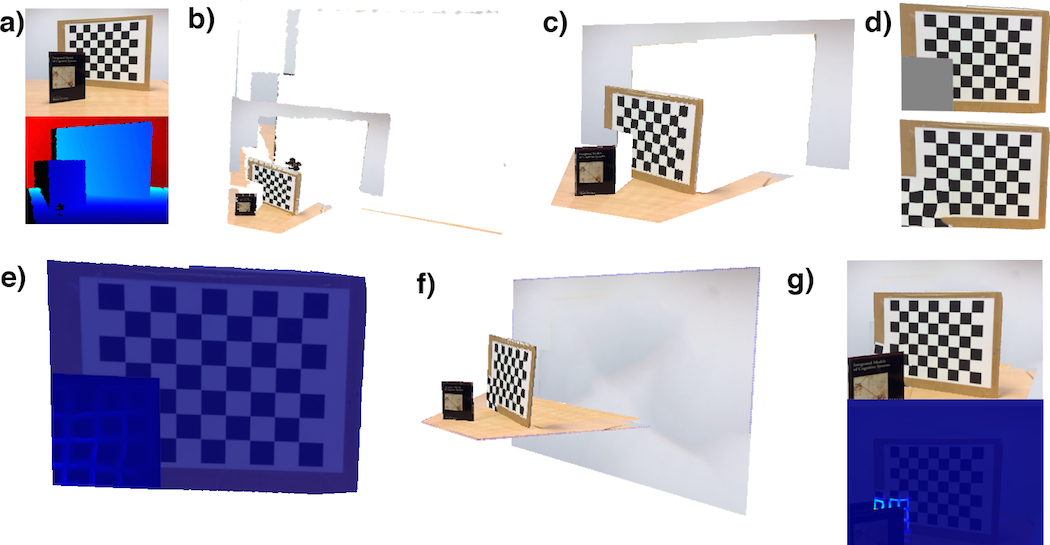

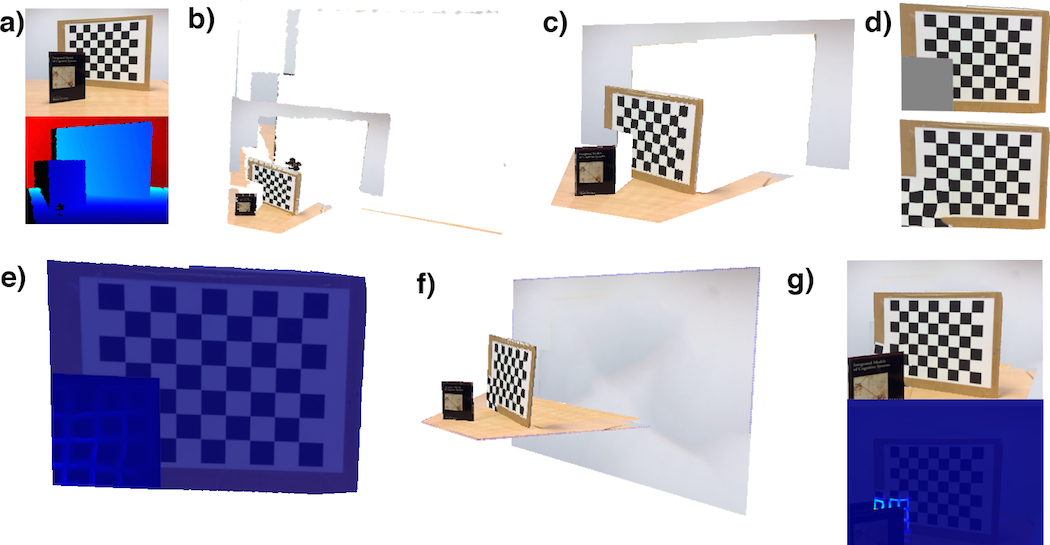

Figure 2: (a) Input RGB + depth image; (b) incomplete point cloud with noisy data (note the misalignment of RGB and depth); (c) segmentation, primitive fitting, and depth completion; (d) occlusions identified and infilled using the primitives (shown for one segment); (e) degradation model built for infilled pixels; (f) decomposed and completed layered scene; and (g) new view synthesized from a user-defined camera pose, flagged by the degradation model as undesirable.
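The primitive fitting and depth completion of step (c) can be pictured with a minimal sketch, assuming the primitive is a single plane `z = a*x + b*y + c` fitted by ordinary least squares to the valid depth samples of one segment. This page does not state which primitive types or fitting procedure the paper uses, so `fit_plane`, `complete_depth`, and the plane parameterisation are hypothetical, and no robustness to noisy depth is attempted.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(samples):
    """Least-squares fit of z = a*x + b*y + c to (x, y, z) samples."""
    sxx = sxy = sx = syy = sy = sxz = syz = sz = 0.0
    for x, y, z in samples:
        sxx += x * x
        sxy += x * y
        sx += x
        syy += y * y
        sy += y
        sxz += x * z
        syz += y * z
        sz += z
    # Normal equations M @ [a, b, c] = rhs, solved with Cramer's rule.
    m = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(samples))]]
    rhs = [sxz, syz, sz]
    det = det3(m)
    if abs(det) < 1e-12:
        raise ValueError("samples are degenerate (e.g. collinear)")
    coeffs = []
    for col in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][col] = rhs[r]
        coeffs.append(det3(replaced) / det)
    return tuple(coeffs)


def complete_depth(depth, plane):
    """Fill missing depth values (None) from the fitted plane."""
    a, b, c = plane
    return [[a * x + b * y + c if z is None else z
             for x, z in enumerate(row)]
            for y, row in enumerate(depth)]


# A small depth segment with missing measurements marked as None.
segment = [[2.0, 2.5, None],
           [3.0, None, 4.0],
           [None, 4.5, 5.0]]
valid = [(x, y, z) for y, row in enumerate(segment)
         for x, z in enumerate(row) if z is not None]
plane = fit_plane(valid)
filled = complete_depth(segment, plane)
```

Measured depths are kept as they are and only the gaps are filled from the plane. With real sensor data, a robust estimator would be a more sensible choice than plain least squares.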

Results

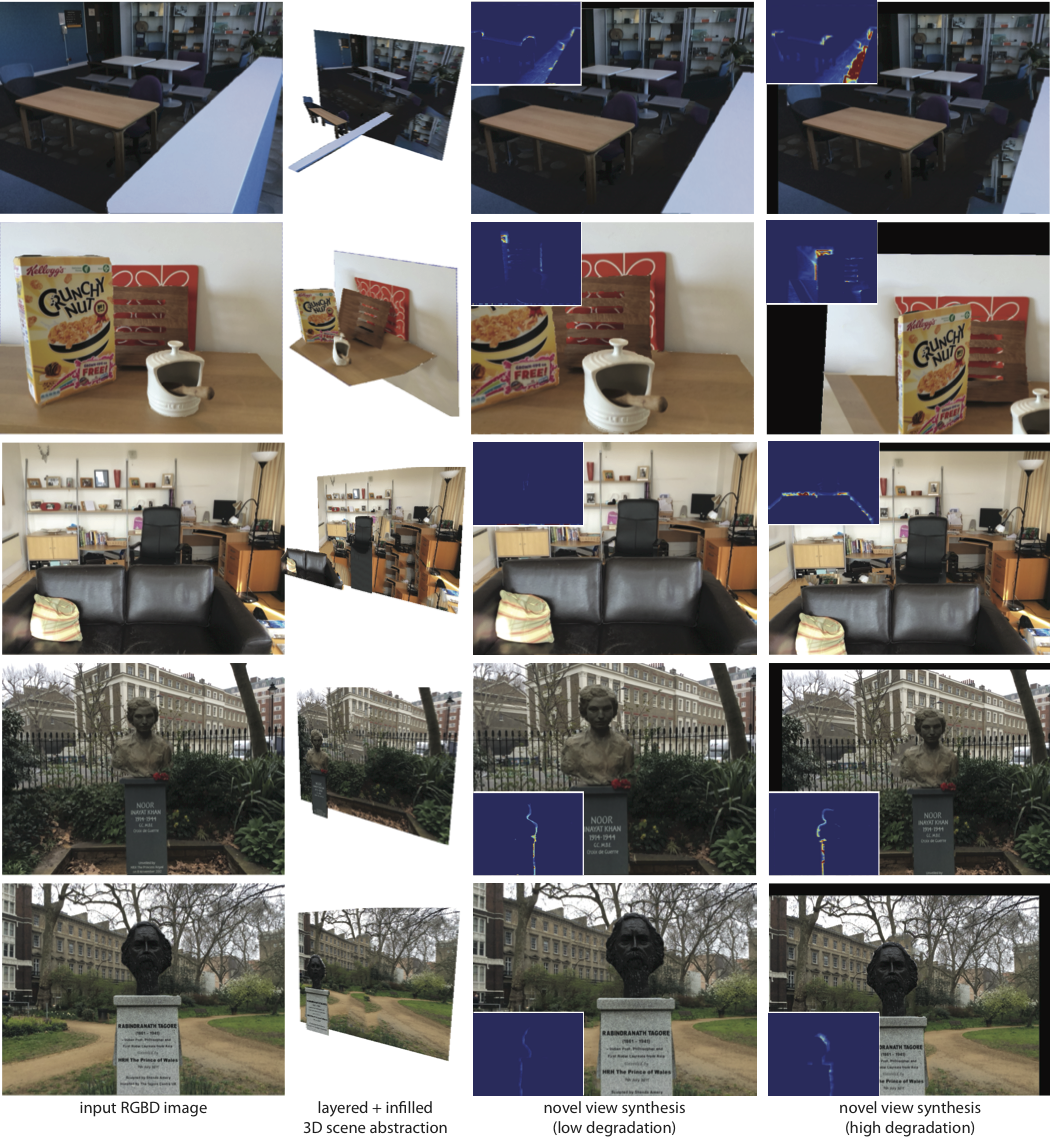

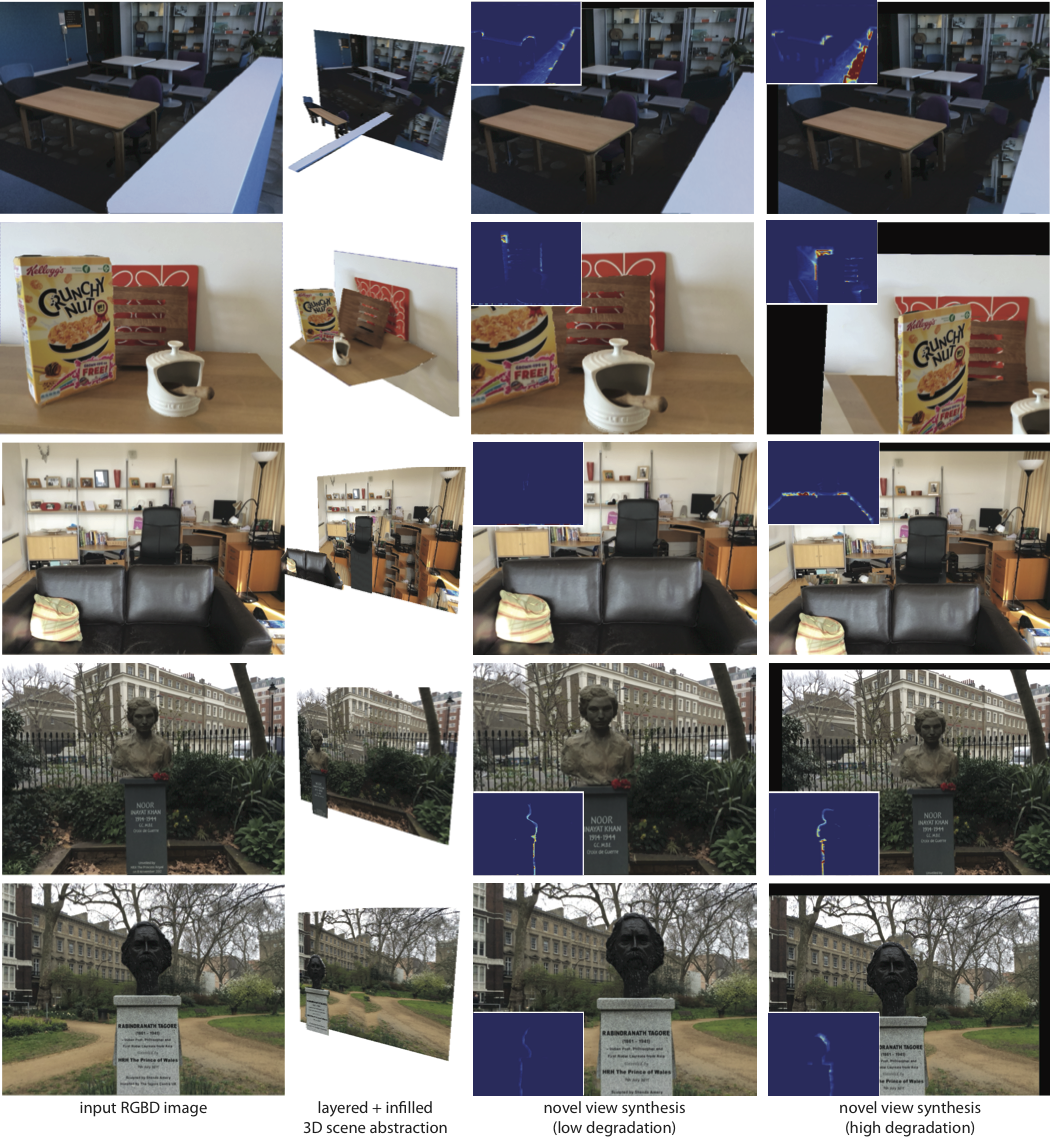

Figure 6: From top to bottom, scenes: office, kitchen, living room, park1, park2. In each row, we show the input RGB-D image, the abstracted layered scene, novel view synthesis with low degradation (inset showing degradation map), and novel view synthesis with high degradation (inset showing degradation map), respectively.

Bibtex

@article{Hennessey:2015,
  title = {An Image Degradation Model for Depth-augmented Image Editing},
  author = {James W. Hennessey and Niloy J. Mitra},
  year = {2015},
  journal = {{Symposium on Geometry Processing 2015}}
}

Acknowledgements

We thank the reviewers for their comments and suggestions for improving the paper. We would like to thank Moos Hueting and Aron Monszpart for their invaluable comments, support, and discussions. This work was supported in part by ERC Starting Grant SmartGeometry (StG-2013-335373) and gifts from Adobe.

Links

Paper (22MB)

Paper Compressed (12MB)

Supplementary Video (ZIP 150.0MB)

Slides (16MB)