DepthCut: Improved Depth Edge Estimation Using Multiple Unreliable Channels

- Paul Guerrero¹

- Holger Winnemöller²

- Wilmot Li²

- Niloy J. Mitra¹

¹University College London, ²Adobe Research

CGI 2018

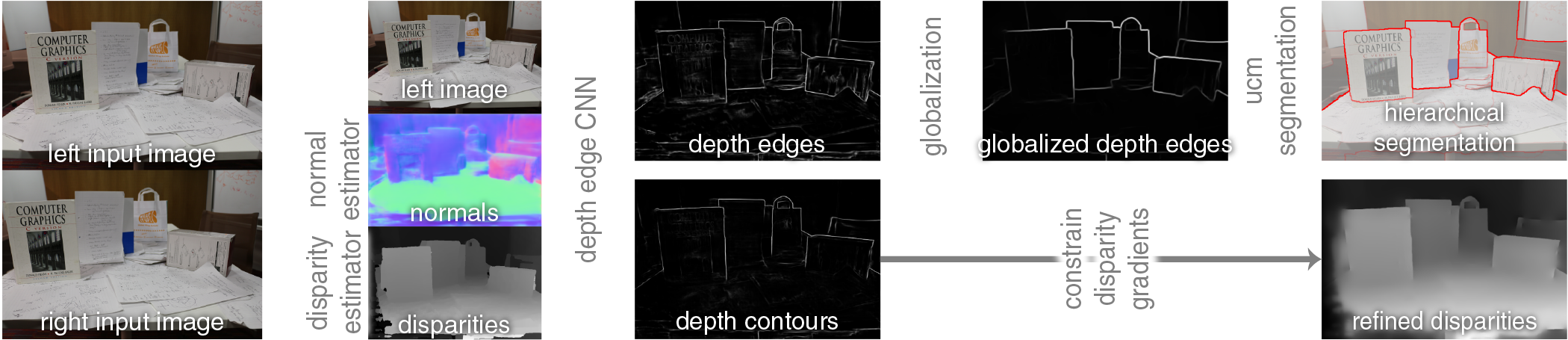

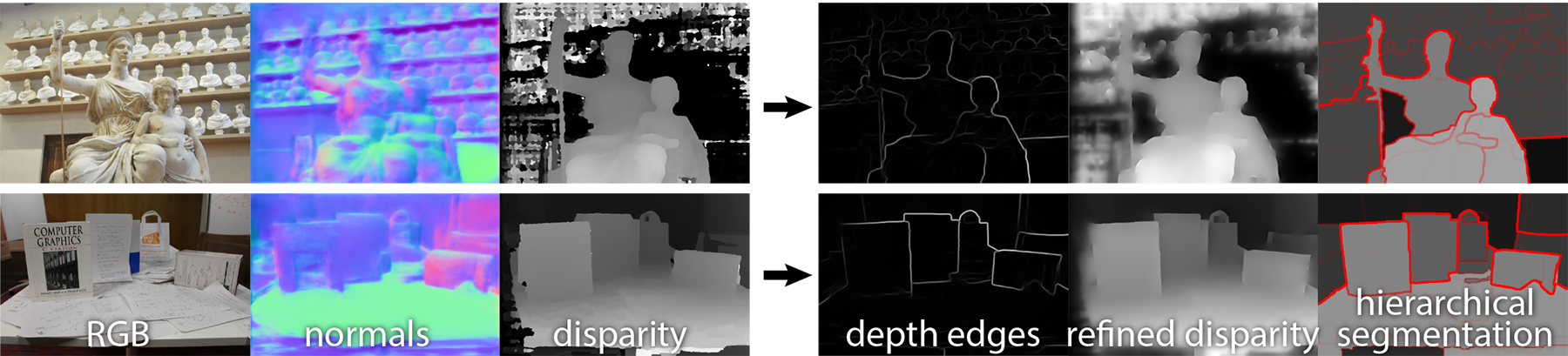

We present DepthCut, a method to estimate depth edges with improved accuracy from unreliable input channels, namely RGB images, normal estimates, and disparity estimates. Starting from a single image or a pair of images, our method produces depth edges, consisting of depth contours and creases, that separate regions of smoothly varying depth. Complementary information from the unreliable input channels is fused using a neural network trained on a dataset with known depth. The resulting depth edges can be used to refine a disparity estimate or to infer a hierarchical image segmentation.
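The caption describes depth edges as the union of depth contours (depth discontinuities) and creases (surface kinks), and the network is trained on data with known depth. One plausible way to derive such labels from a known depth map is sketched below in Python with NumPy. The function names, the first/second-derivative criteria, and the thresholds are our assumptions for illustration, not the paper's labeling procedure.

```python
import numpy as np


def dilate3x3(mask):
    """Binary dilation with a 3x3 square, using padded shifts (no SciPy needed)."""
    h, w = mask.shape
    padded = np.pad(mask, 1, mode="constant", constant_values=False)
    out = np.zeros_like(mask)
    for oy in range(3):
        for ox in range(3):
            out |= padded[oy:oy + h, ox:ox + w]
    return out


def depth_edges_from_depth(depth, contour_thresh, crease_thresh):
    """Label depth contours and creases in a known depth map.

    Hypothetical criteria (not the paper's labeling procedure):
      contours: large first derivative of depth (depth discontinuities)
      creases:  large second derivative where depth is continuous (surface kinks)
    Returns two boolean H x W arrays: (contours, creases).
    """
    depth = np.asarray(depth, dtype=float)
    dy, dx = np.gradient(depth)          # derivatives along rows (y) and columns (x)
    contours = np.hypot(dx, dy) > contour_thresh
    dyy, _ = np.gradient(dy)             # second derivatives from a second pass
    _, dxx = np.gradient(dx)
    curvature = np.abs(dxx + dyy)        # magnitude of a wide-stencil discrete Laplacian
    # A depth jump also produces large second derivatives on the pixels right
    # next to it, so exclude a one-pixel band around each contour.
    creases = (curvature > crease_thresh) & ~dilate3x3(contours)
    return contours, creases
```

On a step in depth this marks the two pixel columns straddling the jump as contours and reports no creases; on a V-shaped kink with small slope it marks only the kink column as a crease.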

Abstract

In the context of scene understanding, a variety of methods exist to estimate different information channels from mono or stereo images, including disparity, depth, and normals. Although several advances have been reported for these tasks in recent years, the estimated information is often imprecise, particularly near depth discontinuities or creases. However, studies have shown that precisely such depth edges carry critical cues for the perception of shape and play important roles in tasks like depth-based segmentation or foreground selection. Unfortunately, the currently extracted channels often carry conflicting signals, making it difficult for subsequent applications to use them effectively. In this paper, we focus on the problem of obtaining high-precision depth edges (i.e., depth contours and creases) by jointly analyzing such unreliable information channels. We propose DepthCut, a data-driven fusion of the channels using a convolutional neural network trained on a large dataset with known depth. The resulting depth edges can be used for segmentation, decomposing a scene into depth layers with relatively flat depth, or for improving the accuracy of the depth estimate near depth edges by constraining its gradients to agree with these edges. Quantitatively, we compare against 15 baseline variants and demonstrate that, compared to data-agnostic channel fusion, our depth edges improve segmentation performance and yield a more accurate depth estimate near depth edges. Qualitatively, we demonstrate that the depth edges result in superior segmentations and depth orderings.
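The abstract describes a data-driven fusion of the channels with a CNN. As a much-simplified stand-in, the sketch below swaps the CNN for per-pixel logistic regression: each channel contributes its gradient magnitude as a feature, and one weight per channel is fitted by gradient descent against ground-truth edge labels. The helper names and hyperparameters (`lr`, `steps`) are hypothetical; the sketch only illustrates that the fusion weights are learned from data rather than fixed by hand.

```python
import numpy as np


def gradient_features(channels):
    """Per-channel gradient magnitudes of an H x W x C stack, as an (H*W) x C matrix."""
    feats = []
    for k in range(channels.shape[-1]):
        gy, gx = np.gradient(channels[..., k].astype(float))
        feats.append(np.hypot(gx, gy).ravel())
    return np.stack(feats, axis=1)


def fit_fusion_weights(features, labels, lr=0.5, steps=500):
    """Fit one weight per channel plus a bias by logistic regression.

    Plain gradient descent on the mean binary cross-entropy between the
    predicted edge probability and the ground-truth edge labels.
    Returns (weights, bias, loss_history).
    """
    n, c = features.shape
    w = np.zeros(c)
    b = 0.0
    eps = 1e-12                       # guards log(0)
    losses = []
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        losses.append(-np.mean(labels * np.log(p + eps)
                               + (1.0 - labels) * np.log(1.0 - p + eps)))
        residual = p - labels         # gradient of the BCE w.r.t. the logits
        w -= lr * (features.T @ residual) / n
        b -= lr * residual.mean()
    return w, b, losses


def fuse(channels, w, b):
    """Apply the fitted weights to get an H x W edge-probability map."""
    h, wd = channels.shape[:2]
    logits = gradient_features(channels) @ w + b
    return (1.0 / (1.0 + np.exp(-logits))).reshape(h, wd)
```

On a toy scene where the color channel contains texture edges that are absent from the depth labels, the fitted weight for the disparity channel ends up larger than the one for color. A real CNN additionally sees spatial context, which a per-pixel linear model cannot.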

Motivation

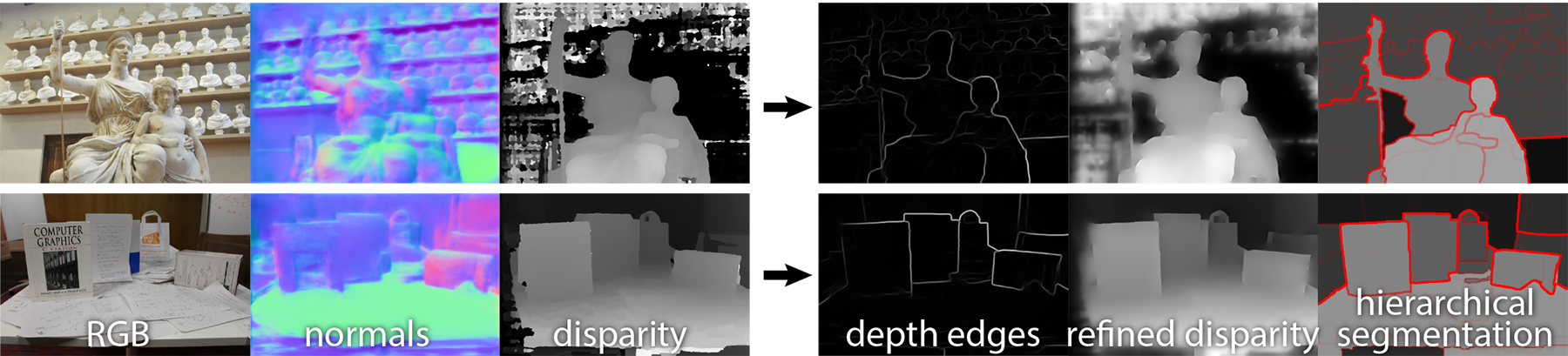

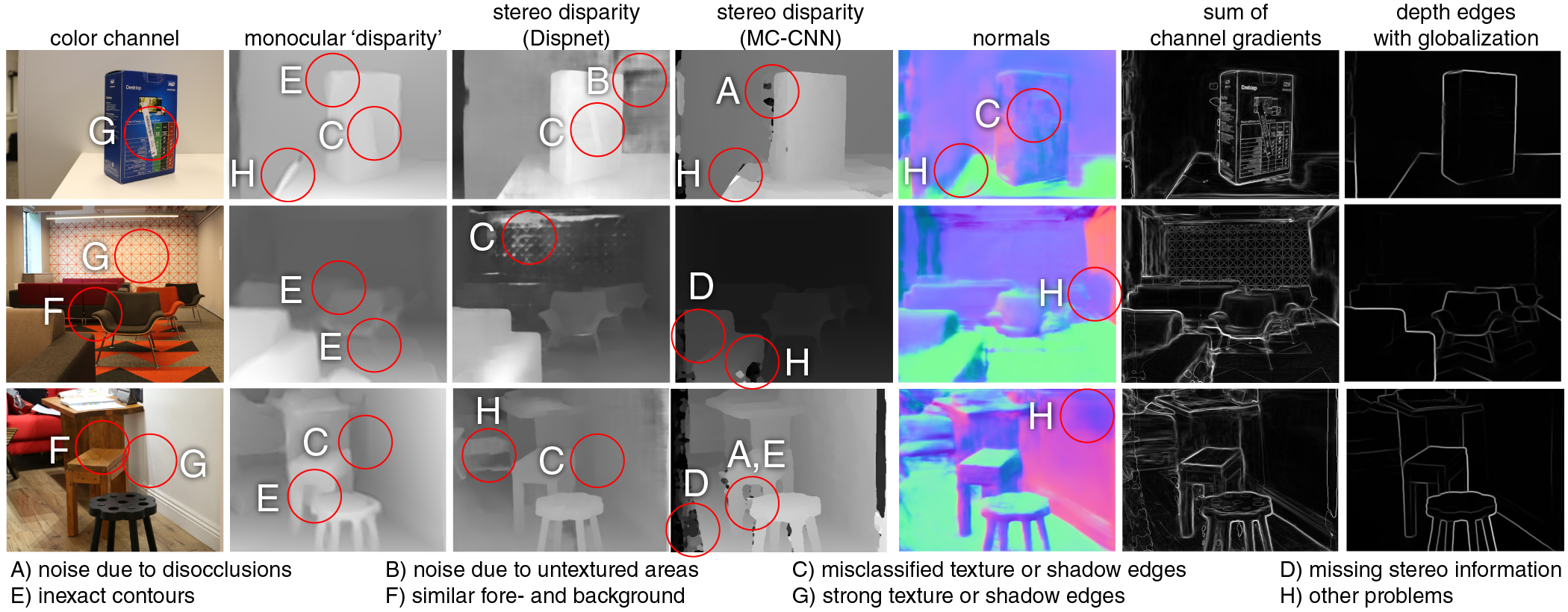

Our input channels contain various sources of noise and errors: areas of disocclusion, large untextured areas where stereo matching is difficult, and shadow edges that were incorrectly classified during creation of the channels. The color channel may also contain strong texture or shadow edges that have to be filtered out. The gradients of these channels generally do not align well, as shown in the second column from the right. We train DepthCut to learn how to combine the color, disparity, and normal channels into a cleaner set of depth edges, shown in the last column after a globalization step.
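For contrast with the learned combination, a data-agnostic fusion merges the channels by a fixed rule. A minimal sketch, assuming equal weighting of per-channel gradient magnitudes that are each normalized to [0, 1]; this construction is our choice, and the baselines evaluated in the paper may be built differently.

```python
import numpy as np


def data_agnostic_fusion(channels):
    """Equal-weight average of per-channel gradient magnitudes.

    Each channel's gradient magnitude is divided by its own maximum so that
    channels with different units (color, normals, disparity) are comparable.
    Returns an H x W map in [0, 1].
    """
    h, w, c = channels.shape
    fused = np.zeros((h, w))
    for k in range(c):
        gy, gx = np.gradient(channels[..., k].astype(float))
        mag = np.hypot(gx, gy)
        peak = mag.max()
        if peak > 0:                  # a constant channel contributes nothing
            fused += mag / peak
    return fused / c
```

Because every channel gets the same weight, a texture edge that appears only in the color channel still survives at half strength in a two-channel fusion, which is the kind of misaligned signal the learned fusion is meant to suppress.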

Overview

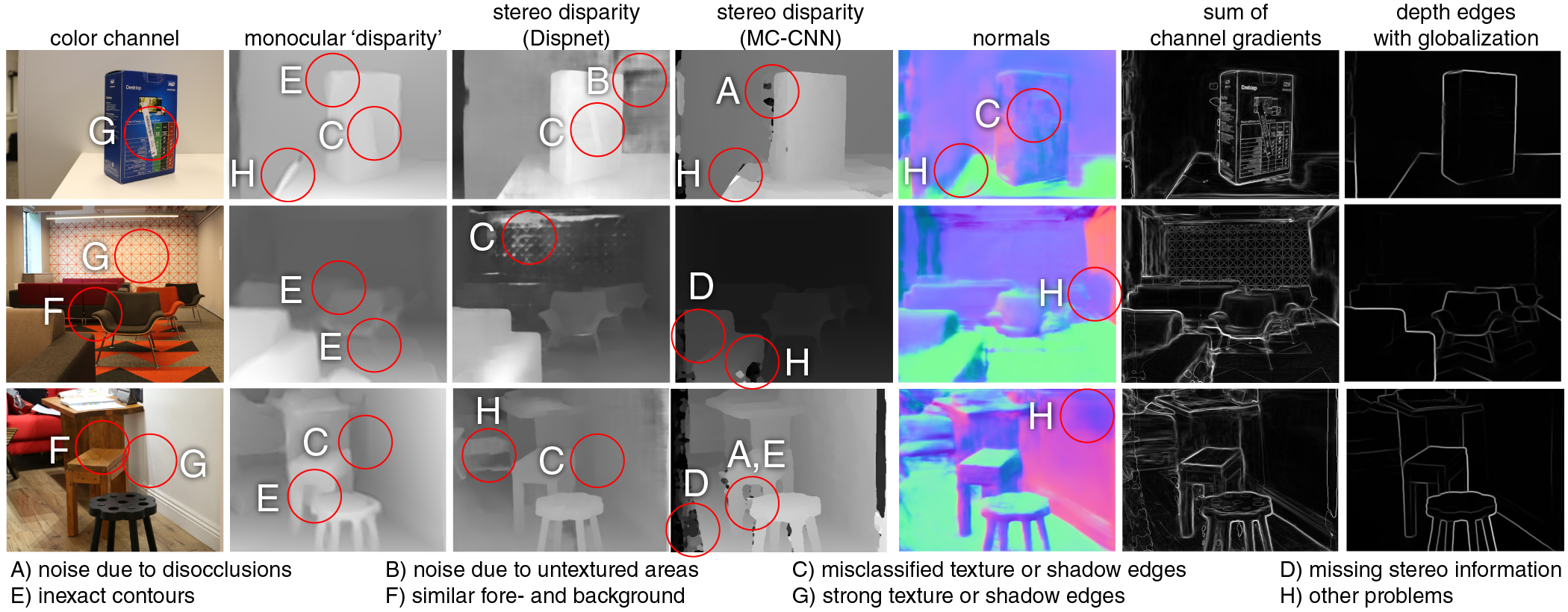

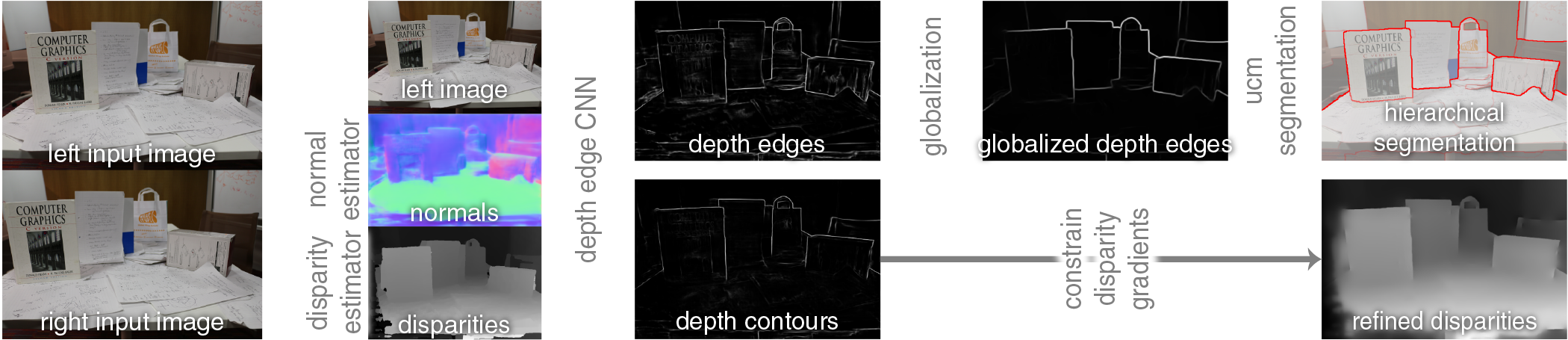

Overview of our method and two applications. Starting from a stereo image pair, or a single image for monocular disparity estimation, we estimate our three input channels using any existing method for normal or disparity estimation. These channels are combined in a data-driven fusion by our CNN to obtain a set of depth edges, which are then used in two applications: segmentation and refinement of the estimated disparity (for the latter, see the supplementary material). For segmentation, we perform a globalization step that keeps only the most consistent contours, followed by the construction of a hierarchical segmentation using the gPb-ucm framework. For refinement, we use only depth contours (not creases) to constrain the disparity gradients.
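The refinement step constrains disparity gradients using depth contours; its exact formulation is in the supplementary material and is not reproduced here. As a hypothetical illustration, the sketch below refines a single scanline by edge-aware least squares: it penalizes deviation from the input disparity plus squared neighbor differences, with the smoothness term switched off wherever a depth contour separates two pixels. The weight `lam` and the 1D restriction are our assumptions; a 2D version would assemble the same normal equations with a sparse solver.

```python
import numpy as np


def refine_scanline(disparity, contour, lam=10.0):
    """Edge-aware least-squares smoothing of one disparity scanline.

    Minimizes  sum_i (d_i - disparity_i)^2 + lam * sum_i w_i (d_{i+1} - d_i)^2
    with w_i = 0 where a depth contour separates pixels i and i+1, else w_i = 1.

    disparity: length-n array; contour: boolean array of length n - 1.
    """
    disparity = np.asarray(disparity, dtype=float)
    n = disparity.shape[0]
    w = np.where(np.asarray(contour, dtype=bool), 0.0, 1.0)
    # Forward-difference operator D, so (D @ d)[i] = d[i + 1] - d[i].
    D = np.zeros((n - 1, n))
    i = np.arange(n - 1)
    D[i, i] = -1.0
    D[i, i + 1] = 1.0
    # Setting the gradient of the objective to zero gives the normal equations
    # (I + lam * D^T W D) d = disparity.
    A = np.eye(n) + lam * D.T @ (w[:, None] * D)
    return np.linalg.solve(A, disparity)
```

Because no smoothness term couples pixels across a contour, each side is smoothed independently (its mean disparity is preserved exactly), and the jump at the contour is not blurred; running the same solver without the contour mask smears the jump.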

Results

Hierarchical segmentations based on depth edges. We compare segmenting the individual channels directly, performing a data-agnostic fusion, and using our data-driven fusion on either a subset of the input channels or all of them. Strongly textured regions in the color channel make finding a good segmentation difficult, while normal and disparity estimates are too unreliable to use on their own. Our data-driven fusion gives segmentations that correspond better to scene objects.
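These segmentations are built with a globalization step followed by the gPb-ucm framework, which we do not reimplement here. The sketch below only illustrates the hierarchical idea with a simpler stand-in: threshold a depth-edge map at increasing strengths and take 4-connected components of the non-edge pixels, so that raising the threshold drops weak edges and merges regions. The function names and thresholds are hypothetical.

```python
from collections import deque

import numpy as np


def label_regions(edge_map, threshold):
    """4-connected components of pixels whose edge strength is below threshold.

    Pixels at or above the threshold are treated as boundary and get label 0;
    regions get labels 1, 2, ...  Returns (labels, number_of_regions).
    """
    h, w = edge_map.shape
    labels = np.zeros((h, w), dtype=int)
    interior = edge_map < threshold
    next_label = 0
    for sy in range(h):
        for sx in range(w):
            if not interior[sy, sx] or labels[sy, sx]:
                continue
            next_label += 1                      # start a new region (BFS flood fill)
            labels[sy, sx] = next_label
            queue = deque([(sy, sx)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < h and 0 <= nx < w and interior[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = next_label
                        queue.append((ny, nx))
    return labels, next_label


def hierarchy(edge_map, thresholds):
    """Segmentations from fine to coarse: larger thresholds keep only stronger edges."""
    return [label_regions(edge_map, t) for t in sorted(thresholds)]
```

Since the set of non-boundary pixels only grows as the threshold rises, each coarser level is a union of regions from the finer one. This mirrors the nesting obtained by thresholding an ultrametric contour map, but without gPb's globalization.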

Bibtex

@article{GuerreroEtAl:DepthCut:CGI:2018,

title = {{DepthCut}: Improved Depth Edge Estimation Using Multiple Unreliable Channels},

author = {Paul Guerrero and Holger Winnem{\"o}ller and Wilmot Li and Niloy J. Mitra},

year = {2018},

journal = {The Visual Computer},

volume = {34},

number = {9},

pages = {1165--1176},

issn = {1432-2315},

doi = {10.1007/s00371-018-1551-5},

}

Acknowledgements

This work was supported by the ERC Starting Grant SmartGeometry (StG-2013-335373), the Open3D Project (EPSRC Grant EP/M013685/1), and gifts from Adobe.