Learning a Shared Shape Space for Multimodal Garment Design

- Tuanfeng Y. Wang1

- Duygu Ceylan2

- Jovan Popovic2

- Niloy J. Mitra1

1University College London 2Adobe Research

SIGGRAPH Asia 2018

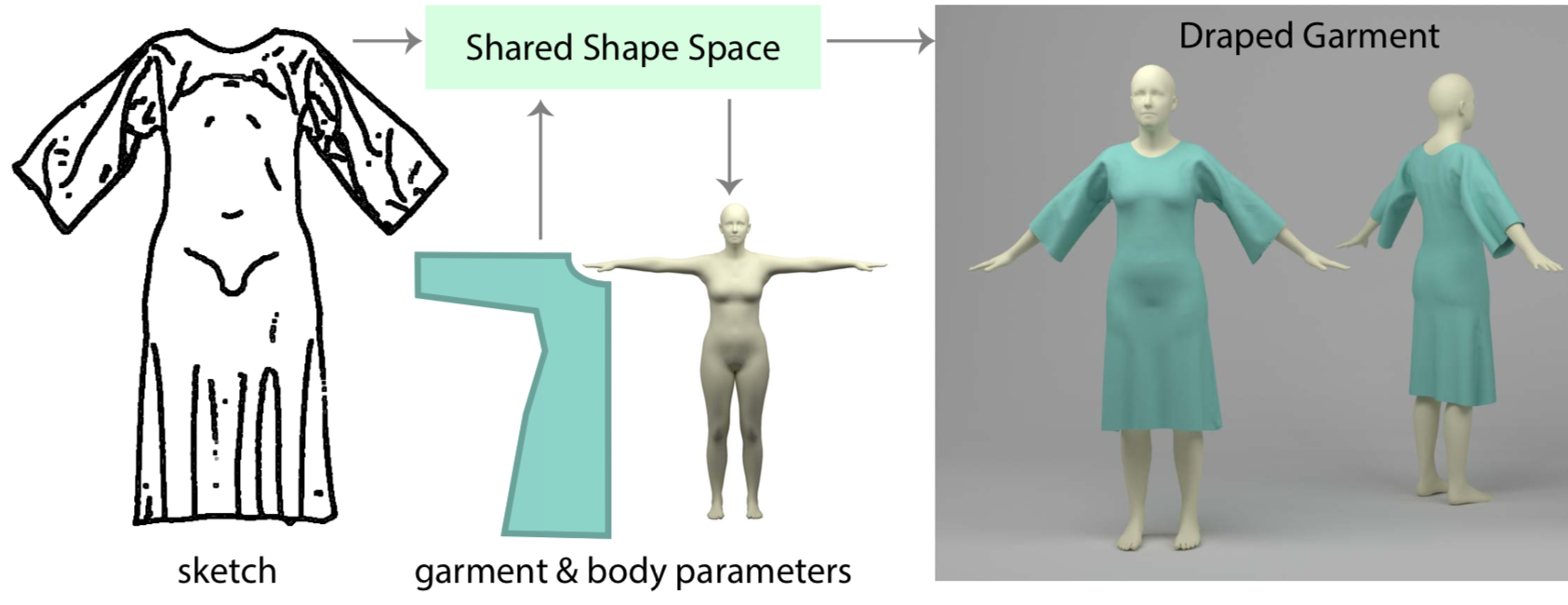

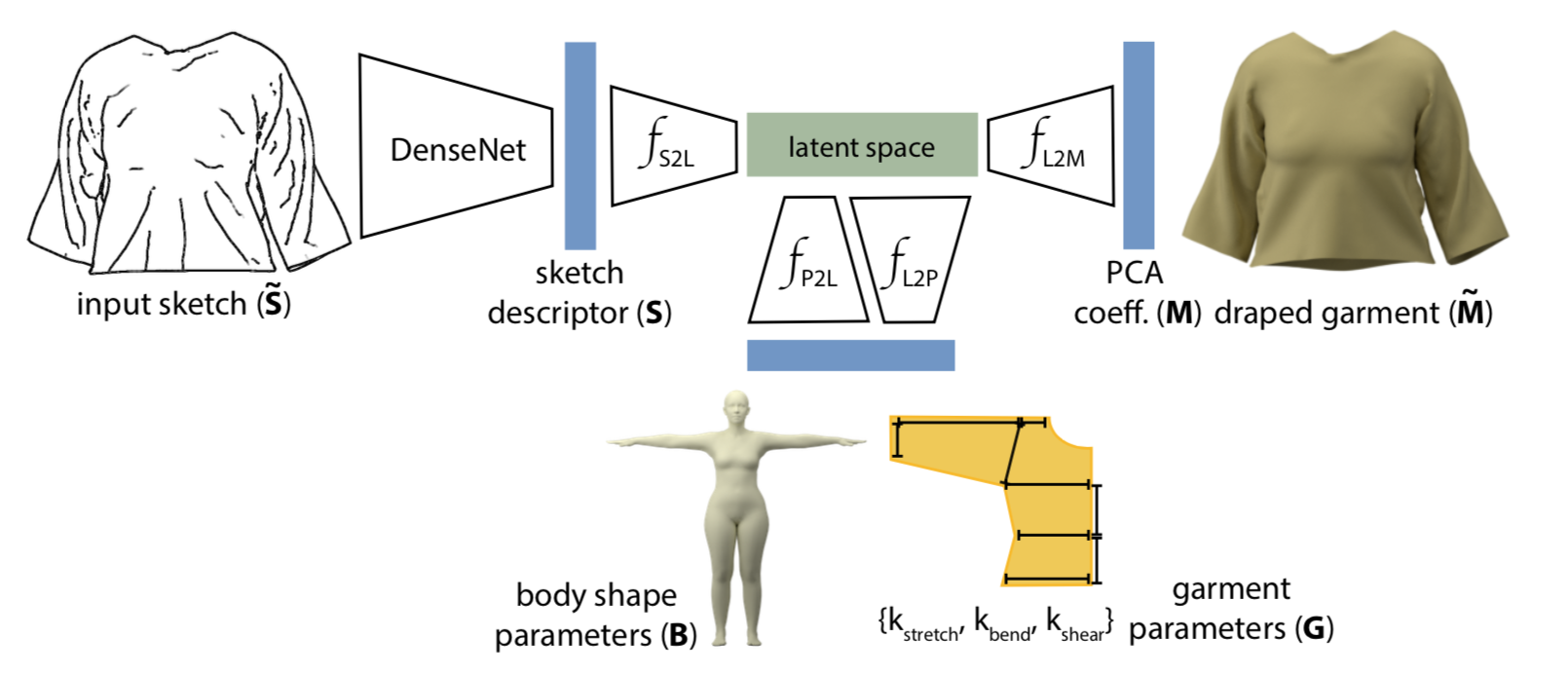

Garment design is complex because a design must satisfy multiple specifications, including target sketched fold patterns, 3D body shape, and 2D sewing patterns and/or textures. We learn a novel shared shape space spanning different input modalities that allows the designer to work seamlessly across these domains. This makes it possible to design and edit garments interactively without access to expensive physical simulation at design time, and to retarget the design to a range of body shapes.

Abstract

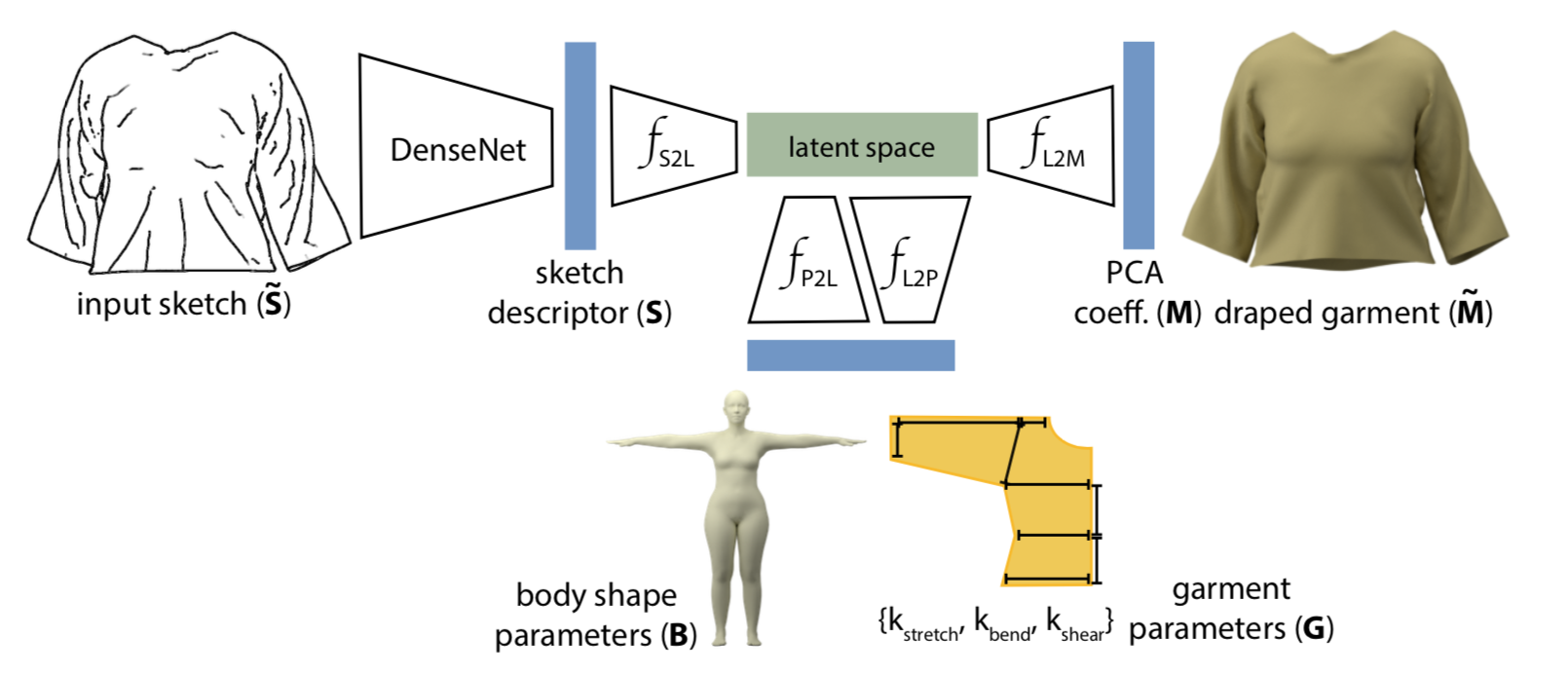

Designing real and virtual garments is becoming extremely demanding with rapidly changing fashion trends and an increasing need to synthesize realistically dressed digital humans for various applications. This necessitates simple and effective workflows for authoring sewing patterns customized to garment and target body shapes to achieve desired looks. The traditional workflow involves a trial-and-error procedure in which a mannequin is draped to judge the resultant folds and the sewing pattern is iteratively adjusted until the desired look is achieved. This requires time and experience. Instead, we present a data-driven approach in which the user directly indicates desired fold patterns simply by sketching, while our system estimates the corresponding garment and body shape parameters at interactive rates. The recovered parameters can then be further edited and the updated draped garment previewed. Technically, we achieve this via a novel shared shape space that allows the user to seamlessly specify desired characteristics across multimodal input without running garment simulation at design time. We evaluate our approach qualitatively via a user study and quantitatively against test datasets, and demonstrate how our system can generate a rich variety of high-quality on-body garments targeted for a range of body shapes while achieving desired fold characteristics.

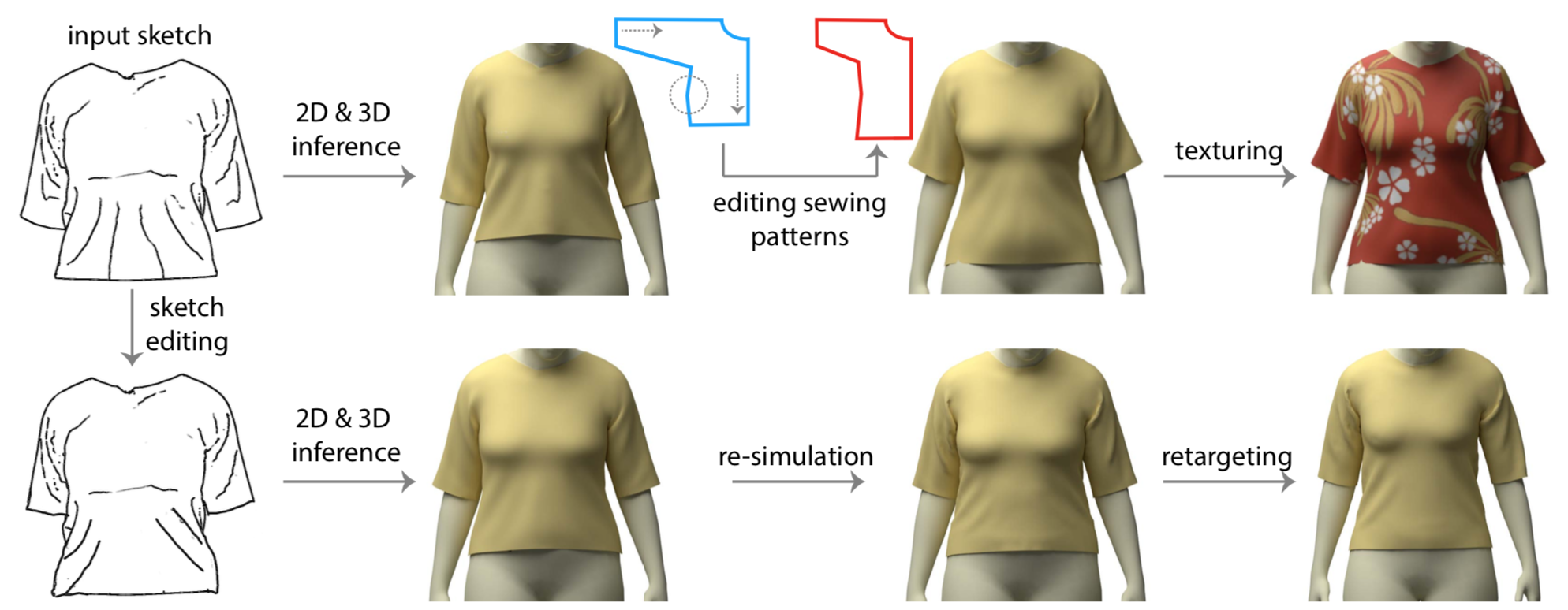

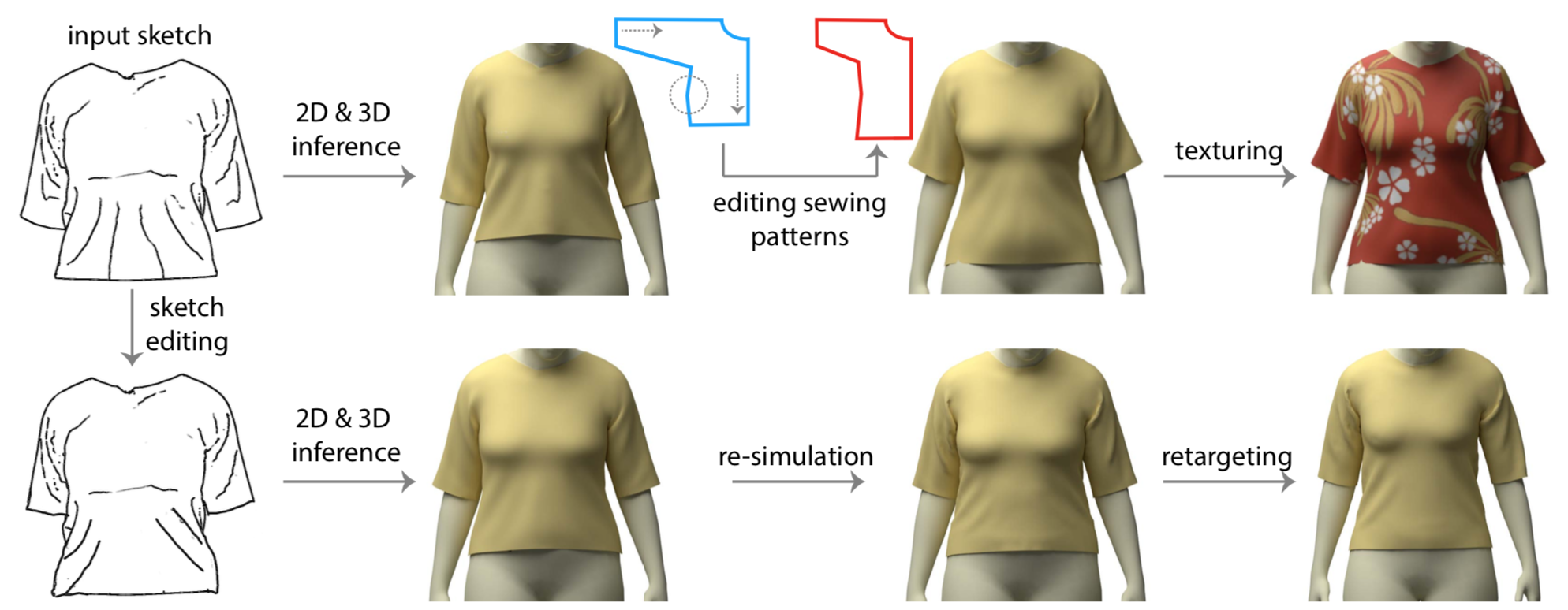

Given an input sketch, our network infers both the 2D garment sewing patterns (in blue) and the draped 3D garment mesh together with the underlying body shape. Edits in the 2D sewing patterns (e.g., shorter sleeves, longer shirt as shown in red) or the sketch (bottom row) are interactively mapped to updated 2D and 3D parameters. The 3D draped garment inferred by our network naturally comes with uv coordinates, and thus can be directly textured. The network predictions can be passed to a cloth simulator to generate the final garment geometry with fine details. Finally, the designed garment can be easily retargeted to different body shapes while preserving the original style (i.e., fold patterns, silhouette) of the design.

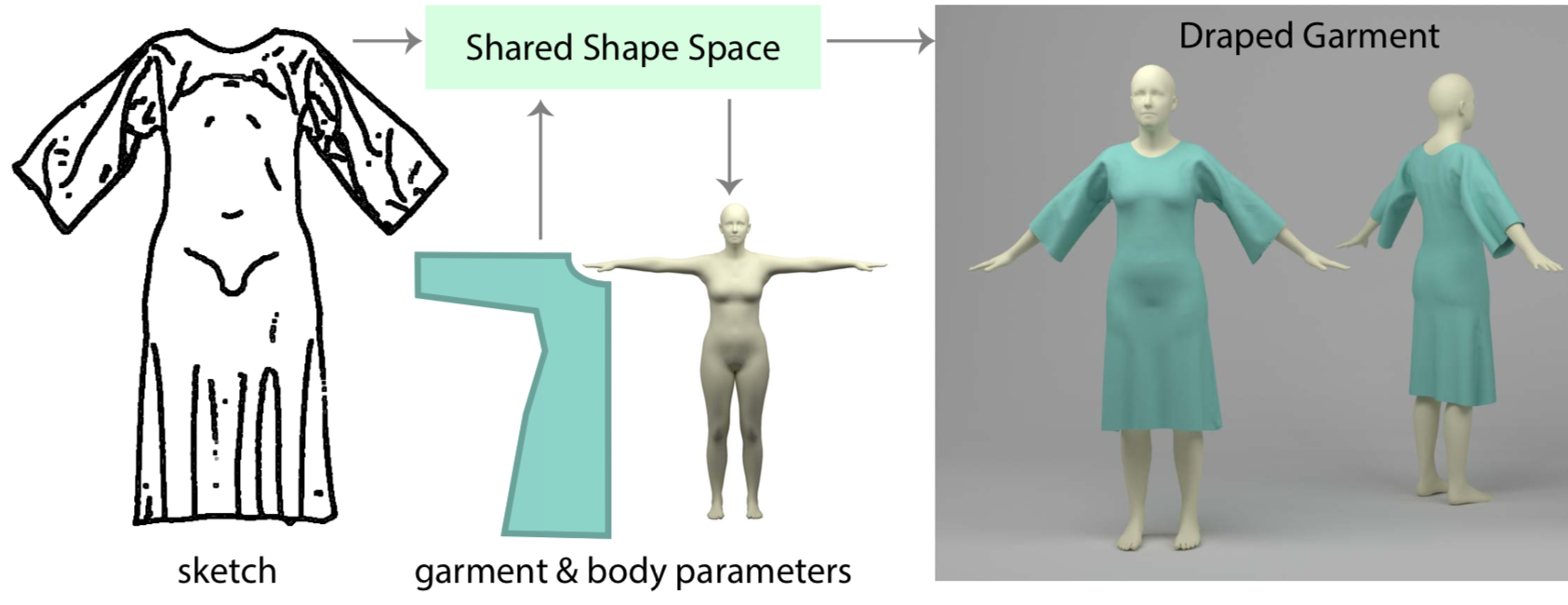

We learn a shared latent shape space between (i) 2D sketches, (ii) garment and body shape parameters, and (iii) draped garment shapes by jointly training multiple encoder-decoder networks.
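To make the idea of a shared latent space concrete, the following is a minimal sketch, not the architecture used in the paper. It assumes each modality has one encoder into a common latent vector and one decoder out of it, and it uses random linear maps as stand-ins for the networks. The names `DIMS`, `LATENT_DIM`, `translate`, and `joint_loss`, as well as all feature sizes and the squared-error loss, are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

LATENT_DIM = 4  # hypothetical size of the shared latent code
# Hypothetical feature sizes for the three modalities named in the caption.
DIMS = {"sketch": 16, "params": 6, "garment": 12}

# One linear encoder and one linear decoder per modality.
# These are stand-ins for trained networks, not the paper's models.
encoders = {m: rng.normal(size=(LATENT_DIM, d)) for m, d in DIMS.items()}
decoders = {m: rng.normal(size=(d, LATENT_DIM)) for m, d in DIMS.items()}


def translate(x, src, dst):
    """Encode a `src` feature vector into the shared space, then decode it as `dst`."""
    z = encoders[src] @ x
    return decoders[dst] @ z


def joint_loss(sample):
    """Sum squared-error reconstruction losses over every (source, target) pair.

    Training all pairs jointly is what would push the three encoders to agree
    on one latent code for the same design (an assumed objective).
    """
    total = 0.0
    for src in DIMS:
        for dst in DIMS:
            pred = translate(sample[src], src, dst)
            total += float(np.mean((pred - sample[dst]) ** 2))
    return total


# One synthetic training sample with a feature vector per modality.
sample = {m: rng.normal(size=d) for m, d in DIMS.items()}
garment_from_sketch = translate(sample["sketch"], "sketch", "garment")
loss_value = joint_loss(sample)
```

In this sketch, a sketch-to-garment prediction and a parameters-to-garment prediction pass through the same latent vector type, which is the property that lets edits in one modality be mapped to the others.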

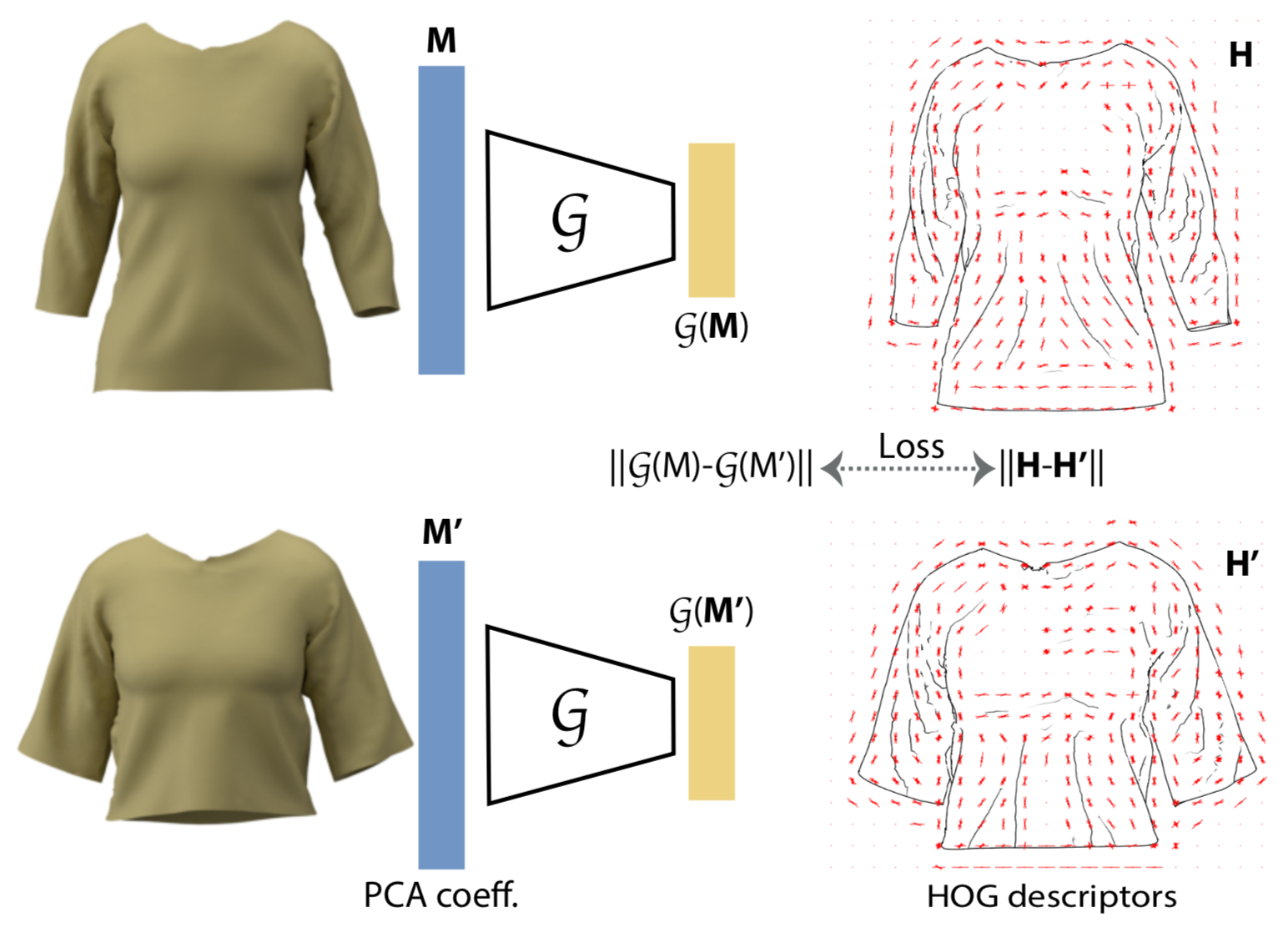

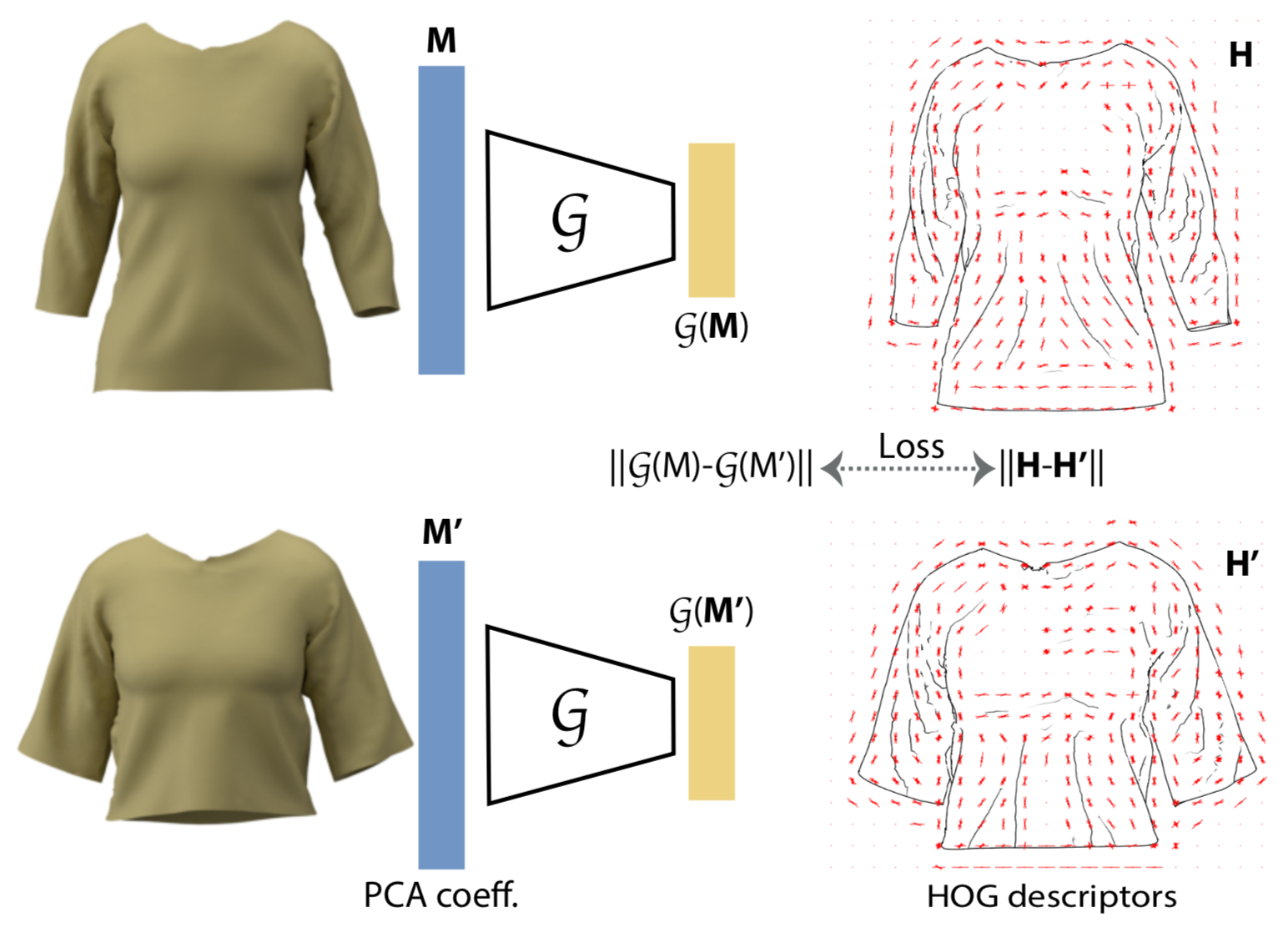

We train a Siamese network G that learns an embedding of draped garment shapes {M}. The distance between a pair of draped garments (M, M′) in this learned embedding space is similar to the distance between the HOG features of the corresponding sketches. Once trained, the loss function can be differentiated and used for retargeting optimization.
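One way to express the distance-matching objective described above is sketched below. The caption only states that embedding distances should be similar to HOG-feature distances; the squared-difference form of the loss, the linear map standing in for G, and the names `embed` and `distance_matching_loss` are assumptions made for illustration.

```python
import numpy as np


def embed(W, mesh_vertices):
    """Hypothetical stand-in for G: flatten the vertices and apply a linear map W."""
    return W @ np.asarray(mesh_vertices, dtype=float).reshape(-1)


def distance_matching_loss(W, mesh_a, mesh_b, hog_a, hog_b):
    """Penalize mismatch between embedding distance and sketch HOG distance.

    Both branches share the same weights W, which is what makes the setup Siamese.
    The squared difference is an assumed choice of penalty.
    """
    d_embed = np.linalg.norm(embed(W, mesh_a) - embed(W, mesh_b))
    d_hog = np.linalg.norm(np.asarray(hog_a, dtype=float) - np.asarray(hog_b, dtype=float))
    return float((d_embed - d_hog) ** 2)


# Toy data: two "meshes" with two vertices each, and 2D "HOG" descriptors.
W = np.eye(6)
mesh_a = np.zeros((2, 3))
mesh_b = np.array([[3.0, 4.0, 0.0], [0.0, 0.0, 0.0]])  # embedding distance is 5

loss_matched = distance_matching_loss(W, mesh_a, mesh_b, [0.0, 0.0], [5.0, 0.0])
loss_mismatched = distance_matching_loss(W, mesh_a, mesh_b, [0.0, 0.0], [3.0, 0.0])
```

Because a loss of this kind is a smooth function of the mesh embedding, it can in principle be differentiated with respect to the garment parameters, which is consistent with its stated use in retargeting optimization.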

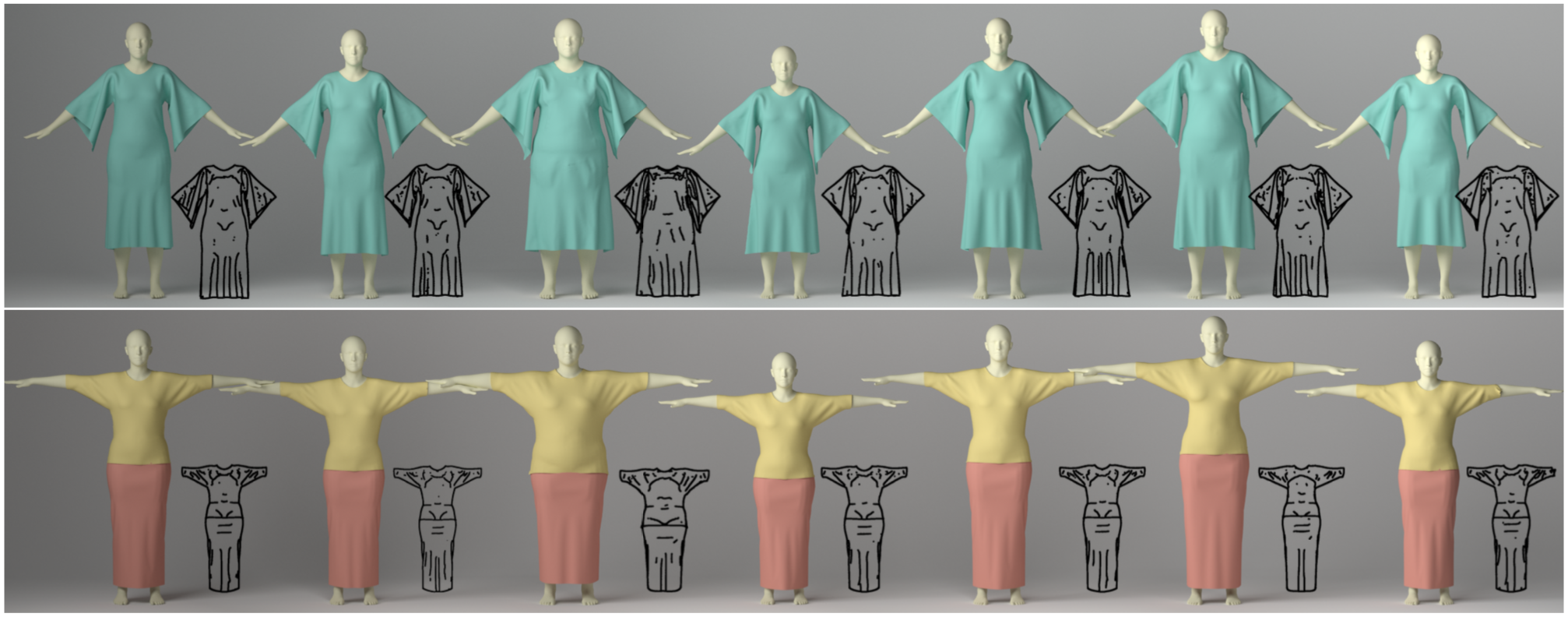

For each reference image, we ask users to draw the corresponding sketch that we provide as input to our method. Our method generates draped garments that closely resemble the reference images.

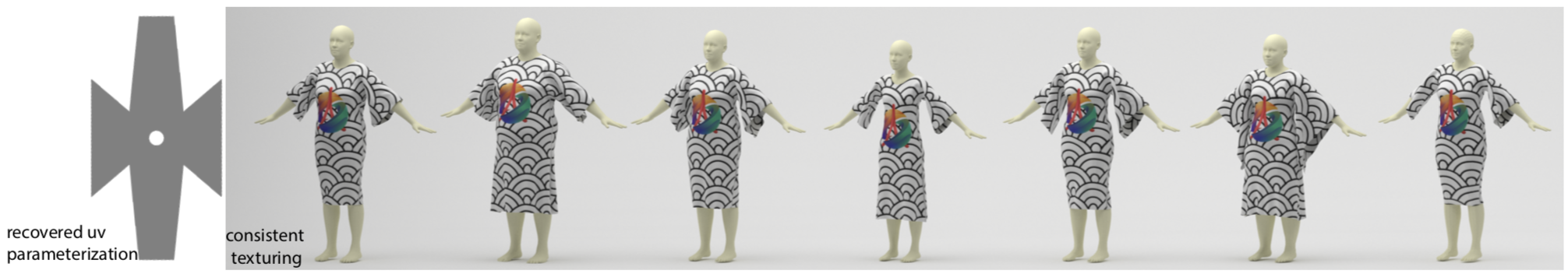

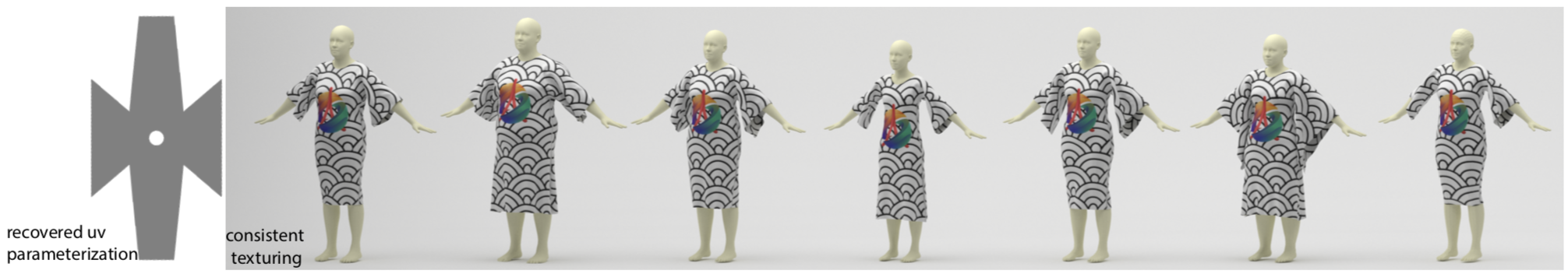

Our method generates consistent uv parameters across different instances of the same garment type. In this example, the alignment between the texture patterns can be best seen in the neck region.

Given two kimono designs shown on the left and the right, we interpolate between their corresponding descriptors in the shared latent space. For each interpolation sample, we show the 3D draped garment inferred by our network and the NPR rendering of the mesh simulated from the corresponding sewing parameters. Our shared latent space is continuous, resulting in smooth in-between results.
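The interpolation described in this caption can be sketched as follows, assuming a simple linear blend between the two latent descriptors (the caption does not specify the interpolation scheme). The function name `interpolate_latents` and the toy descriptors are hypothetical; in the real system each interpolated code would be passed to the trained decoders.

```python
import numpy as np


def interpolate_latents(z_start, z_end, num_samples):
    """Return `num_samples` latent codes blended linearly from z_start to z_end."""
    z_start = np.asarray(z_start, dtype=float)
    z_end = np.asarray(z_end, dtype=float)
    weights = np.linspace(0.0, 1.0, num_samples)
    # Row i is (1 - t_i) * z_start + t_i * z_end.
    return np.stack([(1.0 - t) * z_start + t * z_end for t in weights])


# Toy 2D descriptors standing in for the two kimono designs.
codes = interpolate_latents([0.0, 0.0], [2.0, 4.0], num_samples=3)
```

Each row of `codes` would then be decoded into a draped garment and sewing parameters; smooth in-between results rely on the latent space being continuous, as the caption notes.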

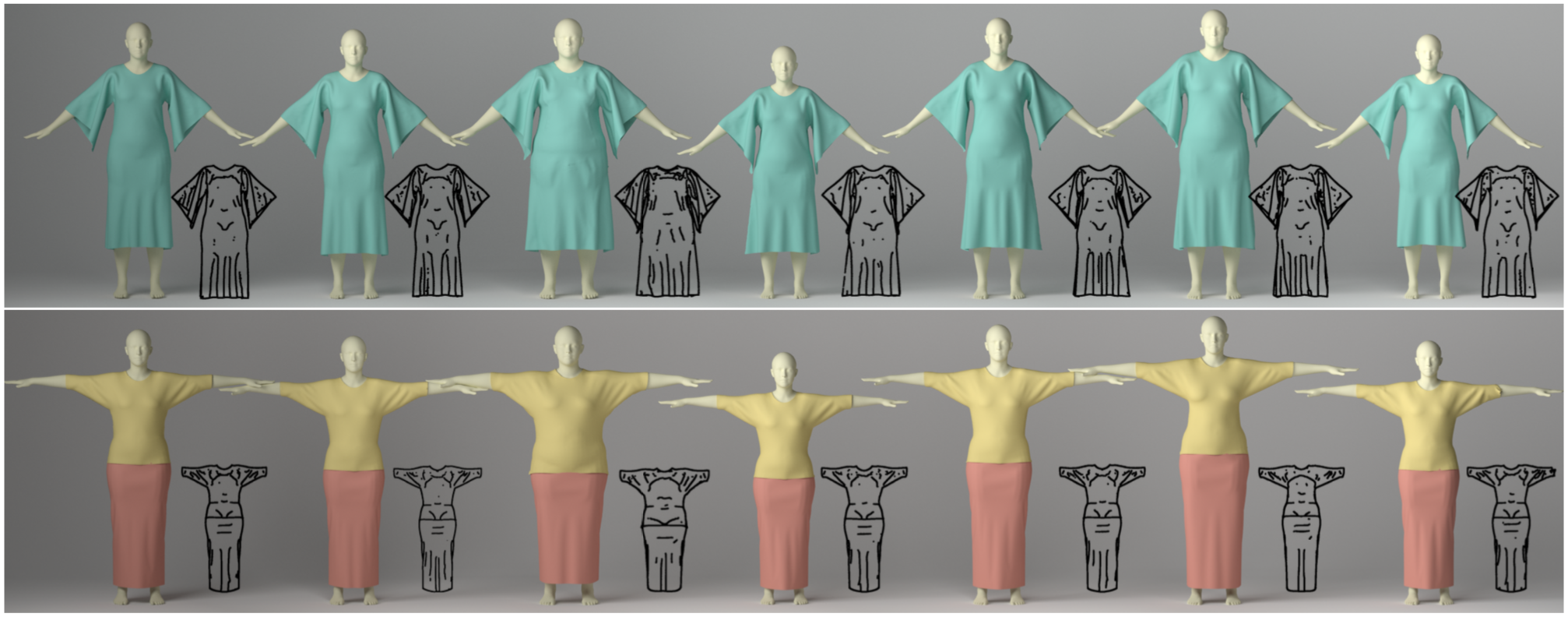

Given a reference garment style on the left, we retarget it to various body shapes. For each example, we also provide the NPR renderings of the draped garments.

Bibtex

@article{garmentdesign_Wang_SA18,
  author = {Tuanfeng Y. Wang and Duygu Ceylan and Jovan Popovic and Niloy J. Mitra},
  title = {Learning a Shared Shape Space for Multimodal Garment Design},
  year = {2018},
  journal = {ACM Trans. Graph.},
  volume = {37},
  number = {6},
  pages = {1:1--1:14},
  doi = {10.1145/3272127.3275074},
}

Acknowledgements

We thank the reviewers for their comments and suggestions for improving the paper. The authors would also like to thank Dr. Paul Guerrero for helping with the user study. This work is supported in part by a Microsoft PhD fellowship program, a Rabin Ezra Trust Scholarship, a Google Faculty Award, an ERC Starting Grant SmartGeometry (StG-2013-335373), and the UK Engineering and Physical Sciences Research Council (grant EP/K023578/1).