Joint Material and Illumination Estimation from Photo Sets in the Wild

- Tuanfeng Y. Wang

- Tobias Ritschel

- Niloy J. Mitra

University College London

3DV 2018

Selected for Oral presentation

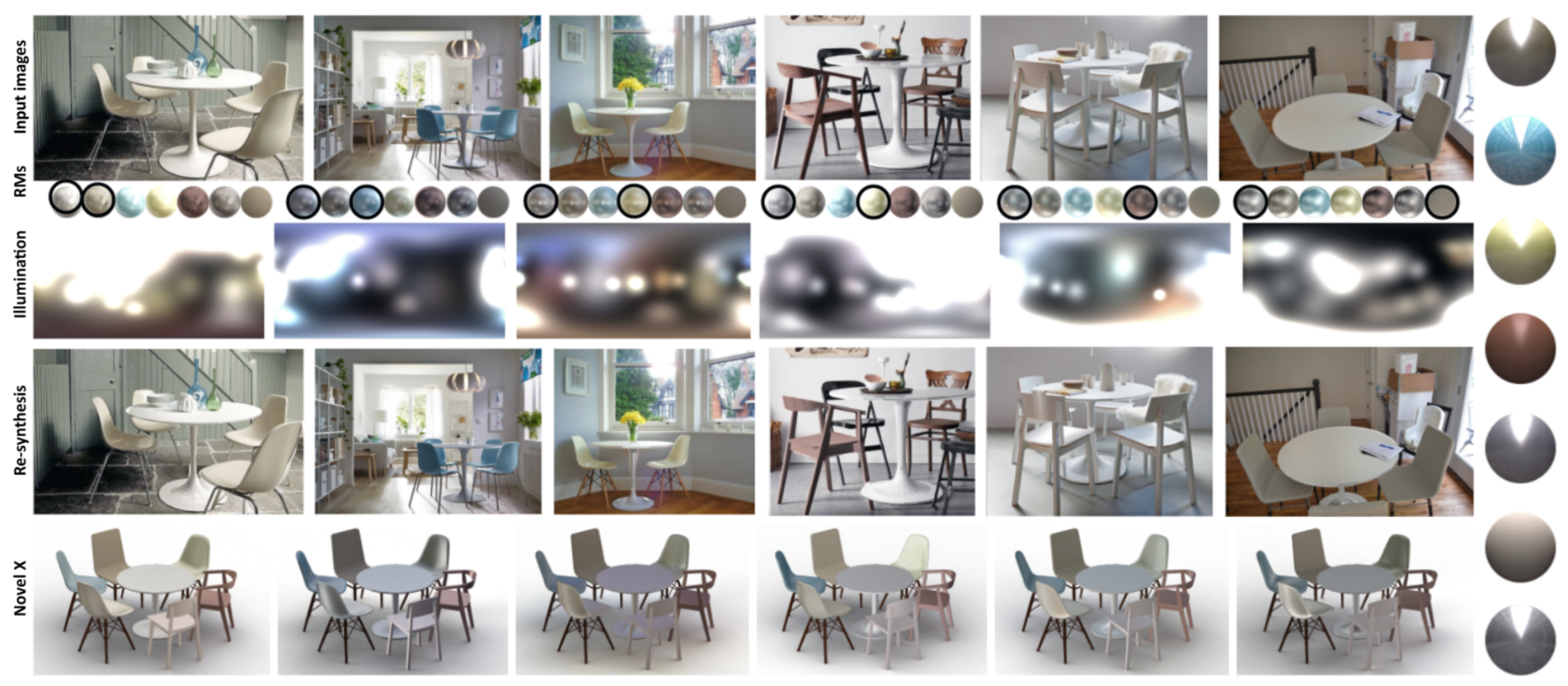

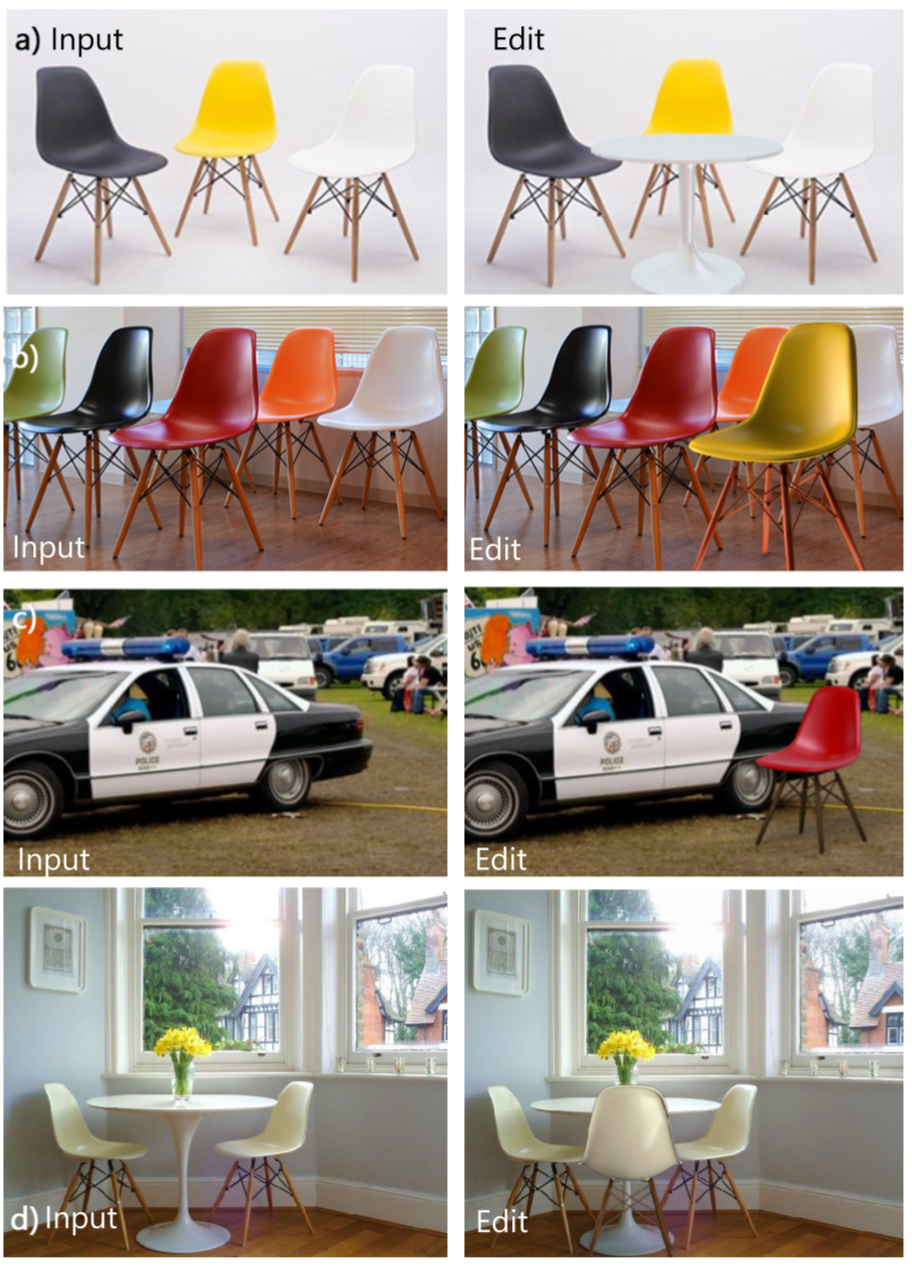

We factor a set of images (left) showing objects with different materials (red, yellow, black, and white plastic) under different illumination into per-image illumination and per-object material (top right). This factorization enables novel-x applications such as changing view, illumination, material, or mixed illumination/material, e.g., the red chair rendered in the environment of the bottom-left image (bottom right).

Abstract

Faithful manipulation of shape, material, and illumination in 2D Internet images would greatly benefit from a reliable factorization of appearance into material (i.e., diffuse and specular) and illumination (i.e., environment maps). In this work, we propose to make use of a set of photographs in order to jointly estimate the non-diffuse materials and sharp lighting in an uncontrolled setting. Our key observation is that seeing multiple instances of the same material under different illumination (i.e., environment), and different materials under the same illumination, provides valuable constraints that can be exploited to yield a high-quality solution (i.e., specular materials and environment illumination) for all the observed materials and environments. Technically, we enable this by a novel scalable formulation using parametric mixture models that allows for simultaneous estimation of all materials and illumination directly from a set of (uncontrolled) Internet images. At the core is an optimization that uses two neural networks trained on synthetic images to predict good gradients in parametric space given observations of reflected light. We evaluate our method on a range of synthetic and real examples, where it generates high-quality estimates, and qualitatively compare our results against state-of-the-art alternatives via a user study. Code and data are available on this website.

Overview

As input, we require a set of photographs of shared objects with their respective masks. In particular, we assume the material segmentation to be consistent across images. As output, our algorithm produces a parametric mixture model (PMM) representation of illumination (that can be converted into a common environment map image) for each photograph and the reflectance parameters for every segmented material. We proceed in three steps.
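The conversion of a mixture model into an environment map image can be sketched as follows. This is a minimal illustration, not the released code: it assumes each lobe is an isotropic spherical Gaussian of the form `amplitude * exp(sharpness * (dot(mean, omega) - 1))` and rasterises the mixture into a latitude-longitude grid. The function names, the lobe tuple layout, and the grid resolution are all hypothetical.

```python
import math

def direction(theta, phi):
    """Unit vector for polar angle theta (measured from +z) and azimuth phi."""
    s = math.sin(theta)
    return (s * math.cos(phi), s * math.sin(phi), math.cos(theta))

def eval_lobe(omega, lobe):
    """Assumed lobe form: amplitude * exp(sharpness * (dot(mean, omega) - 1))."""
    mean, sharpness, amplitude = lobe
    d = sum(m * w for m, w in zip(mean, omega))
    return amplitude * math.exp(sharpness * (d - 1.0))

def mixture_to_envmap(lobes, height=16, width=32):
    """Rasterise a lobe mixture into a lat-long (equirectangular) grid."""
    envmap = []
    for row in range(height):
        theta = math.pi * (row + 0.5) / height       # pixel-centre polar angle
        line = []
        for col in range(width):
            phi = 2.0 * math.pi * (col + 0.5) / width  # pixel-centre azimuth
            omega = direction(theta, phi)
            line.append(sum(eval_lobe(omega, lobe) for lobe in lobes))
        envmap.append(line)
    return envmap

# Hypothetical two-lobe illumination: a sharp light from +z, a broad one from +x.
lobes = [((0.0, 0.0, 1.0), 20.0, 1.0), ((1.0, 0.0, 0.0), 5.0, 0.5)]
envmap = mixture_to_envmap(lobes)
```

Each lobe is described by only a direction, a sharpness, and an amplitude, so the number of unknowns per photograph stays small compared to a per-pixel environment map.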

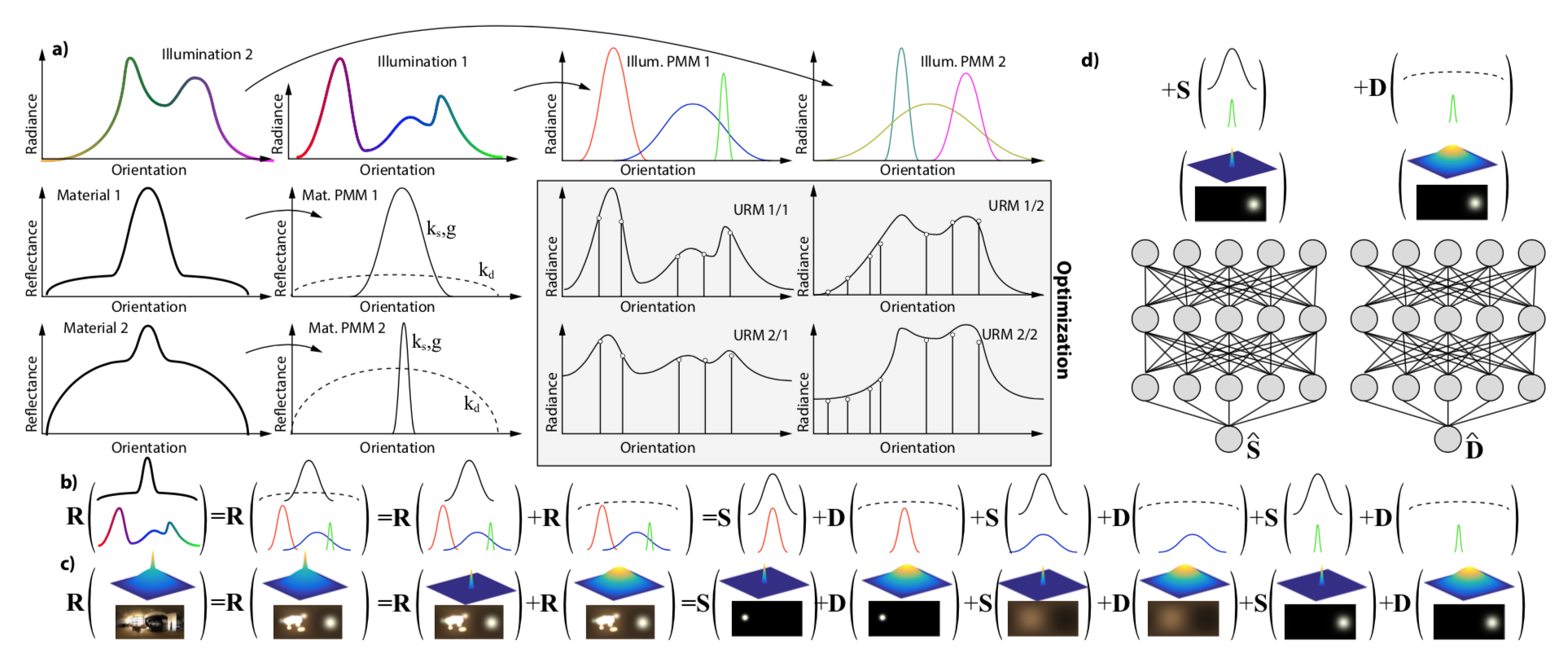

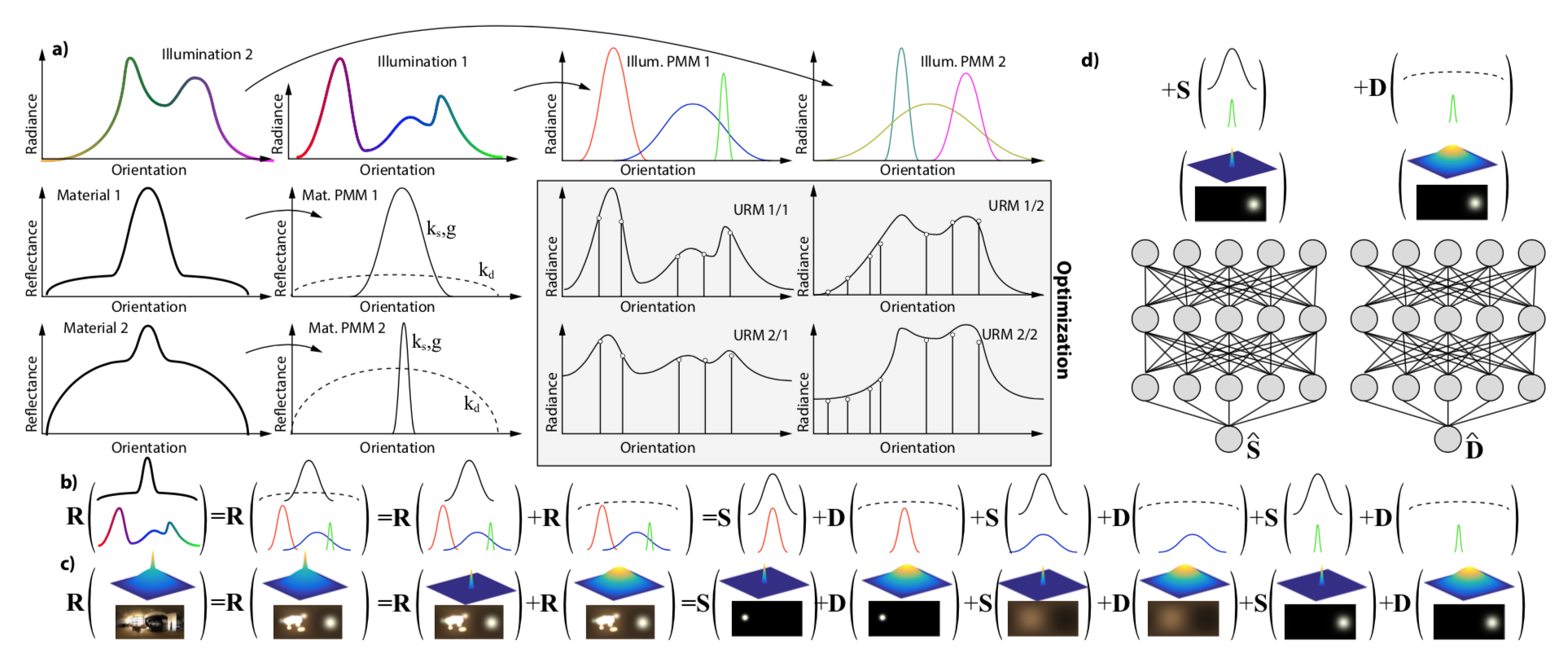

The three main ideas to enable large-scale optimization: (a) approximating illumination as parametric mixture models and the BRDF as a sum of a diffuse and a specular component; (b, c) expressing reflection as a sum of diffuse and specular reflections of individual lobes; and (d) approximating derivative of diffuse and specular reflection of ISGs using corresponding neural nets.
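Idea (b, c) rests on the linearity of reflection in the incoming light: reflecting a mixture gives the same result as summing the reflections of its individual lobes. The sketch below checks this numerically for the diffuse case only, using brute-force quadrature over the sphere in place of any learned approximation. The lobe form, the Lambertian model with unit albedo, and all names are assumptions made for illustration.

```python
import math

def sphere_quadrature(n_theta=48, n_phi=96):
    """Midpoint rule on the sphere: list of (unit direction, solid angle)."""
    samples = []
    d_theta = math.pi / n_theta
    d_phi = 2.0 * math.pi / n_phi
    for i in range(n_theta):
        theta = (i + 0.5) * d_theta
        sin_t, cos_t = math.sin(theta), math.cos(theta)
        for j in range(n_phi):
            phi = (j + 0.5) * d_phi
            omega = (sin_t * math.cos(phi), sin_t * math.sin(phi), cos_t)
            samples.append((omega, sin_t * d_theta * d_phi))
    return samples

SAMPLES = sphere_quadrature()

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def lobe_radiance(omega, lobe):
    """Assumed lobe form: amplitude * exp(sharpness * (dot(mean, omega) - 1))."""
    mean, sharpness, amplitude = lobe
    return amplitude * math.exp(sharpness * (dot(mean, omega) - 1.0))

def diffuse_reflection(normal, lobes, albedo=1.0):
    """Lambertian reflection of a lobe mixture, integrated numerically."""
    total = 0.0
    for omega, d_omega in SAMPLES:
        radiance = sum(lobe_radiance(omega, lobe) for lobe in lobes)
        total += radiance * max(0.0, dot(normal, omega)) * d_omega
    return albedo / math.pi * total

normal = (0.0, 0.0, 1.0)
lobes = [((0.0, 0.0, 1.0), 20.0, 1.0), ((0.6, 0.0, 0.8), 8.0, 0.5)]

joint = diffuse_reflection(normal, lobes)                    # whole mixture at once
per_lobe = sum(diffuse_reflection(normal, [lobe]) for lobe in lobes)  # lobe by lobe
```

Here `joint` and `per_lobe` agree up to floating-point rounding, which is why a function that handles a single lobe is enough to handle any mixture.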

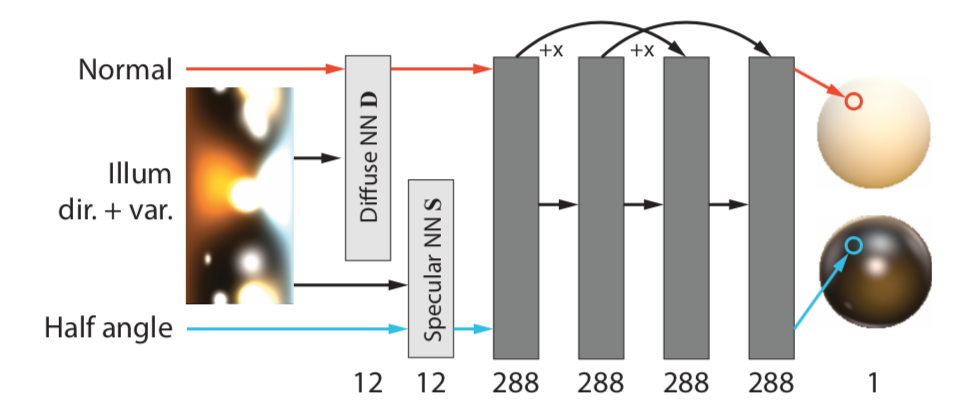

In order to solve this inverse rendering problem, we train a Neural Network to approximate rendering.

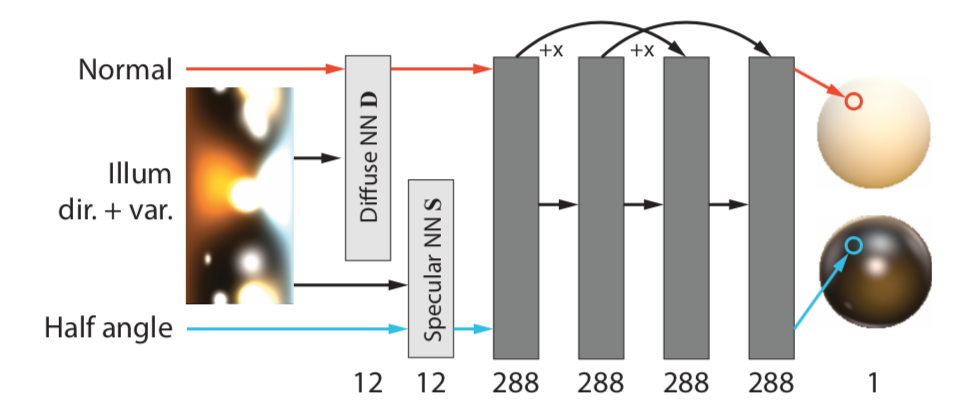

Our diffuse (orange) and specular (blue) neural network architectures, which consume either a normal and a single illumination lobe, or a half-angle and a lobe (left), to produce a color (right).
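To make the role of such a network in the optimization concrete, the following toy sketch evaluates a small fully connected network on a normal concatenated with lobe parameters and backpropagates to obtain the derivative of the output with respect to those inputs. It is not the architecture from the paper: the layer sizes, the `tanh` activation, the 7-value input layout, the scalar output, and the random untrained weights are all hypothetical.

```python
import math
import random

def make_mlp(n_in, n_hidden, seed=0):
    """Random, untrained weights for a one-hidden-layer network (illustration only)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    return w1, b1, w2

def forward(params, x):
    """Return the scalar output and the hidden activations."""
    w1, b1, w2 = params
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return sum(w * h for w, h in zip(w2, hidden)), hidden

def input_gradient(params, x):
    """Backpropagate to get d(output)/d(input), e.g. w.r.t. the lobe parameters."""
    w1, _, w2 = params
    _, hidden = forward(params, x)
    grad = [0.0] * len(x)
    for row, w_out, h in zip(w1, w2, hidden):
        scale = w_out * (1.0 - h * h)  # derivative of tanh is 1 - tanh^2
        for k, w in enumerate(row):
            grad[k] += scale * w
    return grad

def numerical_gradient(params, x, eps=1e-5):
    """Central finite differences, used only to check the analytic gradient."""
    grad = []
    for k in range(len(x)):
        hi = list(x); hi[k] += eps
        lo = list(x); lo[k] -= eps
        grad.append((forward(params, hi)[0] - forward(params, lo)[0]) / (2 * eps))
    return grad

# Hypothetical input layout: normal (3), lobe mean (3), lobe sharpness (1).
x = [0.0, 0.0, 1.0, 0.6, 0.0, 0.8, 2.0]
net = make_mlp(n_in=len(x), n_hidden=8)
analytic = input_gradient(net, x)
numeric = numerical_gradient(net, x)
```

Because the network is differentiable, the gradient with respect to the lobe entries of `x` is available in closed form, which is the kind of quantity a gradient-based fit of illumination and material parameters needs.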

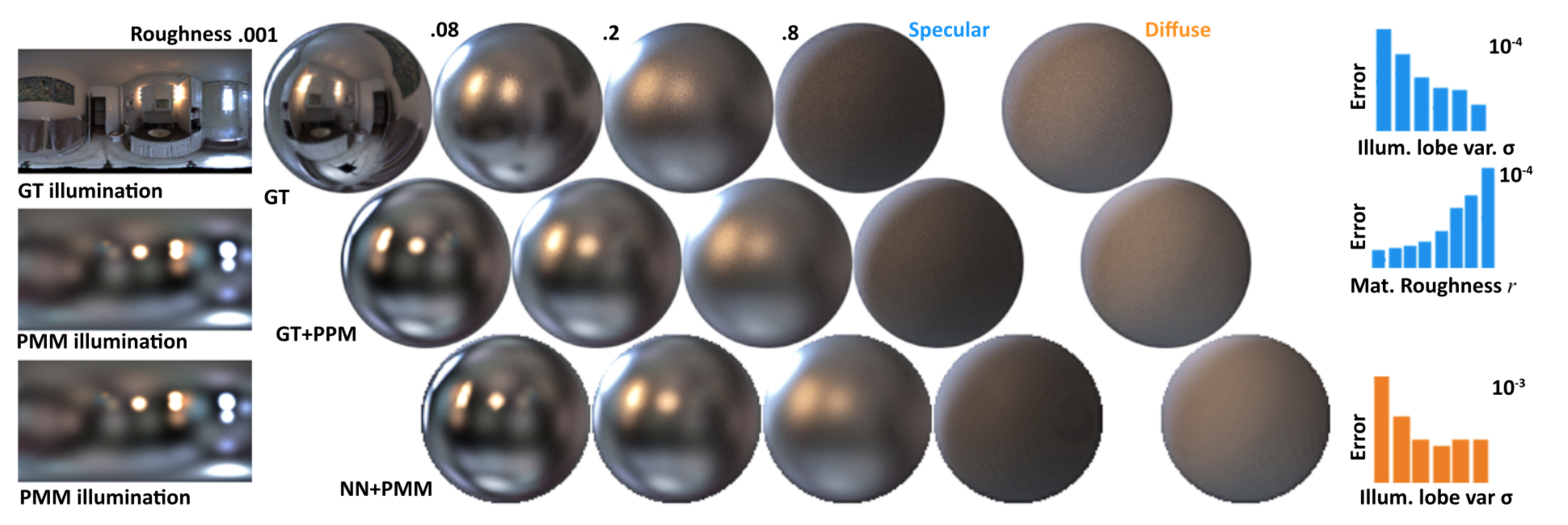

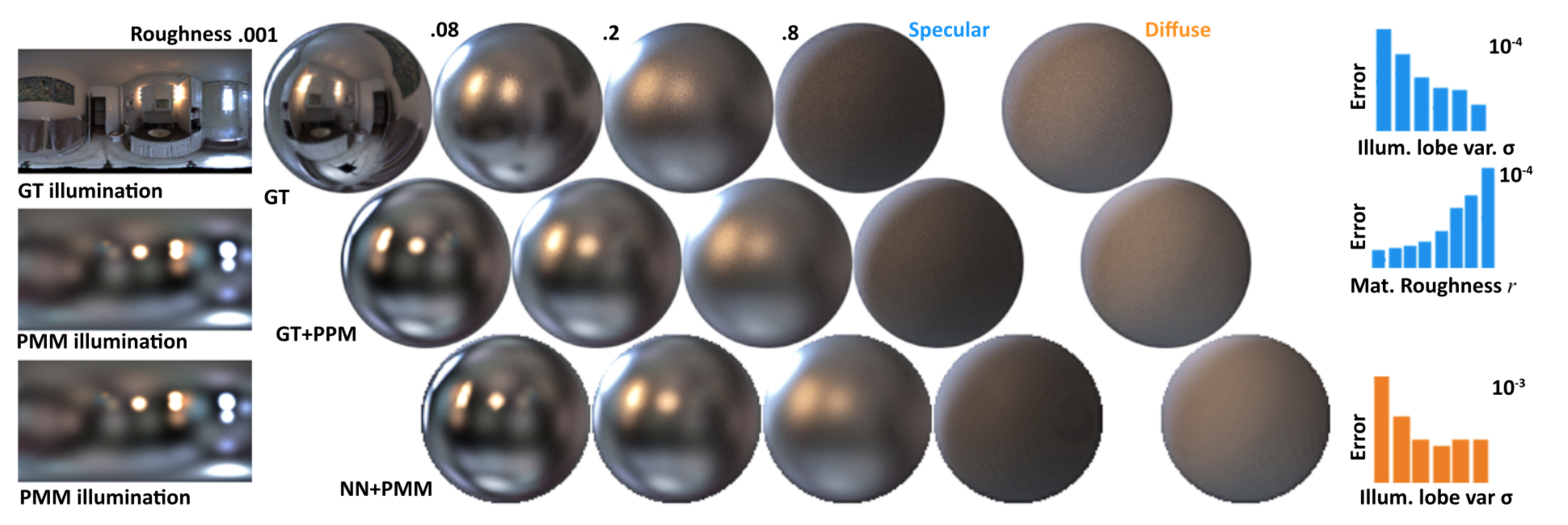

Evaluation of the neural network. The first row shows GT renderings with a GT envmap. The second row shows again GT rendering, but using the GMM fit to the envmap. This is an upper bound on the NN quality, as it works on the GMM representation. The third row shows the NN result. In the horizontal direction, specular results of increasing roughness are followed by the diffuse result in the rightmost column. The plots on the right below show the error distribution as a function of different parameters.

Bibtex

@inproceedings{jointMaterialIlluminationEst_Wang_3DV18,
Author = {Tuanfeng Y. Wang and Tobias Ritschel and Niloy J. Mitra},
booktitle = {Proceedings of International Conference on 3DVision ({3DV})},
note = {selected for oral presentation},
Title = {Joint Material and Illumination Estimation from Photo Sets in the Wild},
Year = {2018}}

Acknowledgements

This work is in part supported by a Microsoft PhD fellowship program, a Rabin Ezra Trust Scholarship, ERC Starting Grant SmartGeometry (StG-2013-335373), and UK Engineering and Physical Sciences Research Council (grant EP/K023578/1).