StructEdit: Learning Structural Shape Variations

Kaichun Mo1*

Paul Guerrero2*

Li Yi1

Hao Su3

Peter Wonka4

Niloy J. Mitra2,5

Leonidas J. Guibas1,6

1Stanford University

2Adobe Research

3University of California San Diego

4King Abdullah University of Science and Technology (KAUST)

5University College London

6Facebook AI Research

*joint first authors

CVPR 2020

Abstract

Learning to encode differences in the geometry and (topological) structure of the shapes of ordinary objects is key to generating semantically plausible variations of a given shape, transferring edits from one shape to another, and many other applications in 3D content creation. The common approach of encoding shapes as points in a high-dimensional latent feature space suggests treating shape differences as vectors in that space. Instead, we treat shape differences as primary objects in their own right and propose to encode them in their own latent space. In a setting where the shapes themselves are encoded in terms of fine-grained part hierarchies, we demonstrate that a separate encoding of shape deltas or differences provides a principled way to deal with inhomogeneities in the shape space due to different combinatorial part structures, while also allowing for compactness in the representation, as well as edit abstraction and transfer. Our approach is based on a conditional variational autoencoder for encoding and decoding shape deltas, conditioned on a source shape. We demonstrate the effectiveness and robustness of our approach in multiple shape modification and generation tasks, and provide comparison and ablation studies on the PartNet dataset, one of the largest publicly available 3D datasets.
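For reference, a conditional VAE is usually trained by maximizing the generic conditional evidence lower bound below. Here the notation is our own and is not taken from the paper: Δ is a shape delta, S the source shape it is conditioned on, z the latent code, q_φ the encoder, p_θ the decoder, and the prior p(z) is assumed to be a standard normal N(0, I). This is a sketch of the standard bound, not necessarily the exact StructEdit training loss.

```latex
\log p_\theta(\Delta \mid S) \;\ge\;
  \mathbb{E}_{q_\phi(z \mid \Delta, S)}\!\left[\log p_\theta(\Delta \mid z, S)\right]
  \;-\; D_{\mathrm{KL}}\!\left(q_\phi(z \mid \Delta, S)\,\middle\|\,p(z)\right),
\qquad p(z) = \mathcal{N}(0, I)
```

The first term rewards reconstructing the delta from its code given the source. The second keeps the per-delta codes close to the prior, which is what later makes it possible to sample new edits by drawing z from p(z).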

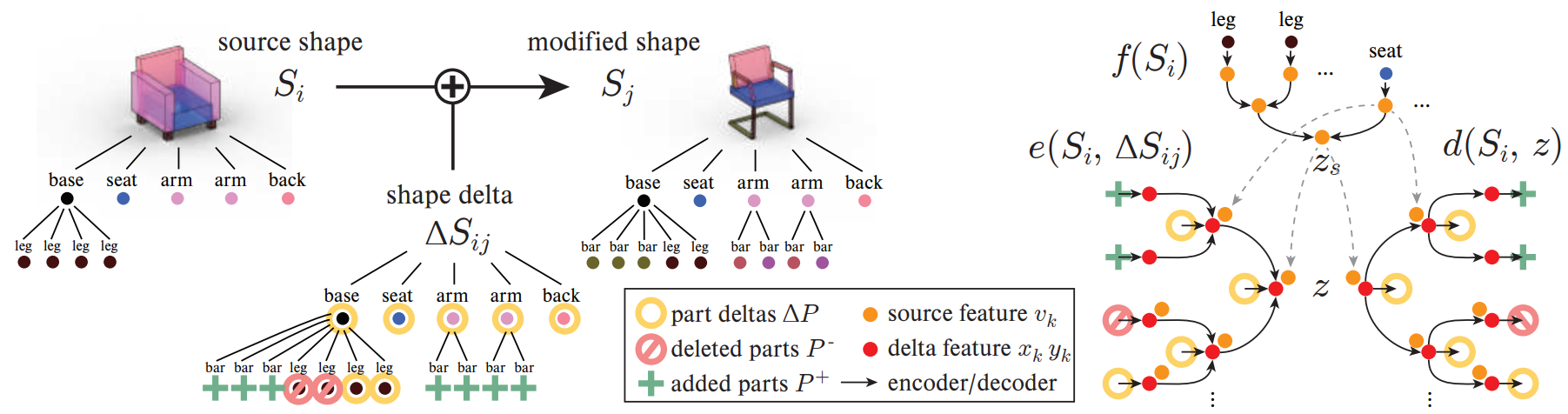

Shape Deltas and Network Architecture

On the left, we show a source shape and a modified shape. Both are represented as hierarchical assemblies of individual parts, where each part is defined by a bounding box and a semantic category (see colors). The shape delta describes the transformation of the source into the modified shape with three types of components: part deltas, deleted parts, and added parts. We learn a distribution of these deltas, conditioned on the source shape, using the conditional VAE illustrated on the right side. Shape deltas are encoded and decoded recursively, following their tree structure, yielding one feature per subtree (red circles). Conditioning on the source shape is implemented by recursively encoding the source shape, and feeding the resulting subtree features (orange circles) to the corresponding parts of the encoder and decoder.
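The three delta components described above can be illustrated with a small data structure. The sketch below is hypothetical: the `Part`, `ShapeDelta`, and `apply_delta` names, the axis-aligned (center, size) box parameterization, and the additive box offsets are all assumptions made for illustration, not the paper's implementation. It applies part deltas, deletions, and additions recursively over the part hierarchy, mirroring the tree-structured view of a delta.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical box parameterization (an assumption, not the paper's):
# axis-aligned center (x, y, z) followed by size (x, y, z).
Box = Tuple[float, float, float, float, float, float]


@dataclass
class Part:
    pid: str                      # unique part id
    category: str                 # semantic label, e.g. "chair_seat"
    box: Box
    children: List["Part"] = field(default_factory=list)


@dataclass
class ShapeDelta:
    part_deltas: Dict[str, Box] = field(default_factory=dict)   # pid -> additive box offset
    deleted: Set[str] = field(default_factory=set)               # pids dropped with their subtrees
    added: Dict[str, List[Part]] = field(default_factory=dict)   # parent pid -> new child parts


def apply_delta(part: Part, delta: ShapeDelta) -> Part:
    """Recursively apply a shape delta to a part subtree and return a new tree."""
    offset = delta.part_deltas.get(part.pid, (0.0,) * 6)
    new_box = tuple(b + o for b, o in zip(part.box, offset))
    # Keep (and recursively edit) children that are not deleted, then attach added parts.
    kept = [apply_delta(c, delta) for c in part.children if c.pid not in delta.deleted]
    return Part(part.pid, part.category, new_box, kept + delta.added.get(part.pid, []))


# Usage: widen the seat, delete the back, and add an armrest.
chair = Part("root", "chair", (0, 0, 0, 1, 1, 1), [
    Part("seat", "chair_seat", (0, 0.5, 0, 1, 0.1, 1)),
    Part("back", "chair_back", (0, 1.0, -0.45, 1, 1, 0.1)),
])
delta = ShapeDelta(
    part_deltas={"seat": (0, 0, 0, 0.2, 0, 0.2)},
    deleted={"back"},
    added={"root": [Part("arm", "chair_arm", (0.55, 0.7, 0, 0.1, 0.3, 0.8))]},
)
edited = apply_delta(chair, delta)
print([c.pid for c in edited.children])  # ['seat', 'arm']
```

Because `apply_delta` builds a new tree, the source shape is left unchanged. This is convenient when many different deltas are applied to the same source.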

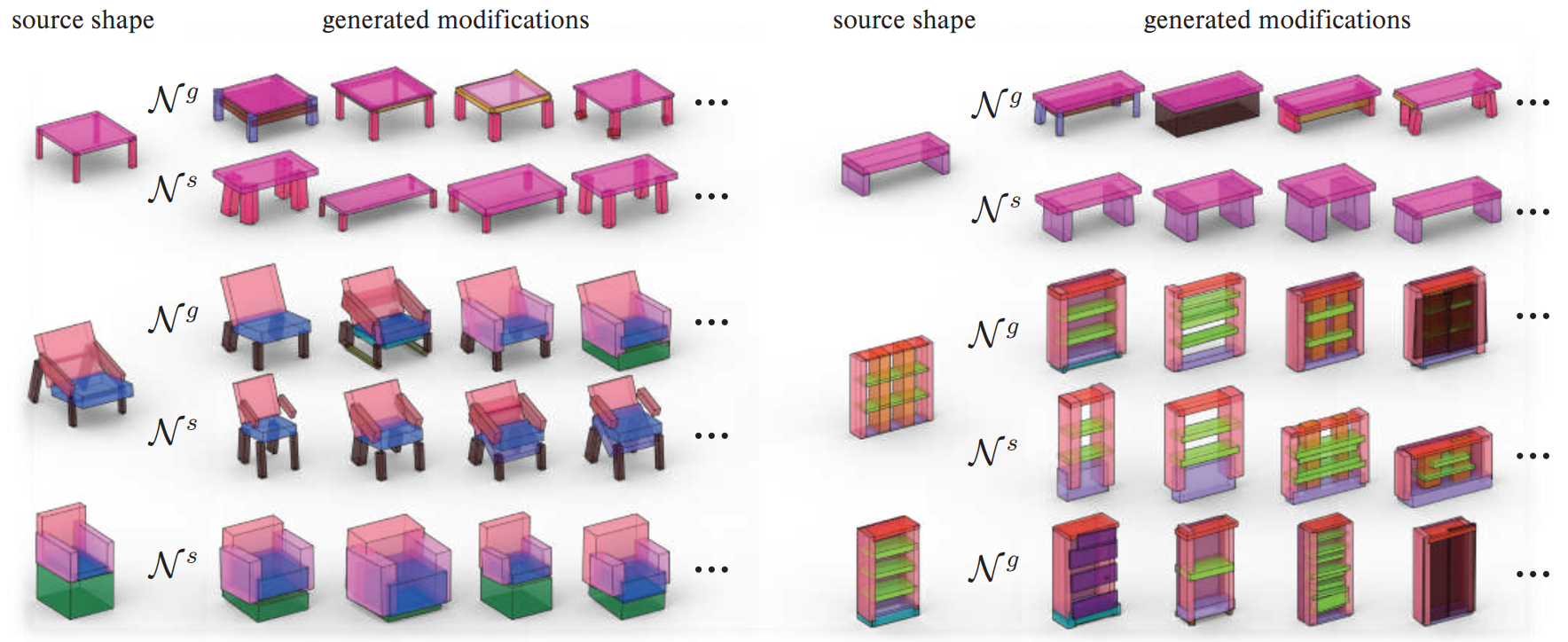

Edit Generation

Adding random edits to the source shape on the left allows us to explore a large range of variations. For neighborhoods Ng, we obtain structural variations, while for Ns we create geometric variations. We show shapes in all categories decoded from random latent vectors, including shapes with bounding box geometry and shapes with point cloud geometry. Parts are colored according to semantics; see the supplementary material for the full semantic hierarchy of each category. Since we explicitly encode shape structure in our latent representation, the generated shapes have a large variety of different structures.
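The sampling loop behind random edit generation can be sketched as follows, assuming a standard normal prior over edit codes. `sample_edits` and `toy_decode` are hypothetical names. `toy_decode` is a hand-written stand-in for the learned, source-conditioned decoder that only rescales two part heights; it is not StructEdit's decoder.

```python
import math
import random
from typing import Callable, Dict, List, Sequence

Shape = Dict[str, float]  # hypothetical: semantic part -> part height
Decoder = Callable[[Sequence[float], Shape], Shape]


def sample_edits(source: Shape, decode: Decoder, n: int, latent_dim: int, seed: int = 0) -> List[Shape]:
    """Draw n latent codes z ~ N(0, I) and decode each one as an edit of the same source."""
    rng = random.Random(seed)
    variations = []
    for _ in range(n):
        z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
        variations.append(decode(z, source))
    return variations


def toy_decode(z: Sequence[float], source: Shape) -> Shape:
    """Stand-in for a learned decoder: z[0] and z[1] rescale the leg and back heights."""
    return {"leg": source["leg"] * math.exp(0.1 * z[0]),
            "back": source["back"] * math.exp(0.1 * z[1])}


variations = sample_edits({"leg": 0.5, "back": 0.6}, toy_decode, n=5, latent_dim=8)
```

The source stays fixed and only z changes, so every sample is a variation of the same input shape. A fixed seed makes a set of variations reproducible.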

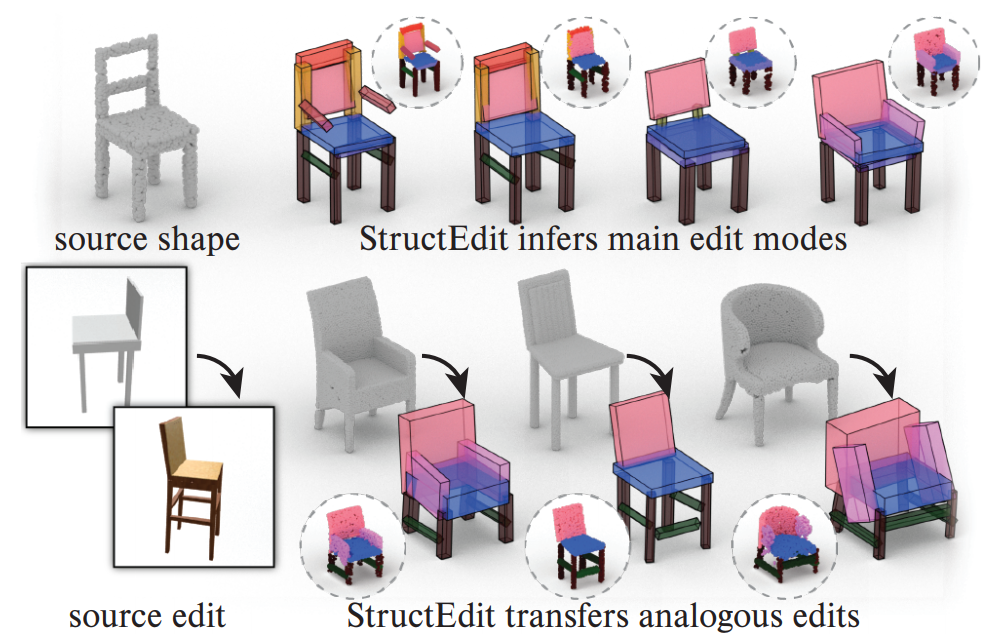

Edit Transfer

We transfer the edit of the source shape in the top row to analogous edits of the source shapes in the remaining rows, using StructureNet (SN) and StructEdit (SE). Our explicit encoding of edits makes the transferred edits more consistent with each other.
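Edit transfer follows an encode-then-decode pattern. The edit between a source and its modified version is encoded once, and the resulting code is then decoded relative to a different source. In the sketch below, `encode_edit` and `decode_edit` are hypothetical, hand-crafted stand-ins for the learned encoder and decoder. The edit code is just a per-category height ratio, and the flat `(part id, category, height)` shape format is also an assumption. Even this crude version shows that such a code can be applied to a target with a different part structure, here three legs instead of four plus an extra back.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Shape = List[Tuple[str, str, float]]  # hypothetical: (part id, semantic category, part height)


def encode_edit(source: Shape, edited: Shape) -> Dict[str, float]:
    """Toy edit code: mean height ratio per semantic category (parts matched by id)."""
    after = {pid: h for pid, _, h in edited}
    ratios = defaultdict(list)
    for pid, cat, h in source:
        if pid in after:
            ratios[cat].append(after[pid] / h)
    return {cat: sum(r) / len(r) for cat, r in ratios.items()}


def decode_edit(code: Dict[str, float], source: Shape) -> Shape:
    """Apply the category-level edit to a source shape that may have a different structure."""
    return [(pid, cat, h * code.get(cat, 1.0)) for pid, cat, h in source]


# Source A (four legs) and its edit: every leg doubles in height.
a = [("seat", "seat", 0.1), *[(f"leg{i}", "leg", 0.4) for i in range(4)]]
a_edited = [("seat", "seat", 0.1), *[(f"leg{i}", "leg", 0.8) for i in range(4)]]
# Source B has a back and only three legs.
b = [("seat", "seat", 0.12), ("back", "back", 0.5), *[(f"l{i}", "leg", 0.3) for i in range(3)]]
b_edited = decode_edit(encode_edit(a, a_edited), b)
```

Adding a latent difference vector z(A') − z(A) to z(B) assumes the shape space is homogeneous. An edit code that is decoded relative to the new source's own parts avoids that assumption, which is the motivation given in the abstract.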

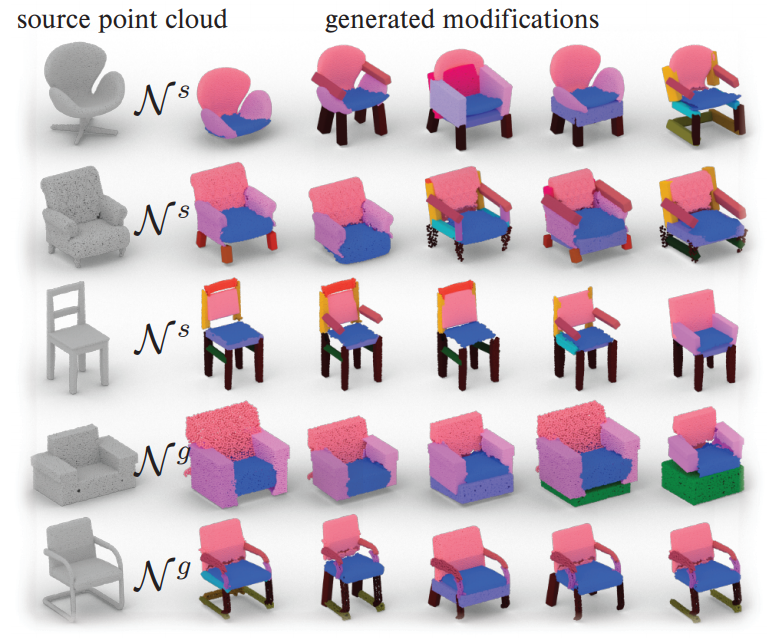

Exploring Point Cloud Variations

Edits for point clouds can be created by transforming the point cloud into our structured shape representation, applying the edit, and passing the changes back to the point cloud.
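One simple way to pass box-level changes back to the points is to move each part's points so that they keep their relative position inside the part's box. The sketch below assumes that points are already segmented into parts and that boxes are axis-aligned `(cx, cy, cz, sx, sy, sz)` with non-zero sizes. `remap_points` is a hypothetical helper, and this mapping is not necessarily the one used in the paper.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def remap_points(points: Sequence[Point], old_box: Sequence[float], new_box: Sequence[float]) -> List[Point]:
    """Move a part's points from its original box into its edited box.

    Boxes are axis-aligned (cx, cy, cz, sx, sy, sz) with non-zero sizes. Each point
    keeps its relative position inside the box: p' = c' + (p - c) * s' / s.
    """
    c, s = old_box[:3], old_box[3:]
    c2, s2 = new_box[:3], new_box[3:]
    return [tuple(c2[k] + (p[k] - c[k]) * s2[k] / s[k] for k in range(3)) for p in points]


# Usage: a leg whose box doubles in height while its bottom stays on the ground (y = 0).
leg_points = [(0.0, 0.0, 0.0), (0.05, 0.4, 0.05), (0.0, 0.2, 0.0)]
old_leg_box = (0.0, 0.2, 0.0, 0.1, 0.4, 0.1)
new_leg_box = (0.0, 0.4, 0.0, 0.1, 0.8, 0.1)
moved = remap_points(leg_points, old_leg_box, new_leg_box)
```

Under this scheme, points of deleted parts would simply be dropped. Added parts have no points to move, so their geometry would have to come from elsewhere.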

Cross-Modal Edit Analogy

We can transfer edits between modalities by transforming different shape modalities to our structured shape representation.

Bibtex

@article{MoGuerreroEtAl:StructEdit:Arxiv:2019,
  title={StructEdit: Learning Structural Shape Variations},
  author={Mo, Kaichun and Guerrero, Paul and Yi, Li and Su, Hao and Wonka, Peter and Mitra, Niloy and Guibas, Leonidas},
  journal={arXiv preprint arXiv:1908.00575},
  year={2019}
}

Acknowledgements

This research was supported by NSF grant CHS-1528025, a Vannevar Bush Faculty Fellowship, KAUST Award No. OSR-CRG2017-3426, an ERC Starting Grant (SmartGeometry StG-2013-335373), an ERC PoC Grant (SemanticCity), Google Faculty Awards, Google PhD Fellowships, a Royal Society Advanced Newton Fellowship, and gifts from the Adobe, Autodesk, and Google corporations and the Dassault Foundation.