Abstract

Automatic estimation of salient object regions across images, without any prior assumption or knowledge of the contents of the corresponding scenes, enhances many computer vision and computer graphics applications. We introduce a regional contrast-based salient object extraction algorithm, which simultaneously evaluates global contrast differences and spatially weighted coherence scores. The proposed algorithm is simple, efficient, naturally multi-scale, and produces full-resolution, high-quality saliency maps. These saliency maps are further used to initialize a novel iterative version of GrabCut for high-quality salient object segmentation. We extensively evaluated our algorithm using traditional salient object detection datasets, as well as a more challenging Internet image dataset. Our experimental results demonstrate that our algorithm consistently outperforms existing salient object detection and segmentation methods, yielding higher precision and better recall rates. We also show that our algorithm can be used to efficiently extract salient object masks from Internet images, enabling effective sketch-based image retrieval (SBIR) via simple shape comparisons. Even on such noisy Internet images, where the salient regions are ambiguous, our saliency-guided image retrieval achieves a superior retrieval rate compared with state-of-the-art SBIR methods, and additionally provides important target object region information.
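To make the idea of combining global contrast with spatial weighting more concrete, here is a minimal sketch, not the authors' implementation. It assumes the image has already been segmented into regions, and it simplifies each region to a mean color, a centroid in normalized [0, 1] coordinates, and a pixel count. The function name `region_contrast_saliency`, the dictionary keys, and the default `sigma_sq=0.4` are illustrative assumptions; please see the paper for the actual formulation.

```python
import math


def region_contrast_saliency(regions, sigma_sq=0.4):
    """Score each region by its color contrast to all other regions.

    regions: list of dicts with hypothetical keys
        'color'    -> mean color of the region (tuple of floats)
        'centroid' -> (x, y) in normalized [0, 1] image coordinates
        'size'     -> number of pixels in the region
    sigma_sq: assumed strength of the spatial weighting; larger values
        let distant regions contribute more.
    Returns a list of saliency values scaled to [0, 1].
    """
    scores = []
    for k, rk in enumerate(regions):
        score = 0.0
        for i, ri in enumerate(regions):
            if i == k:
                continue
            # Global contrast: color difference to every other region.
            color_dist = math.dist(rk["color"], ri["color"])
            # Spatial weighting: nearby regions count more than far ones.
            spatial_dist = math.dist(rk["centroid"], ri["centroid"])
            spatial_weight = math.exp(-spatial_dist / sigma_sq)
            # Larger regions contribute more contrast.
            score += spatial_weight * ri["size"] * color_dist
        scores.append(score)

    top = max(scores) if scores else 0.0
    if top == 0.0:
        return [0.0 for _ in scores]
    return [s / top for s in scores]


# Toy example: two similar background regions and one distinct object.
regions = [
    {"color": (50.0, 0.0, 0.0), "centroid": (0.25, 0.5), "size": 4000},
    {"color": (52.0, 0.0, 0.0), "centroid": (0.75, 0.5), "size": 4000},
    {"color": (80.0, 40.0, 40.0), "centroid": (0.50, 0.5), "size": 2000},
]
saliency = region_contrast_saliency(regions)
```

In this toy setup the third region differs most in color from its large neighbors, so it receives the highest score. A real pipeline would compare color histograms rather than mean colors and would write the per-region scores back to pixels to obtain a full-resolution map.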

Comparisons with state-of-the-art methods

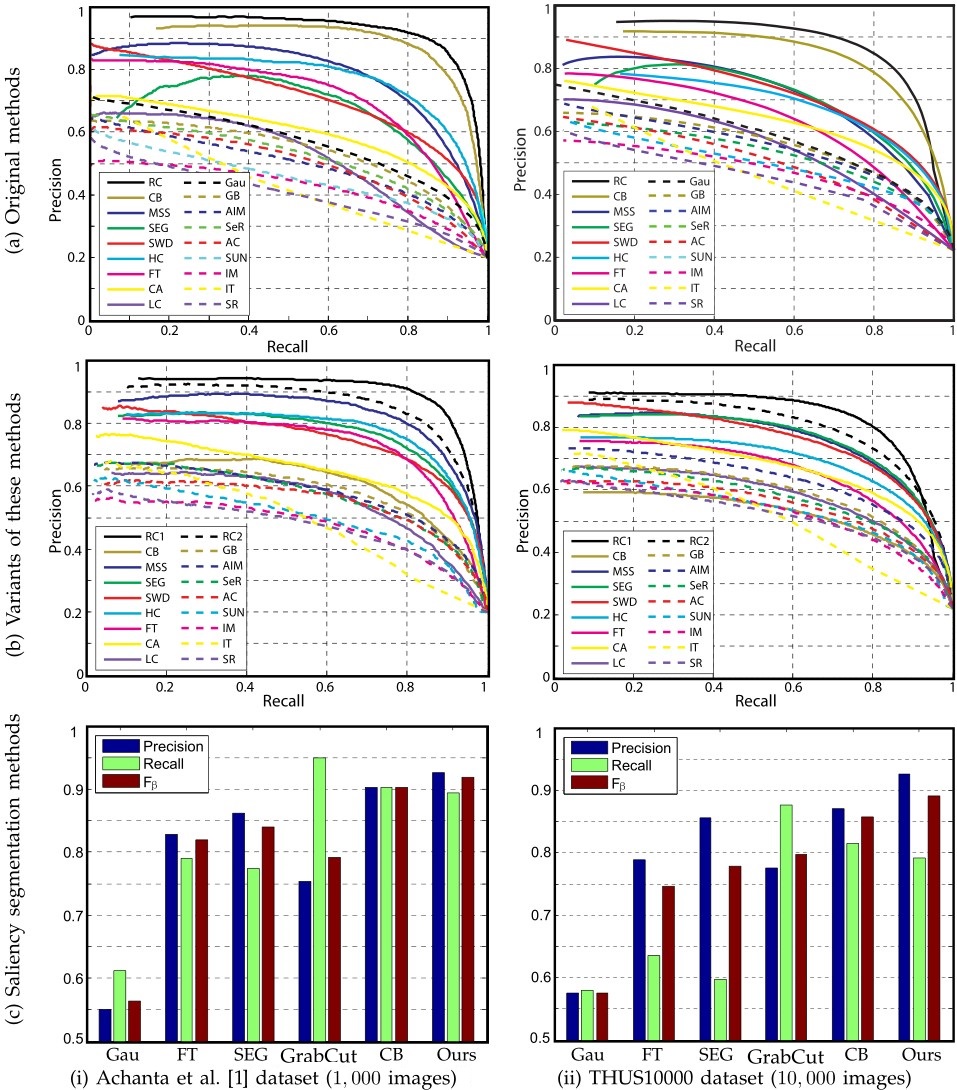

Figure 2: Statistical comparison results of (a) different salient region detection methods, (b) their variants, and (c) object-of-interest region segmentation methods, using (i) the largest publicly available dataset and (ii) our MSRA10K dataset (to be made publicly available). We compare our HC and RC methods with 15 state-of-the-art methods: FT [1], AIM [2], MSS [3], SEG [4], SeR [5], SUN [6], SWD [7], IM [8], IT [9], GB [10], SR [11], CA [12], LC [13], AC [14], and CB [15]. We also take a simple variable-size Gaussian model ('Gau') and the GrabCut method as baselines. (Please see our paper for detailed explanations.)

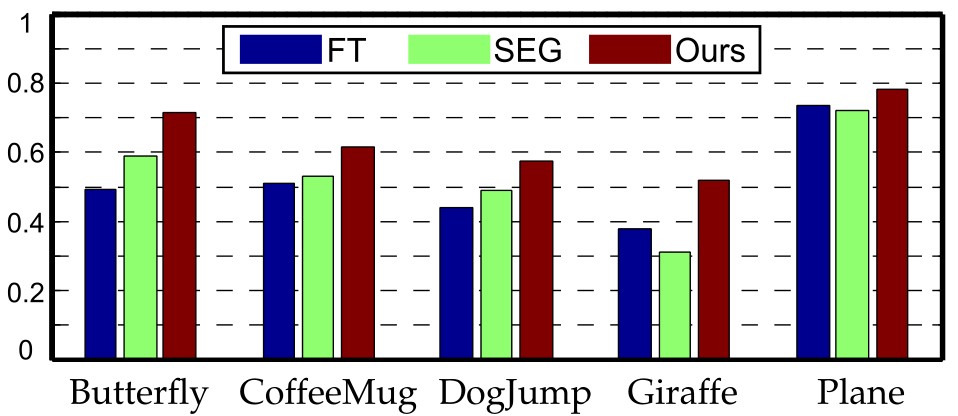

Figure 3: Comparison of average Fβ for different saliency segmentation methods: FT [1], SEG [4], and ours, on the THUR15K dataset, which is composed of non-selected Internet images.
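For readers unfamiliar with the Fβ score reported in Figure 3, it is the weighted harmonic mean of precision and recall, Fβ = (1 + β²) · P · R / (β² · P + R). The sketch below shows one way to compute it for a binary segmentation mask. The helper names and the default β² = 0.3 (which weights precision more than recall) are assumptions for illustration; please check the paper for the exact setting used in the evaluation.

```python
def precision_recall(pred_mask, gt_mask):
    """Precision and recall of a binary mask against ground truth.

    Both masks are flat sequences of 0/1 values of equal length.
    """
    tp = sum(1 for p, g in zip(pred_mask, gt_mask) if p and g)
    n_pred = sum(1 for p in pred_mask if p)
    n_gt = sum(1 for g in gt_mask if g)
    precision = tp / n_pred if n_pred else 0.0
    recall = tp / n_gt if n_gt else 0.0
    return precision, recall


def f_beta(precision, recall, beta_sq=0.3):
    """Weighted harmonic mean of precision and recall.

    beta_sq is beta squared; 0.3 is an assumed default here.
    """
    denom = beta_sq * precision + recall
    if denom == 0.0:
        return 0.0
    return (1.0 + beta_sq) * precision * recall / denom


# Toy example: 3 predicted foreground pixels, 2 of them correct,
# and 3 ground-truth foreground pixels.
pred = [1, 1, 1, 0, 0, 0]
gt = [1, 1, 0, 1, 0, 0]
p, r = precision_recall(pred, gt)
score = f_beta(p, r)
```

An average Fβ over a dataset, as plotted in the figure, would then be the mean of this score across all test images.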

Acknowledgement

We thank the reviewers for their comments and suggestions for improving the paper. This work was supported in part by a UCL Impact award, the ERC Starting Grant SmartGeometry (StG-2013-335373), NSFC (No. 61402402), and gifts from Adobe Research.

Bibtex

@article{ChengPAMI,
  author = {Ming-Ming Cheng and Niloy J. Mitra and Xiaolei Huang and Philip H. S. Torr and Shi-Min Hu},
  title = {Global Contrast based Salient Region Detection},
  year = {2014},
  journal = {IEEE TPAMI},
  doi = {10.1109/TPAMI.2014.2345401},
}