Relationship Templates for Creating Scene Variations

- Xi Zhao1

- Ruizhen Hu2

- Paul Guerrero3

- Niloy J. Mitra3

- Taku Komura4

1 Xi'an Jiaotong University 2 Shenzhen University 3 University College London 4 Edinburgh University

SIGGRAPH ASIA 2016

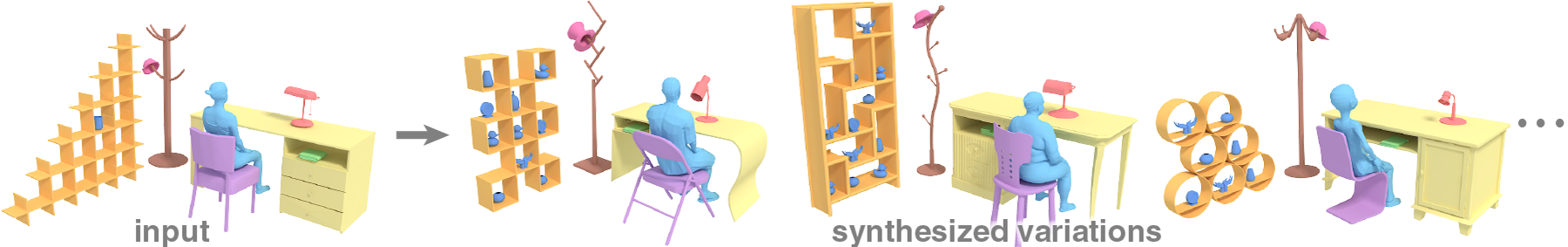

We propose a novel example-based scene synthesis approach based on how objects interact with each other in an example scene. The method handles complex relationships such as ‘hooked-on’ and ‘tucked-under,’ and does not rely on classification or labeling of input objects.

Abstract

We propose a novel example-based approach to synthesize scenes with complex relations, e.g., when one object is ‘hooked,’ ‘surrounded,’ ‘contained’ or ‘tucked into’ another object. Existing relationship descriptors used in automatic scene synthesis methods are based on contacts or relative vectors connecting the object centers. Such descriptors do not fully capture the geometry of spatial interactions, and therefore cannot describe complex relationships. Our idea is to enrich the description of spatial relations between object surfaces by encoding the geometry of the open space around objects, and use this as a template for fitting novel objects. To this end, we introduce relationship templates as descriptors of complex relationships; they are computed from an example scene and combine the interaction bisector surface (IBS) with a novel feature called the space coverage feature (SCF), which encodes the open space in the frequency domain. New variations of a scene can be synthesized efficiently by fitting novel objects to the template. Our method greatly enhances existing automatic scene synthesis approaches by allowing them to handle complex relationships, as validated by our user studies. The proposed method generalizes well, as it can form complex relationships with objects that have a topology and geometry very different from the example scene.
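To make the bisector idea concrete, here is a minimal 2D sketch. It assumes the common reading of the IBS as the set of points equidistant from two objects, and approximates it by brute force on a regular grid. The function names, the grid approach, and the tolerance are illustrative assumptions and not the authors' implementation, which works on 3D surfaces; the SCF is not shown.

```python
import math


def nearest_distance(p, points):
    """Distance from p to the closest sample in `points`."""
    return min(math.dist(p, q) for q in points)


def approximate_bisector(samples_a, samples_b, bounds, step=0.1, tol=0.05):
    """Return grid points that are roughly equidistant from both sample sets.

    Hypothetical helper: a coarse stand-in for a bisector surface,
    evaluated on a regular 2D grid inside `bounds`.
    """
    (xmin, xmax), (ymin, ymax) = bounds
    nx = int(round((xmax - xmin) / step)) + 1
    ny = int(round((ymax - ymin) / step)) + 1
    bisector = []
    for i in range(nx):
        for j in range(ny):
            p = (xmin + i * step, ymin + j * step)
            d_a = nearest_distance(p, samples_a)
            d_b = nearest_distance(p, samples_b)
            # Keep the point if it is (almost) equally far from both objects.
            if abs(d_a - d_b) <= tol:
                bisector.append(p)
    return bisector


# Toy example: two single-point "objects" placed symmetrically about x = 0.
object_a = [(-1.0, 0.0)]
object_b = [(1.0, 0.0)]
bisector = approximate_bisector(object_a, object_b, bounds=((-2, 2), (-2, 2)))
```

In this toy case the retained grid points lie on the vertical line x = 0, which is the bisector of the two sample points. For densely sampled object surfaces, a Voronoi-based construction would likely be more practical than a grid scan.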

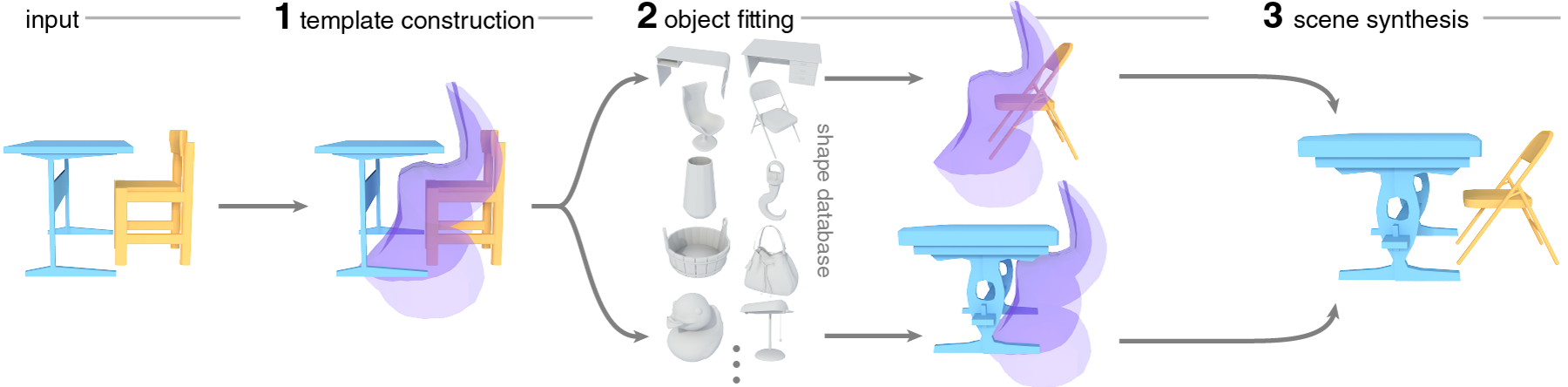

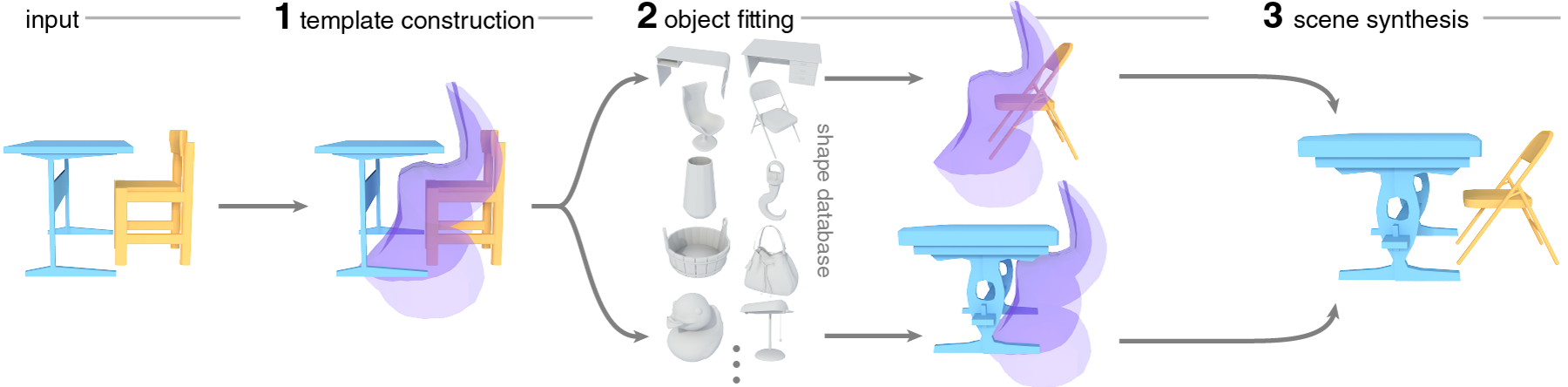

Overview

Overview of our synthesis of object pair variations. Given two example objects, we compute a relationship template that describes the geometric relationship between the two objects, shown in transparent blue. We can then fit objects from a database independently to each side of the template, guaranteeing that any fitted object pair will be in a relationship similar to the exemplar.

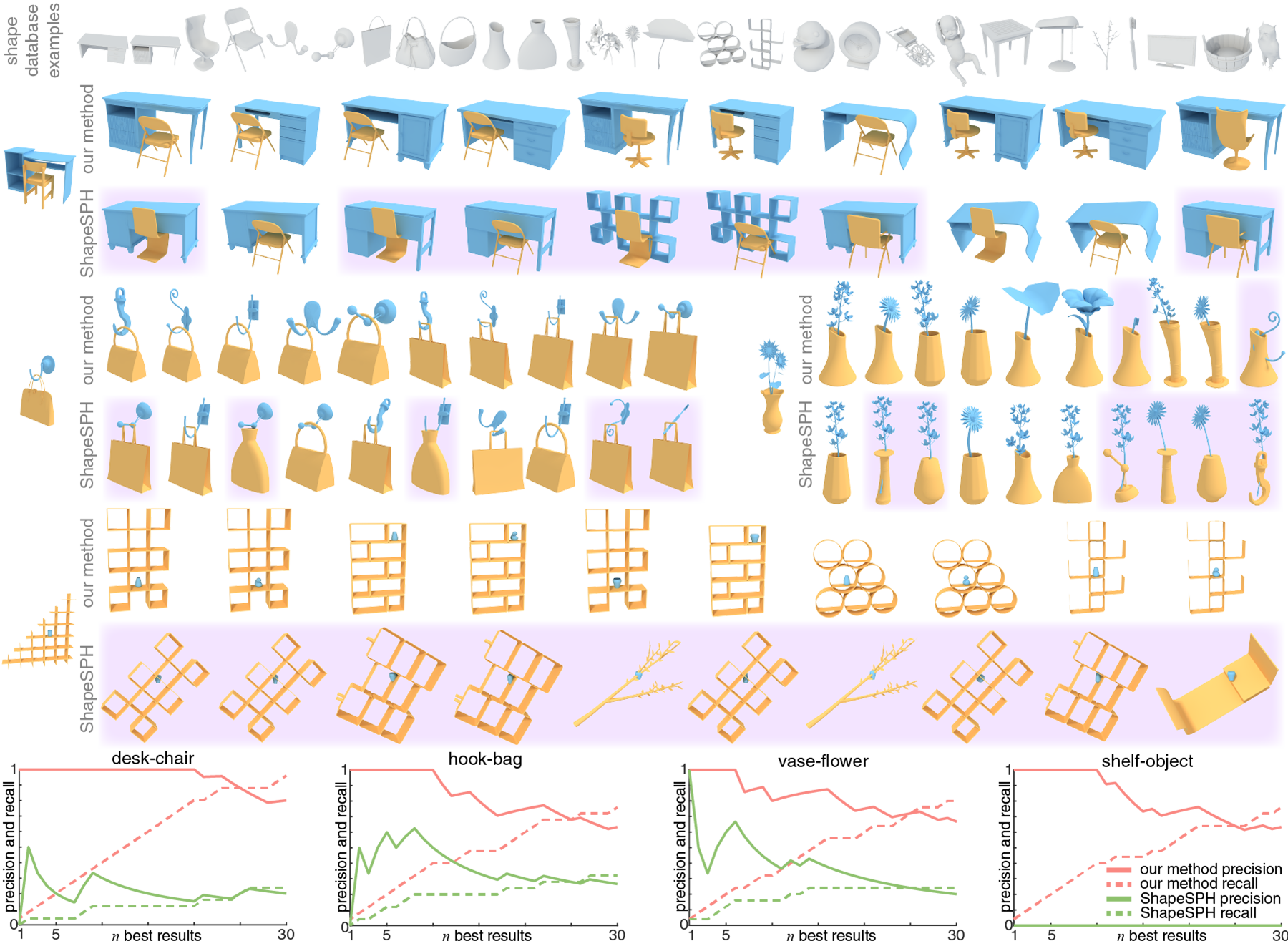

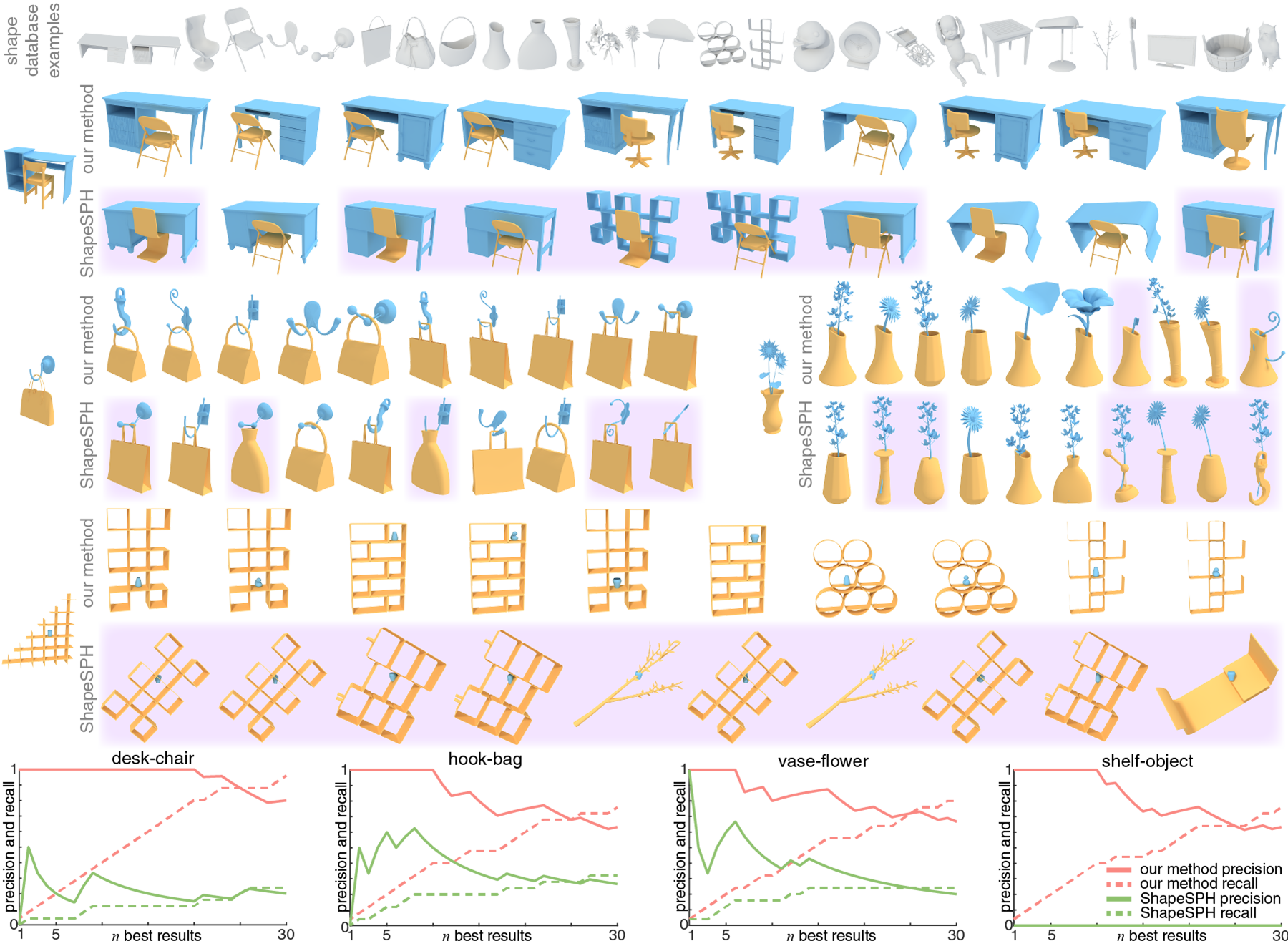

Pairwise Synthesis Results

We provide examples of synthesized object pairs in four different types of complex relationships. We compare against traditional shape matching using ShapeSPH [Funkhouser et al. 2004] as a baseline. Models are taken from a mixed shape database; a few examples are shown in the top row. Below, we show the four relationship exemplars on the left, followed on the right by the 10 best results of both our method and the baseline. Incorrect results have a purple background. In the bottom row, we provide precision and recall for up to the 30 best results of both methods.
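For readers unfamiliar with the metric, precision and recall over the k best results can be computed as in the sketch below. It assumes each method returns a ranked list of model identifiers and that a ground-truth set of correct models is available; the identifiers are made up for illustration and are not taken from the paper's database.

```python
def precision_recall_at_k(ranked_ids, relevant_ids, k):
    """Precision and recall over the k best-ranked results.

    ranked_ids:   model identifiers sorted from best to worst match
    relevant_ids: set of identifiers judged correct for this relationship
    """
    top_k = ranked_ids[:k]
    hits = sum(1 for item in top_k if item in relevant_ids)
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall


# Hypothetical ranking for a "hooked-on" exemplar.
ranked = ["hook_03", "cup_11", "hook_07", "chair_02", "hook_01"]
relevant = {"hook_01", "hook_03", "hook_07", "hook_09"}

precision_at_3, recall_at_3 = precision_recall_at_k(ranked, relevant, k=3)
```

Here two of the top three results are correct, so precision is 2/3, and two of the four correct models have been retrieved, so recall is 0.5. Sweeping k from 1 to 30 yields curves of the kind summarized in the bottom row.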

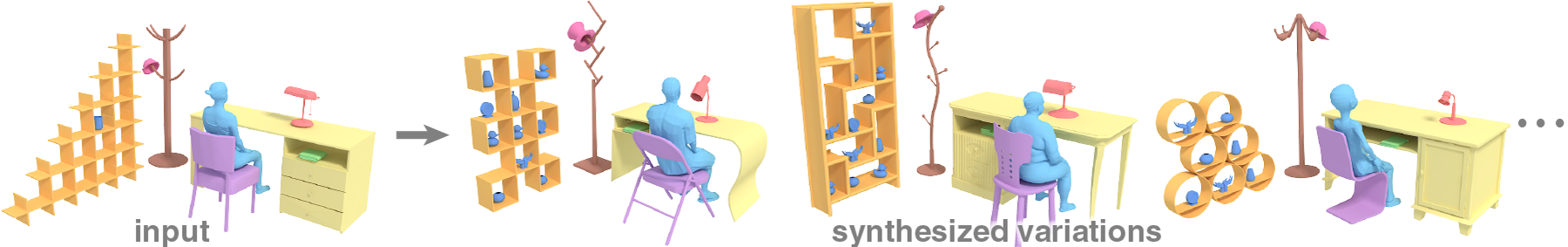

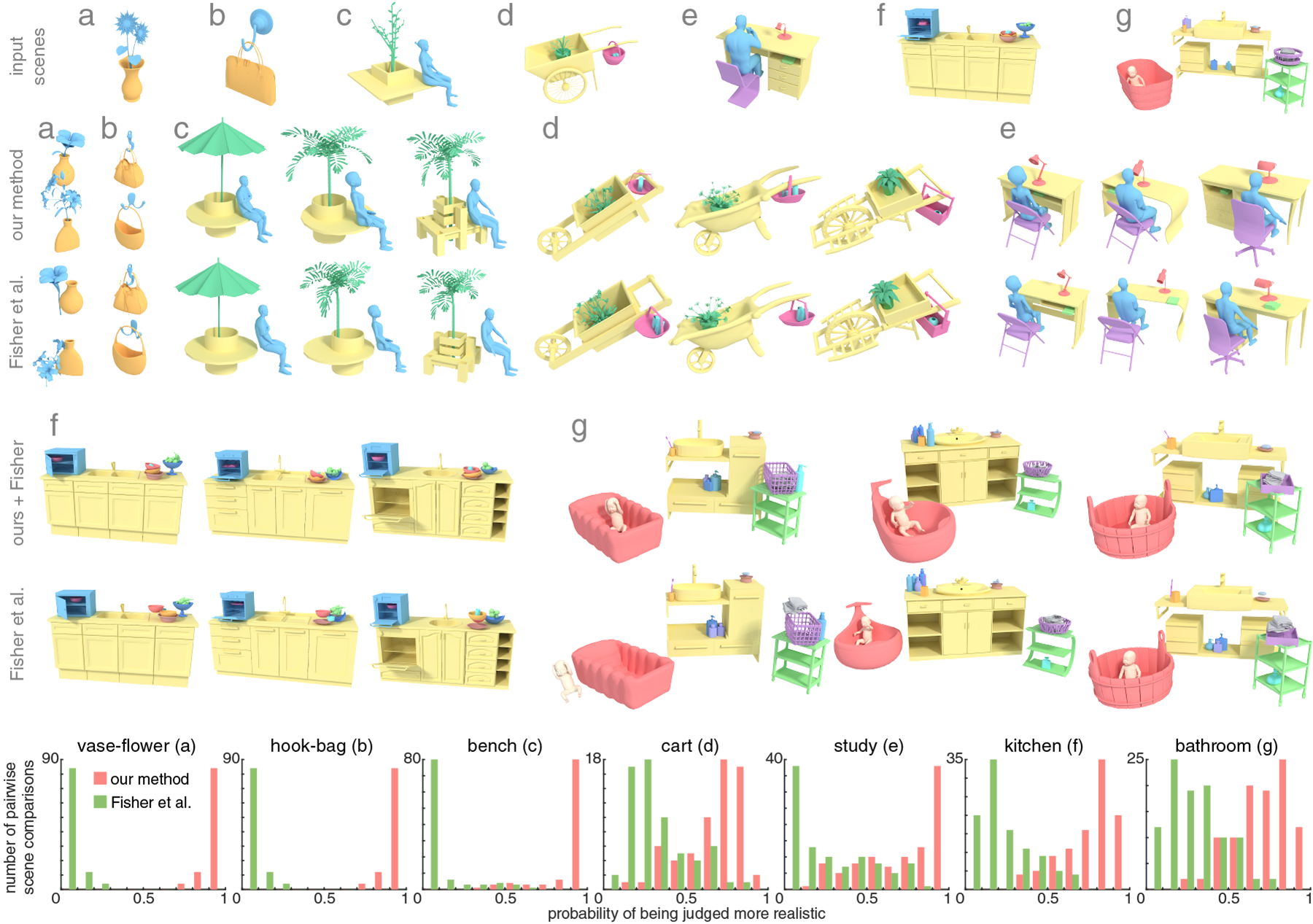

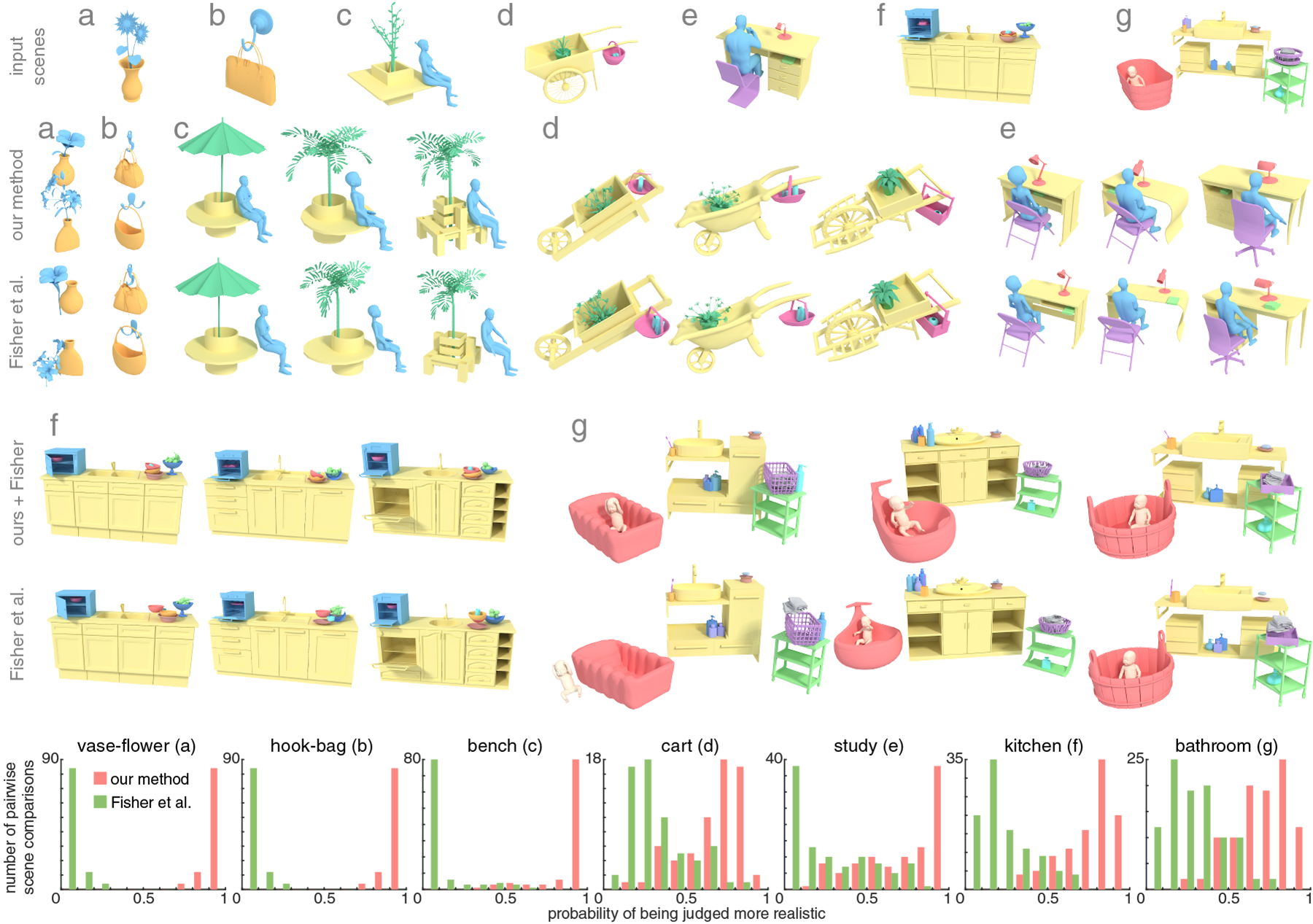

Larger-Scale Synthesis Results

We synthesize scenes from 5 different input exemplars (c-g) and compare to a state-of-the-art scene synthesis method [Fisher et al. 2012]. For completeness, we also include two pairwise synthesis results (a and b). In the top row, we show the input exemplars, followed below by several results from both our method and Fisher’s. Note the geometrically complex relationships, like sitting persons and hanging baskets, that are difficult to handle with previous methods. Results of a user study comparing the plausibility of synthesized scenes are shown in the bottom row. We compute the probability that synthesis results from either our method (red) or Fisher’s method (green) will be judged more realistic by study participants and construct histograms over all pairs of synthesis results in one scene type.
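One simple way to read the user-study histograms is sketched below. It assumes that each pair of synthesis results collects binary votes, that the probability is estimated as the fraction of votes favoring our method, and that the histogram uses equal-width bins; the paper's actual estimator may differ, and the vote counts are invented for illustration.

```python
def preference_probability(votes_for_ours, total_votes):
    """Fraction of participants who judged our result more realistic."""
    return votes_for_ours / total_votes


def histogram(probabilities, num_bins=5):
    """Count probabilities in equal-width bins covering [0, 1]."""
    counts = [0] * num_bins
    for p in probabilities:
        # Clamp so that p == 1.0 falls into the last bin.
        index = min(int(p * num_bins), num_bins - 1)
        counts[index] += 1
    return counts


# Hypothetical votes per result pair: (votes for our method, total votes).
pair_votes = [(9, 10), (7, 10), (3, 10), (10, 10)]
probabilities = [preference_probability(v, n) for v, n in pair_votes]
counts = histogram(probabilities)
```

With these made-up votes, most pairs land in the upper bins, meaning participants usually preferred our result for those pairs.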

Bibtex

@article{ZhaoEtAl:RelationshipTemplates:2016,

title = {Relationship Templates for Creating Scene Variations},

author = {Xi Zhao and Ruizhen Hu and Paul Guerrero and Niloy J. Mitra and Taku Komura},

year = {2016},

journal = {ACM Trans. Graph.},

publisher = {ACM},

volume = {35},

number = {6},

issn = {0730-0301},

pages = {207:1--207:13},

articleno = {207},

numpages = {13},

doi = {10.1145/2980179.2982410},

}

Acknowledgements

We thank the anonymous reviewers for their comments and constructive suggestions, and the anonymous Mechanical Turk users. This work was supported in part by the China Postdoctoral Science Foundation (2015M582664), the National Science Foundation for Young Scholars of China (61602366), NSFC (61232011, 61602311), Guangdong Science and Technology Program (2015A030312015, 2016A050503036), Shenzhen Innovation Program (JCYJ20151015151249564), the ERC Starting Grant SmartGeometry (StG-2013-335373), Marie Curie CIG 303541, the Open3D Project (EPSRC Grant EP/M013685/1), the Topology-based Motion Synthesis Project (EPSRC Grant EP/H012338/1) and the FP7 TOMSY.

Links

Paper (7.97MB)

Supplementary Material (28.9MB)

Slides (14.1MB)

Data

(coming soon)