Spectral Style Transfer for Human Motion between Independent Actions

- M. Ersin Yumer¹

- Niloy J. Mitra²

¹Adobe ²University College London

SIGGRAPH 2016

Abstract

Human motion is complex and difficult to synthesize realistically. Automatic style transfer, which transforms the mood or identity of a character’s motion, is a key technology for increasing the value of already synthesized or captured motion data. State-of-the-art methods typically require every independent action observed in the input to be present in a given style database to perform realistic style transfer. We introduce a spectral style transfer method for human motion between independent actions, thereby greatly reducing the effort and cost of creating such databases. We leverage a spectral-domain representation of human motion to formulate an approach that does not require spatial correspondences. We extract spectral intensity representations of the reference and source styles for an arbitrary action, and transfer their difference to a novel motion that may contain previously unseen actions. Building on this core method, we introduce a temporally sliding window filter that performs the same analysis locally in time, enabling heterogeneous motion processing. This also allows our approach to serve as a style database enhancement technique that fills in missing actions, improving the performance of previous style transfer methods. We evaluate our method both through quantitative experiments and through controlled user studies against previous work, in which our approach shows significant improvement.
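One intuition, offered here as our own illustration rather than the paper's derivation, for why a spectral intensity representation is less sensitive to how two motions line up in time: the DFT magnitude of a trajectory is unchanged when the motion is circularly shifted. The sketch below uses NumPy and a synthetic joint-angle curve; the helper name `spectral_intensity` and the toy data are hypothetical.

```python
import numpy as np

def spectral_intensity(signal: np.ndarray) -> np.ndarray:
    """Hypothetical helper: magnitude of the real FFT of a 1-D trajectory."""
    return np.abs(np.fft.rfft(signal))

# A toy joint-angle curve with two harmonics over 120 frames (synthetic data).
t = np.arange(120)
curve = np.sin(2 * np.pi * 3 * t / 120) + 0.5 * np.sin(2 * np.pi * 7 * t / 120 + 1.0)

# The same motion started 17 frames later (circular shift).
shifted = np.roll(curve, 17)

# Frame-by-frame values differ, but the spectral intensities coincide.
print(np.allclose(curve, shifted))                                          # False
print(np.allclose(spectral_intensity(curve), spectral_intensity(shifted)))  # True
```

Real captures are not perfectly periodic, so this invariance only holds approximately in practice; it is meant as intuition, not as a property claimed by the paper.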

Highlights

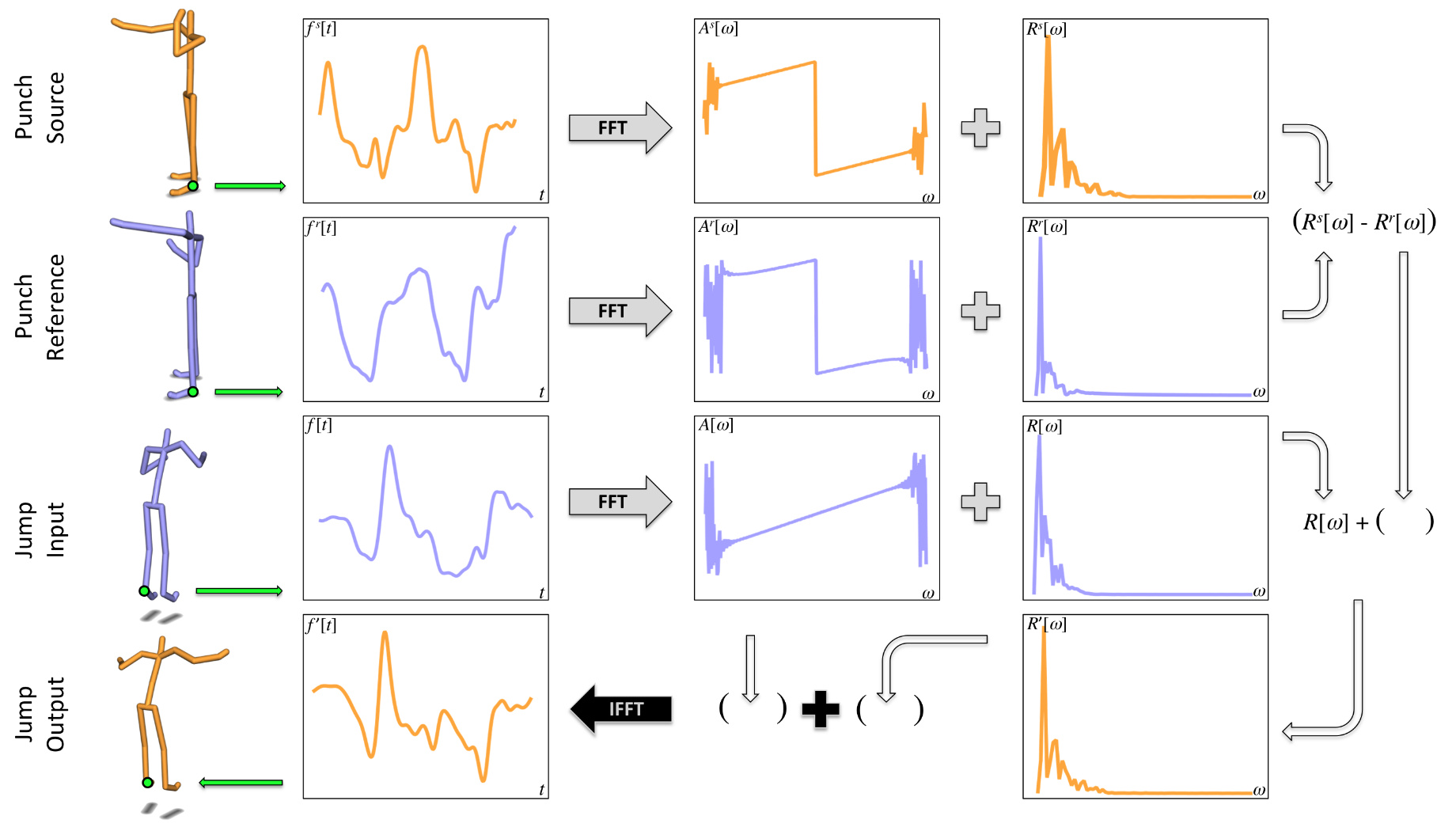

Time-domain signals: target f[t], source fs[t], and reference fr[t]. Spectral-domain processing: we keep the phase A[ω] constant and apply the difference between Rs[ω] and Rr[ω] to the magnitude R[ω], under the constraint that the time-domain signal remain real, to compute R0[ω]. Combining the stylized magnitude R0[ω] with the unchanged A[ω] yields the stylized time-domain data.
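The core step in this caption can be sketched in a few lines of NumPy. This is a minimal sketch under our own assumptions, not the authors' implementation: `spectral_style_transfer` is a hypothetical name, each signal is a (frames, channels) array of joint parameters with equal frame counts, `f_s` is assumed to carry the desired style while `f_r` shares the style of the target, and negative magnitudes are clamped to zero. Using `rfft`/`irfft` keeps the spectrum conjugate-symmetric, which is one simple way to satisfy the real-only time-domain constraint.

```python
import numpy as np

def spectral_style_transfer(f, f_s, f_r):
    """Hypothetical sketch: add the source-minus-reference magnitude difference to f.

    f, f_s, f_r: arrays of shape (frames, channels) with equal frame counts.
    """
    n = f.shape[0]
    spec = np.fft.rfft(f, axis=0)
    R = np.abs(spec)                          # target magnitude R[w]
    A = np.angle(spec)                        # target phase A[w], kept constant
    R_s = np.abs(np.fft.rfft(f_s, axis=0))    # source-style magnitude Rs[w]
    R_r = np.abs(np.fft.rfft(f_r, axis=0))    # reference-style magnitude Rr[w]
    # Apply the style difference; clamping at zero is our assumption.
    R_new = np.maximum(R + (R_s - R_r), 0.0)
    # irfft treats the half-spectrum as conjugate-symmetric, so the output is real.
    return np.fft.irfft(R_new * np.exp(1j * A), n=n, axis=0)

# Usage on random stand-in data (not real motion capture).
rng = np.random.default_rng(0)
f = rng.standard_normal((120, 3))
f_s = rng.standard_normal((120, 3))
f_r = rng.standard_normal((120, 3))
stylized = spectral_style_transfer(f, f_s, f_r)
print(stylized.shape)  # (120, 3)
```

Two sanity checks follow from the construction: when `f_s` equals `f_r` the style difference vanishes and `f` is returned unchanged, and with a zero reference and `f_s = f` every magnitude doubles, so the output is `2 * f`.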

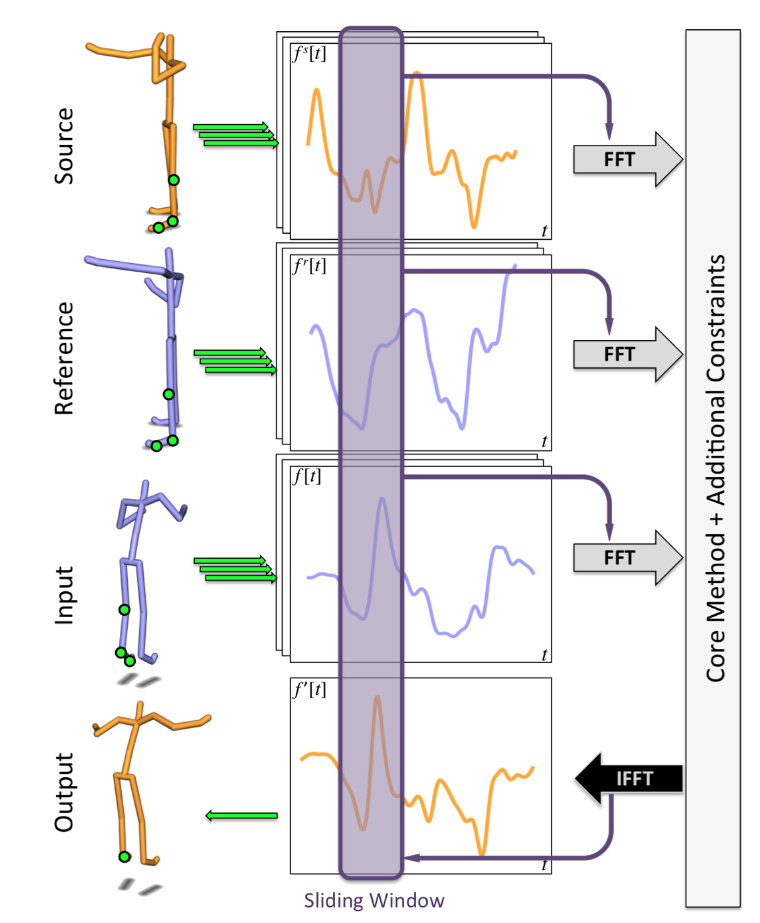

We generalize our core method in two ways: to handle arbitrarily long heterogeneous motion sequences, we process them through a sliding window filter; to take into account the phase relationship between joints, we introduce additional constraints based on parent and child joints in the skeleton hierarchy (see the paper for details).
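The sliding-window generalization can be sketched by running the same magnitude-difference transfer on overlapping windows of the target and blending the results. The window length, hop size, tapered overlap-add blending, and averaging of the style spectra over windows are all our assumptions, the parent/child phase constraints are not reproduced, and every function name below is hypothetical.

```python
import numpy as np

def window_starts(n_frames, win, hop):
    """Start indices of windows covering every frame (assumes n_frames >= win)."""
    starts = list(range(0, n_frames - win + 1, hop))
    if starts[-1] + win < n_frames:
        starts.append(n_frames - win)  # extra window flush with the last frame
    return starts

def mean_window_magnitude(clip, win, hop):
    """Average |rfft| over all windows of a style clip: shape (win // 2 + 1, channels)."""
    mags = [np.abs(np.fft.rfft(clip[s:s + win], axis=0))
            for s in window_starts(clip.shape[0], win, hop)]
    return np.mean(mags, axis=0)

def sliding_window_transfer(f, f_s, f_r, win=64, hop=16):
    """Apply the magnitude-difference transfer locally in time, then blend windows."""
    diff = mean_window_magnitude(f_s, win, hop) - mean_window_magnitude(f_r, win, hop)
    weights = np.hanning(win + 2)[1:-1][:, None]  # strictly positive taper
    out = np.zeros_like(f, dtype=float)
    norm = np.zeros((f.shape[0], 1))
    for s in window_starts(f.shape[0], win, hop):
        spec = np.fft.rfft(f[s:s + win], axis=0)
        mag = np.maximum(np.abs(spec) + diff, 0.0)  # keep the window's own phase
        seg = np.fft.irfft(mag * np.exp(1j * np.angle(spec)), n=win, axis=0)
        out[s:s + win] += weights * seg             # weighted overlap-add
        norm[s:s + win] += weights
    return out / norm

# Usage on random stand-in data: a long target and shorter style clips.
rng = np.random.default_rng(1)
f = rng.standard_normal((200, 3))
f_s = rng.standard_normal((100, 3))
f_r = rng.standard_normal((100, 3))
out = sliding_window_transfer(f, f_s, f_r)
print(out.shape)  # (200, 3)
```

Because each window is stylized independently, a long sequence mixing several actions is treated piecewise; normalizing by the summed weights means that, with identical source and reference clips, the input is reconstructed exactly.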

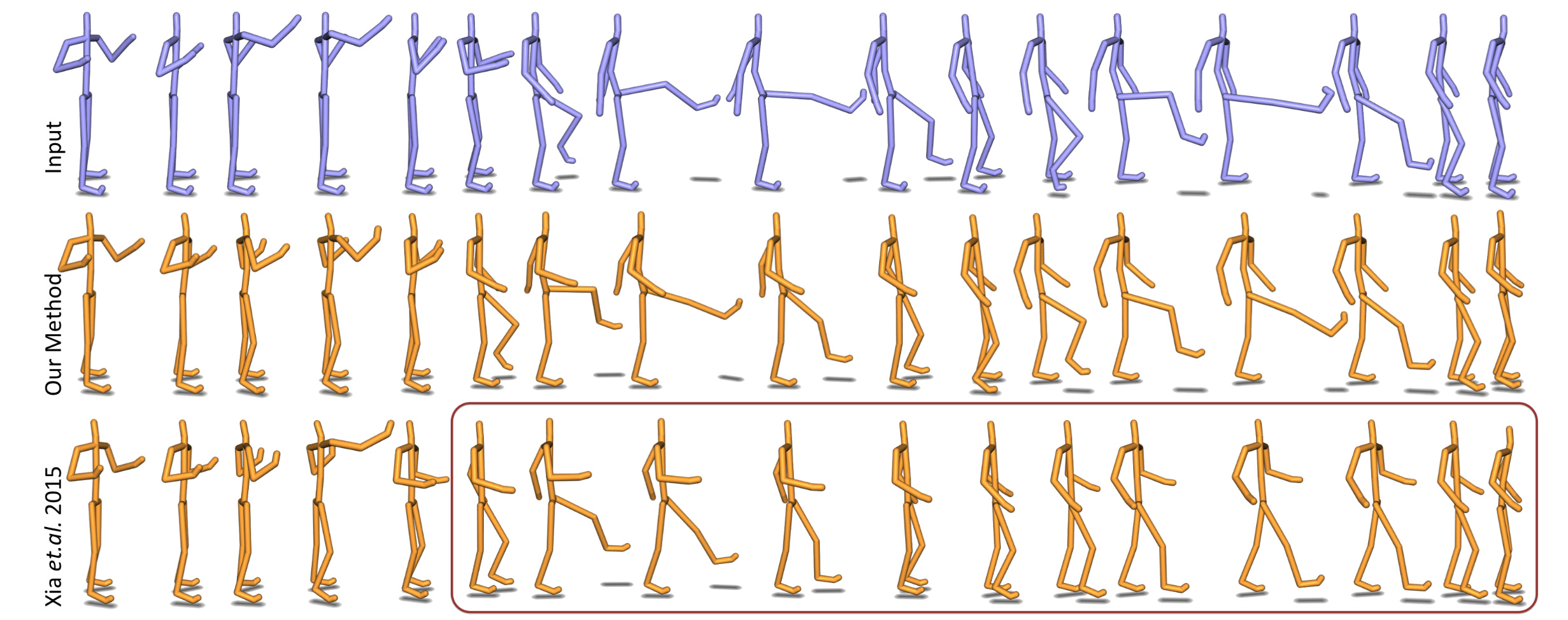

Key-frame visual comparison. Real motion capture input in ‘neutral’ style (top). ‘Sexy’ stylization with a limited database containing only ‘walk’ and ‘punch’ motions: our method (middle), [Xia et al. 2015] (bottom). Note that [Xia et al. 2015] renders the ‘kick’s similarly to ‘walk’ because ‘kick’ data is missing from the database.

BibTeX

@article{yumer2016spectral,

title = {Spectral Style Transfer for Human Motion between Independent Actions},

author = {Yumer, M. E. and Mitra, N. J.},

journal = {ACM Transactions on Graphics (Proceedings of SIGGRAPH 2016)},

volume = {35},

number = {4},

pages = {},

year = {2016},

}

Acknowledgements

We thank the authors of [Xia et al. 2015] for sharing their data. This work was partly supported by ERC Starting Grant SmartGeometry (StG-2013-335373), Marie Curie CIG 303541, and Adobe Research funding.