Sketch2CAD: Sequential CAD Modeling by Sketching in Context

Changjian Li¹, Hao Pan², Adrien Bousseau³, Niloy J. Mitra¹,⁴

¹University College London  ²Microsoft Research Asia

³Inria, Université Côte d’Azur  ⁴Adobe Research

SIGGRAPH Asia 2020

Abstract

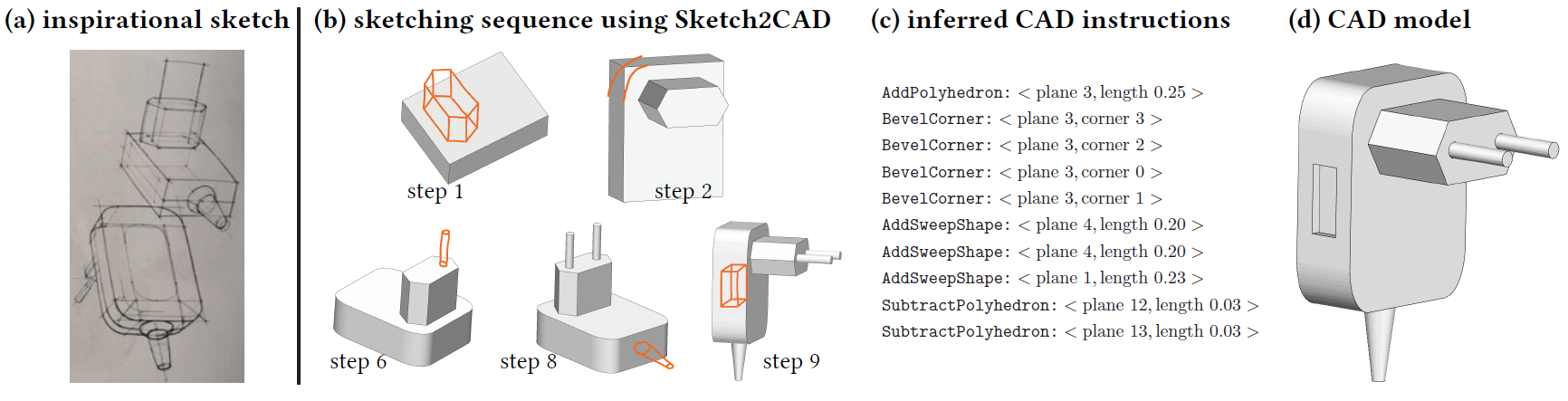

We present a sketch-based CAD modeling system in which users create objects incrementally by sketching the desired shape edits, which our system automatically translates into CAD operations. Our approach is motivated by the close similarity between the steps industrial designers follow to draw 3D shapes and the operations CAD modeling systems offer to create similar shapes. To overcome the strong ambiguity of parsing 2D sketches, we observe that in a sketching sequence, each step makes sense and can be interpreted in the *context* of what has been drawn before. In our system, this context corresponds to a partial CAD model, inferred in the previous steps, which we feed along with the input sketch to a deep neural network in charge of interpreting how the model should be modified by that sketch. Our deep network architecture recognizes the intended CAD operation and segments the sketch accordingly, such that a subsequent optimization estimates the parameters of the operation that best fit the segmented sketch strokes. Since no dataset of paired sketching and CAD modeling sequences exists, we train our system by generating synthetic sequences of CAD operations that we render as line drawings. We present a proof-of-concept realization of our algorithm supporting four frequently used CAD operations. Using our system, participants were able to quickly model a large and diverse set of objects, demonstrating that Sketch2CAD offers an alternative way of interacting with current CAD modeling systems.
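The abstract says an optimization fits the operator parameters to the segmented strokes. As a heavily simplified illustration (our own assumption, not the paper's formulation), consider fitting a single extrusion distance d when the base face boundary, its normal, an orthographic projection P, and one-to-one correspondences between extruded boundary points and sketch points are all known. The residual is then linear in d, so least squares has a closed form. The function name `fit_extrusion_distance` and all inputs are hypothetical.

```python
import numpy as np


def fit_extrusion_distance(base_pts, normal, stroke_pts, P):
    """Least-squares estimate of a scalar extrusion distance d.

    Hypothetical, simplified setting (not the paper's formulation):
      base_pts   (N, 3): 3D points on the base-face boundary
      normal     (3,):   unit extrusion direction (base-face normal)
      stroke_pts (N, 2): 2D sketch points, assumed to correspond one-to-one
                         with base_pts moved by d * normal
      P          (2, 3): orthographic projection matrix
    Minimizes sum_i || P (q_i + d * n) - s_i ||^2, which is linear in d.
    """
    a = P @ normal                       # image-space extrusion direction, shape (2,)
    r0 = base_pts @ P.T - stroke_pts     # residuals at d = 0, shape (N, 2)
    denom = len(base_pts) * float(a @ a)
    if denom == 0.0:
        raise ValueError("extrusion direction is parallel to the view direction")
    # Setting dE/dd = 0 gives d = -sum_i a . r0_i / (N |a|^2).
    return -float(np.sum(r0 @ a)) / denom


# Usage: a unit square at z = 0, extruded along +z, under an oblique orthographic view.
P = np.array([[1.0, 0.0, 0.5],
              [0.0, 1.0, 0.5]])
square = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0]], dtype=float)
n = np.array([0.0, 0.0, 1.0])
strokes = (square + 2.0 * n) @ P.T       # synthetic strokes for d = 2
print(fit_extrusion_distance(square, n, strokes, P))  # -> 2.0
```

With real freehand strokes there are no given correspondences and the fit must tolerate noise, which is why a practical system would fit against segmentation maps rather than matched points.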


Sketch2CAD Testing

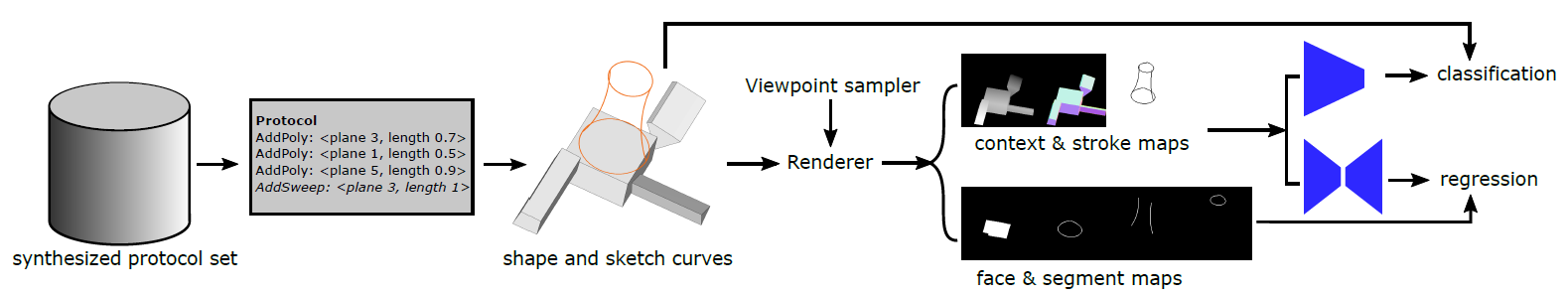

Given an existing shape and the input sketch strokes (shown in orange) for the current operation, we first compute the sketch map and the local context maps (i.e., depth and normal maps), which are fed to the operator classification and segmentation networks. The predicted operator type (sweep in this example) selects which of the output base-face and curve segmentation maps to use. From these maps, an optimization fits the parameters that define the operator, recovering the sketched operation instance. The recovered operation is then applied to the existing shape to produce the updated model, and the operation is appended to the protocol list.
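The per-step control flow described above can be sketched as follows. This is a minimal sketch with stub functions standing in for the networks and the optimization: `classify`, `segment`, `fit_parameters`, and `modeling_step` are hypothetical names, and the operator names other than "sweep" are assumed placeholders for the four supported operations. No geometry is built; the model is represented only by its protocol list.

```python
from dataclasses import dataclass, field
from typing import Dict, List

import numpy as np

# Hypothetical names for the four supported operators; only "sweep" is named in the text.
OP_TYPES = ("extrude", "bevel", "taper", "sweep")


@dataclass
class Operation:
    op_type: str
    params: Dict[str, float]


@dataclass
class Model:
    # The model is represented by its protocol (ordered list of operations).
    protocol: List[Operation] = field(default_factory=list)


def classify(sketch, depth, normal) -> str:
    """Stub for the operator classification network."""
    return "sweep"


def segment(sketch, depth, normal) -> Dict[str, Dict[str, np.ndarray]]:
    """Stub for the segmentation network: base-face and curve maps per operator type."""
    return {op: {"base_face": np.zeros_like(sketch), "curve": np.zeros_like(sketch)}
            for op in OP_TYPES}


def fit_parameters(op_type: str, base_face: np.ndarray, curve: np.ndarray) -> Dict[str, float]:
    """Stub for the per-operator parameter-fitting optimization."""
    return {"base_face_pixels": float(base_face.sum()), "curve_pixels": float(curve.sum())}


def modeling_step(model: Model, sketch, depth, normal) -> Operation:
    op_type = classify(sketch, depth, normal)                           # 1. recognize the operator
    maps = segment(sketch, depth, normal)[op_type]                      # 2. keep only its maps
    params = fit_parameters(op_type, maps["base_face"], maps["curve"])  # 3. fit parameters
    op = Operation(op_type, params)
    # 4. A real system would apply `op` to the shape geometry here;
    #    this sketch only records it in the protocol list.
    model.protocol.append(op)
    return op


h = w = 64
model = Model()
op = modeling_step(model, np.zeros((h, w)), np.zeros((h, w)), np.zeros((h, w, 3)))
print(op.op_type, len(model.protocol))  # -> sweep 1
```

Keeping the protocol as the source of truth means the shape can always be regenerated by replaying the recorded operations, which matches the idea of CAD modeling as a sequence of operations.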

Sketch2CAD Training

We synthetically generated 10k protocols of diverse lengths, from which we procedurally generated 40k training shapes. For each protocol, we execute all operations up to, but not including, the last one; the sketch curves of that last operation are then built and overlaid on the resulting shape. The sketch curves and the existing shape are rendered from suitable viewpoints to produce the input sketch and local context maps, as well as the ground-truth face and curve segmentation maps, which are used to train the operator classifier and the corresponding segmentation network.
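The bookkeeping behind this data generation, sampling a random protocol and splitting it into the context operations and the final sketched operation, can be sketched as below. The operator names (other than "sweep"), the length range 2 to 6, and the `seed` field standing in for operator parameters are all assumptions for illustration; shape execution and rendering are omitted.

```python
import random
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical names for the four supported operators.
OP_TYPES = ("extrude", "bevel", "taper", "sweep")


@dataclass
class Op:
    op_type: str
    seed: int  # stands in for the randomly sampled operator parameters


def random_protocol(rng: random.Random, min_len: int = 2, max_len: int = 6) -> List[Op]:
    """Sample a protocol: a random-length sequence of random operations."""
    n = rng.randint(min_len, max_len)  # inclusive bounds
    return [Op(rng.choice(OP_TYPES), rng.randrange(10**6)) for _ in range(n)]


def make_sample(protocol: List[Op]) -> Tuple[List[Op], Op, str]:
    """Split a protocol into (context operations, sketched operation, class label)."""
    context, target = protocol[:-1], protocol[-1]
    # A full pipeline would now: (1) execute `context` to build the existing shape,
    # (2) build the sketch curves of `target` and overlay them on that shape,
    # (3) render sketch, depth, normal and ground-truth segmentation maps
    #     from a chosen viewpoint. Those geometry steps are omitted here.
    return context, target, target.op_type


rng = random.Random(0)
samples = [make_sample(random_protocol(rng)) for _ in range(1000)]
print(len(samples))  # -> 1000
```

The class label of each sample is simply the type of the final operation, which is what the operator classifier is trained to predict.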

Results Gallery

Various modeling sequences created during design sessions using Sketch2CAD. The corresponding protocol steps are shown in the supplemental material. Please also refer to the supplementary video.

Bibtex

@Article{Li:2020:Sketch2CAD,
  Title = {Sketch2CAD: Sequential CAD Modeling by Sketching in Context},
  Author = {Changjian Li and Hao Pan and Adrien Bousseau and Niloy J. Mitra},
  Journal = {ACM Trans. Graph. (Proceedings of SIGGRAPH Asia 2020)},
  Year = {2020},
  Number = {6},
  Volume = {39},
  Pages = {164:1--164:14},
  numpages = {14},
  DOI = {10.1145/3414685.3417807},
  Publisher = {ACM}
}

Acknowledgements

The authors would like to thank the reviewers for their valuable and detailed suggestions, the participants of the user evaluation, and Nathan Carr, Yuxiao Guo, and Zhiming Cui for valuable discussions. The work of Niloy was supported by an ERC Grant (SmartGeometry 335373), a Google Faculty Award, and gifts from Adobe, and the work of Adrien was supported by the ERC Starting Grant D3 (ERC-2016-STG 714221) and by research and software donations from Adobe. Finally, Changjian Li especially wishes to thank Huahua Guo for endless and invaluable love and support during the difficult times of COVID-19.