BigSUR: Large-scale Structured Urban Reconstruction

- Tom Kelly¹
- John Femiani²
- Peter Wonka³
- Niloy J. Mitra¹

¹University College London, ²Miami University, ³KAUST

SIGGRAPH Asia 2017

Abstract

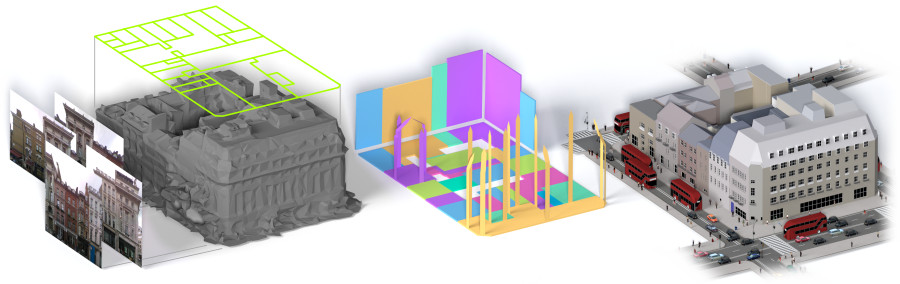

The creation of high-quality, semantically parsed 3D models for dense metropolitan areas is a fundamental urban modeling problem. Although recent advances in acquisition techniques and processing algorithms have resulted in large-scale imagery or 3D polygonal reconstructions, such data sources are typically noisy and incomplete, and they lack semantic structure. In this paper, we present an automatic data fusion technique that produces high-quality structured models of city blocks. From coarse polygonal meshes, street-level imagery, and GIS footprints, we formulate a binary integer program that globally balances sources of error to produce semantically parsed mass models with associated façade elements. We demonstrate our system on four city regions of varying complexity; our examples typically contain densely built urban blocks spanning hundreds of buildings. In our largest example, we produce a structured model of 37 city blocks spanning a total of 1,011 buildings at a scale and quality previously impossible to achieve automatically.
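The abstract names the formulation without spelling it out. The toy Python sketch below illustrates what "a binary integer program that globally balances sources of error" can look like in general: each candidate element gets a binary variable, its cost is a weighted sum of its disagreement with each data source, and constraints force a consistent selection. Everything here is hypothetical and is not the paper's actual formulation: the candidate names, error values, equal source weights, and the "each wall region covered exactly once" constraint are illustrative assumptions, and exhaustive enumeration stands in for a real integer-programming solver (it is only feasible for a handful of variables).

```python
from itertools import product

# Hypothetical candidates: each proposes a façade element covering some wall
# regions, with an (invented) error score against each data source.
CANDIDATES = {
    "A": {"covers": {0, 1}, "err": {"mesh": 0.2, "image": 0.5, "footprint": 0.1}},
    "B": {"covers": {0},    "err": {"mesh": 0.1, "image": 0.1, "footprint": 0.3}},
    "C": {"covers": {1},    "err": {"mesh": 0.3, "image": 0.1, "footprint": 0.2}},
    "D": {"covers": {1, 2}, "err": {"mesh": 0.4, "image": 0.2, "footprint": 0.2}},
    "E": {"covers": {2},    "err": {"mesh": 0.2, "image": 0.3, "footprint": 0.1}},
}
REGIONS = {0, 1, 2}
# Assumed equal weights; changing them shifts how much each source is trusted.
WEIGHTS = {"mesh": 1.0, "image": 1.0, "footprint": 1.0}


def cost(name):
    """Weighted sum of a candidate's errors across all data sources."""
    err = CANDIDATES[name]["err"]
    return sum(WEIGHTS[s] * err[s] for s in WEIGHTS)


def solve():
    """Brute-force the binary program: minimise total cost subject to
    every region being covered by exactly one selected candidate."""
    names = sorted(CANDIDATES)
    best, best_cost = None, float("inf")
    for x in product((0, 1), repeat=len(names)):  # every 0/1 assignment
        chosen = [n for n, xi in zip(names, x) if xi]
        counts = {r: 0 for r in REGIONS}
        for n in chosen:
            for r in CANDIDATES[n]["covers"]:
                counts[r] += 1
        if any(c != 1 for c in counts.values()):
            continue  # infeasible: a region is uncovered or covered twice
        total = sum(cost(n) for n in chosen)
        if total < best_cost:
            best, best_cost = chosen, total
    return best, best_cost


chosen, total = solve()
print(chosen, round(total, 2))  # ['B', 'D'] 1.3
```

With these made-up numbers the feasible selections are {A, E} (cost 1.4), {B, C, E} (1.7), and {B, D} (1.3), so the program picks B and D. A practical system would hand the same objective and constraints to an ILP solver rather than enumerate all 2ⁿ assignments.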

Press coverage

xyHt carried an article on BigSUR in 2018.

Images

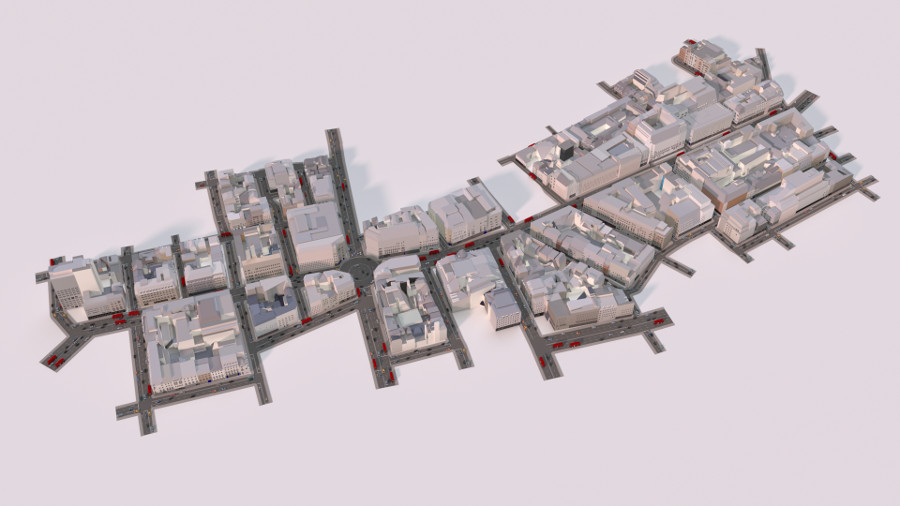

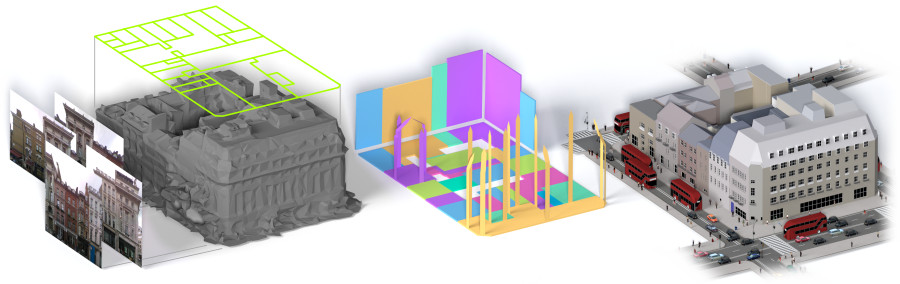

A reconstruction of the area around Oxford Circus in London.

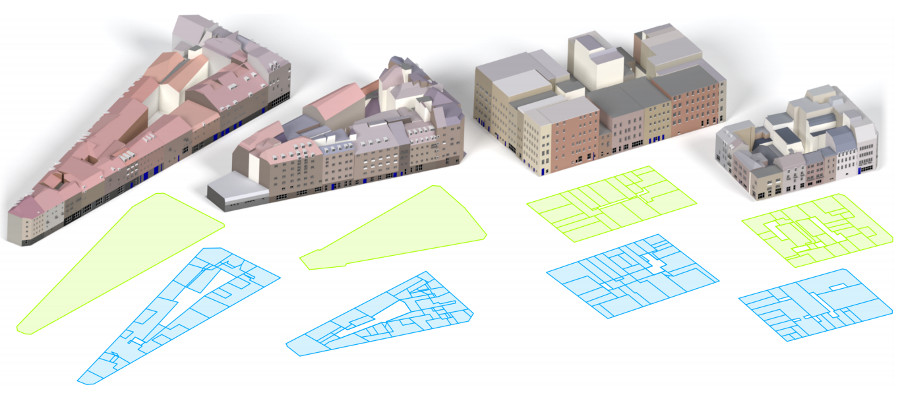

From left to right: Two blocks from Oviedo (Spain), a block from Manhattan (New York), and Regent Street (London).

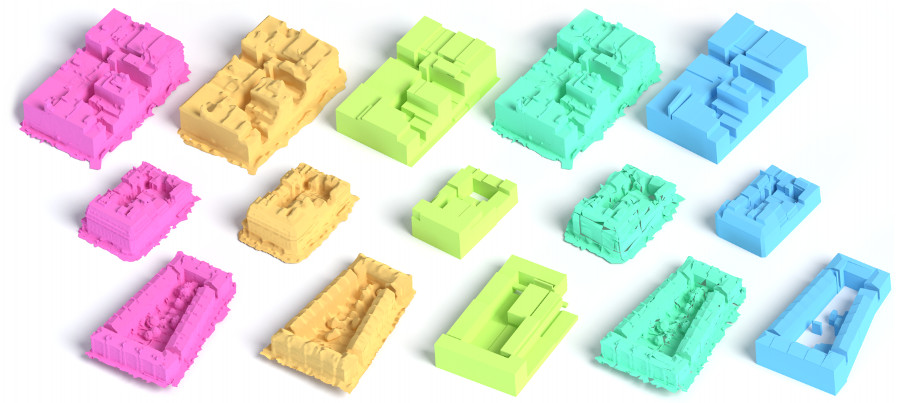

Rows: datasets. Left to right: the original mesh, Poisson reconstruction, Manhattan box fitting (Li et al. 2016), Structure-Aware Mesh Decimation (Salinas et al. 2015), and our technique.

A close-up of the Oxford Circus result.

BibTeX

```bibtex
@article{Kelly:SIGA:2017,
  title     = {BigSUR: Large-scale Structured Urban Reconstruction},
  author    = {Tom Kelly and John Femiani and Peter Wonka and Niloy J. Mitra},
  journal   = {{ACM} Transactions on Graphics},
  volume    = {36},
  number    = {6},
  month     = nov,
  year      = {2017},
  articleno = {204},
  numpages  = {16},
  url       = {https://doi.org/10.1145/3130800.3130823},
  doi       = {10.1145/3130800.3130823}
}
```

Acknowledgements

We thank Florent Lafarge, Pierre Alliez, Pascal Müller, and Lama Affara for providing us with comparisons, software, and source code, as well as Virginia Unkefer, Robin Roussel, Carlo Innamorati, and Aron Monszpart for their feedback. This work was supported by the ERC Starting Grant (SmartGeometry StG-2013-335373), KAUST-UCL grant (OSR-2015-CCF-2533), the KAUST Office of Sponsored Research (award No. OCRF-2014-CGR3-62140401), the Salt River Project Agricultural Improvement and Power District Cooperative Agreement No. 12061288, and the Visual Computing Center (VCC) at KAUST.