Learning to Predict Motion from Videos via Long-term Extrapolation and Interpolation

- Sébastien Ehrhardt1

- Aron Monszpart2,3

- Niloy J. Mitra2

- Andrea Vedaldi1

1University of Oxford

2University College London

3Niantic

Abstract

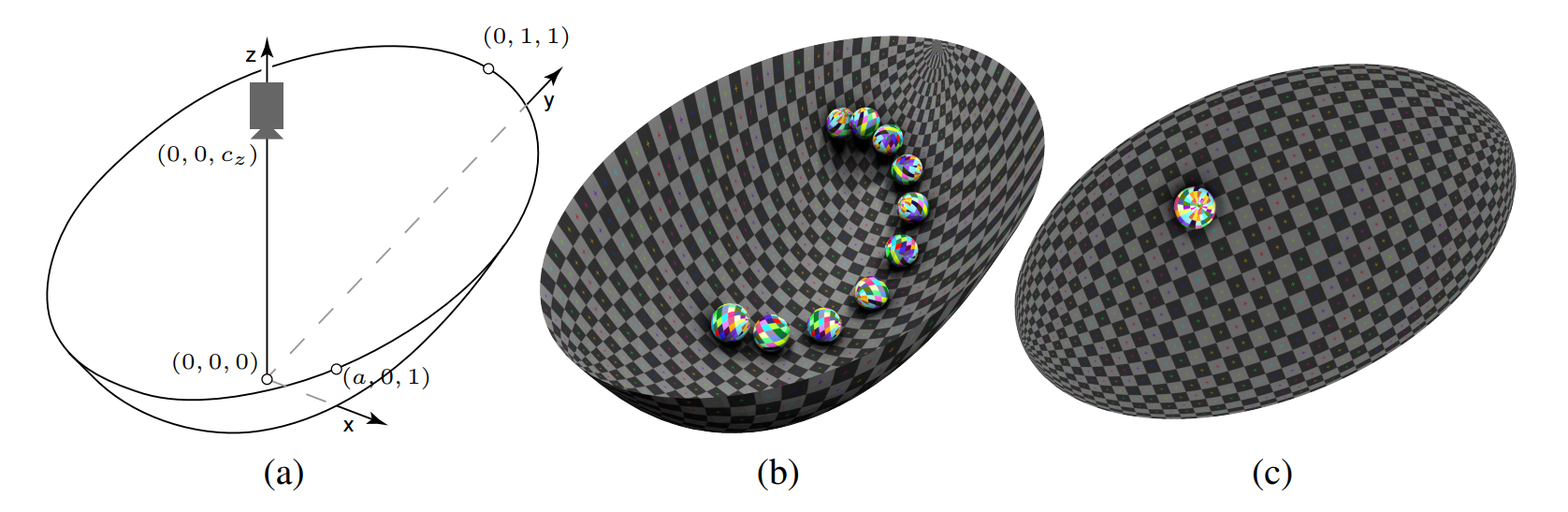

While the basic laws of Newtonian mechanics are well understood, explaining a physical scenario still requires manually modeling the problem with suitable equations and estimating the associated parameters. To leverage the approximation capabilities of artificial intelligence techniques in such physics-related contexts, researchers have handcrafted relevant states and then used neural networks to learn the state transitions, with simulation runs as training data. Unfortunately, such approaches are unsuited to modeling complex real-world scenarios, where manually authoring relevant state spaces tends to be tedious and challenging. In this work, we investigate whether neural networks can implicitly learn the physical states of real-world mechanical processes from visual data alone, while internally modeling non-homogeneous environments, and in the process enable long-term physical extrapolation. We develop a recurrent neural network architecture for this task and also characterize the resulting uncertainties in the form of evolving variance estimates. We evaluate our setup, on both synthetic and real data, by extrapolating the motion of rolling ball(s) on bowls of varying shape and orientation, and on arbitrary heightfields, using only images as input. We report significant improvements over existing image-based methods both in terms of accuracy of predictions and complexity of scenarios; and report competitive performance with approaches that, unlike ours, assume access to internal physical states.
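To make the idea of "evolving variance estimates" concrete, here is a minimal sketch of one common way to train a predictor that outputs both a position and an uncertainty: a Gaussian negative log-likelihood. This is an illustrative assumption, not necessarily the loss used in the paper; the function names `gaussian_nll` and `sequence_nll`, the 2D image-plane positions, and the isotropic (single scalar) variance per time step are all hypothetical choices made for the example.

```python
import math


def gaussian_nll(pred_xy, var, true_xy):
    """Negative log-likelihood of a 2D point under an isotropic Gaussian.

    pred_xy: predicted (x, y) position (hypothetical network output).
    var:     predicted variance sigma^2, shared by both axes (assumption).
    true_xy: ground-truth (x, y) position.
    """
    if var <= 0:
        raise ValueError("variance must be positive")
    dx = true_xy[0] - pred_xy[0]
    dy = true_xy[1] - pred_xy[1]
    sq_err = dx * dx + dy * dy
    # -log N(x; mu, var * I) in 2D = log(2 * pi * var) + ||x - mu||^2 / (2 * var)
    return math.log(2.0 * math.pi * var) + sq_err / (2.0 * var)


def sequence_nll(preds, variances, truths):
    """Average the per-step loss over an extrapolated trajectory."""
    steps = list(zip(preds, variances, truths))
    return sum(gaussian_nll(p, v, t) for p, v, t in steps) / len(steps)


# Toy trajectory: the error grows with the horizon, and so does the variance.
preds = [(0.0, 0.0), (1.0, 1.0), (2.0, 2.0)]
truths = [(0.0, 0.0), (1.2, 0.9), (2.5, 1.4)]
variances = [0.01, 0.05, 0.3]
loss = sequence_nll(preds, variances, truths)
```

Under this kind of loss, a model is penalized both for reporting too little variance when its error is large and for reporting too much variance when its error is small, so the predicted variance can grow over time as long-term extrapolation becomes less certain.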

Bibtex

@ARTICLE{EhrhardtEtAl_PredictionHeightfields_CVIU2019,

author = {{S\'ebastien} Ehrhardt and Aron Monszpart and Niloy {J. Mitra} and Andrea Vedaldi},

title = "{Taking Visual Motion Prediction To New Heightfields}",

journal = {Computer Vision and Image Understanding},

year = 2019

}

@ARTICLE{PredictionHeightfields:emmv:2017,

author = {{S\'ebastien} Ehrhardt and Aron Monszpart and Niloy {J. Mitra} and Andrea Vedaldi},

title = "{Taking Visual Motion Prediction To New Heightfields}",

journal = {arXiv preprint arXiv:1712.09448},

archivePrefix = "arXiv",

eprint = {1712.09448},

year = 2017,

month = dec

}

@ARTICLE{LearningMechanics:emvm:2017,

author = {{S\'ebastien} Ehrhardt and Aron Monszpart and Andrea Vedaldi and Niloy {J. Mitra}},

title = "{Learning to Represent Mechanics via Long-term Extrapolation and Interpolation}",

journal = {arXiv preprint arXiv:1706.02179},

archivePrefix = "arXiv",

eprint = {1706.02179},

year = 2017,

month = jun

}

@ARTICLE{LearningPhysicalPredictor:emmv:2017,

author = {{S\'ebastien} Ehrhardt and Aron Monszpart and Niloy {J. Mitra} and Andrea Vedaldi},

title = "{Learning A Physical Long-term Predictor}",

journal = {arXiv e-prints arXiv:1703.00247},

archivePrefix = "arXiv",

eprint = {1703.00247},

year = 2017,

month = mar

}

Videos

Comparison of the extrapolation capabilities of different systems with and without angular velocity on 8 videos from the validation set.

Extrapolation capabilities of the system trained on a fixed heightfield, shown on 6 example scenes from the validation set. Left column with insets: the first 4 input frames the system sees. Middle image: the system's prediction of the object's position having seen only the left images. Right image: ground-truth position.